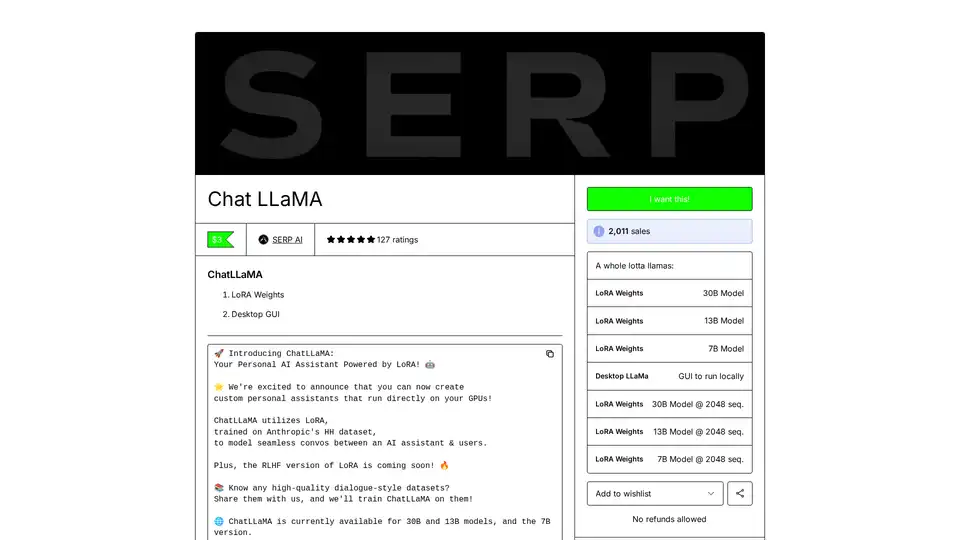

ChatLLaMA

Overview of ChatLLaMA

What is ChatLLaMA?

ChatLLaMA is a personal AI assistant built by applying LoRA (Low-Rank Adaptation) fine-tuning to LLaMA models. The open-source tool lets users run a customized conversational AI on their own GPUs, with no cloud dependency and therefore better privacy. It is trained on Anthropic's HH dataset, which focuses on helpful and harmless dialogues, so it is geared toward modeling natural conversations between a user and an AI assistant. Whether you're a developer experimenting with local AI setups or an enthusiast looking for a tailored chatbot, ChatLLaMA brings large language model capabilities to your desktop.

ChatLLaMA is currently available for the 30B, 13B, and 7B LLaMA models, with variants that support sequence lengths of up to 2048 tokens for longer interactions. It also ships with a Desktop GUI that simplifies setup and use for non-experts. At $3, it has recorded more than 2,000 sales and holds a 4.8-star rating from 127 users, 92% of whom gave it five stars. Reviewers praise the idea, although some admit they have not fully tested it yet, so the rating reflects enthusiasm for its potential as much as hands-on experience.
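The 2048-sequence variants can attend to at most 2048 tokens, and that window has to hold the earlier conversation, the new message, and the reply. A common way for a chat front end to stay within it is to drop the oldest turns first. The sketch below assumes that strategy (the ChatLLaMA GUI may manage context differently), and `count_words` is a hypothetical stand-in for the real LLaMA tokenizer, which typically produces more tokens than there are words.

```python
def count_words(text):
    """Hypothetical stand-in for a real tokenizer: counts whitespace-separated words."""
    return len(text.split())


def trim_history(turns, max_tokens=2048, reserve_for_reply=256, count_tokens=count_words):
    """Keep the most recent turns that fit in the context window.

    Part of the window is reserved for the model's reply; older turns are
    dropped first once the remaining budget is exhausted.
    """
    budget = max_tokens - reserve_for_reply
    kept, used = [], 0
    for turn in reversed(turns):  # walk from newest to oldest
        cost = count_tokens(turn)
        if used + cost > budget:
            break
        kept.append(turn)
        used += cost
    return list(reversed(kept))  # restore chronological order


history = ["old " * 1000, "middle " * 700, "latest " * 100]
print([t.split()[0] for t in trim_history(history)])  # ['middle', 'latest']
```

Here the budget is 2048 - 256 = 1792 words, so the newest two turns (800 words) fit but adding the oldest one (1000 more) would not.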

How Does ChatLLaMA Work?

At its core, ChatLLaMA relies on LoRA, an efficient fine-tuning method that adapts a pre-trained large language model such as LLaMA without retraining all of its weights. LoRA freezes the original weight matrices and, for selected layers, learns a pair of small low-rank matrices whose product is added to the frozen weights. The result is a lightweight adapter (the LoRA weights) that can be loaded on top of a base LLaMA model to specialize it for conversation.
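To make the low-rank idea concrete, here is a minimal NumPy sketch of a LoRA-adapted linear layer. It is a generic illustration of the technique, not ChatLLaMA's training code; the rank `r`, scaling factor `alpha`, and all shapes are illustrative assumptions. The frozen weight `W` is never modified, the update is the product `B @ A` scaled by `alpha / r`, and `B` starts at zero so the adapter initially changes nothing.

```python
import numpy as np


def lora_forward(x, W, A, B, alpha):
    """LoRA linear layer: y = x W^T + (alpha / r) * x A^T B^T.

    W: frozen pretrained weight, shape (d_out, d_in)
    A: trainable down-projection, shape (r, d_in)
    B: trainable up-projection, shape (d_out, r)
    """
    r = A.shape[0]
    return x @ W.T + (alpha / r) * (x @ A.T) @ B.T


def merge_lora(W, A, B, alpha):
    """Fold the adapter into the base weight: W' = W + (alpha / r) * B A."""
    r = A.shape[0]
    return W + (alpha / r) * (B @ A)


def lora_param_count(d_out, d_in, r):
    """Trainable parameters LoRA adds to one d_out x d_in weight matrix."""
    return r * (d_in + d_out)


rng = np.random.default_rng(0)
d_in, d_out, r, alpha = 16, 12, 4, 8  # illustrative sizes, not ChatLLaMA's settings
W = rng.standard_normal((d_out, d_in))
A = rng.standard_normal((r, d_in)) * 0.01
B = np.zeros((d_out, r))  # zero init: the adapted layer starts identical to the base layer
x = rng.standard_normal((3, d_in))
print(np.allclose(lora_forward(x, W, A, B, alpha), x @ W.T))  # True

B = rng.standard_normal((d_out, r))  # pretend training has updated B
print(np.allclose(lora_forward(x, W, A, B, alpha), x @ merge_lora(W, A, B, alpha).T))  # True

full = 4096 * 4096
added = lora_param_count(4096, 4096, 8)
print(full, added, f"{added / full:.2%}")  # 16777216 65536 0.39%
```

For a 4096 × 4096 projection (the size of an attention projection in LLaMA 7B), a rank-8 adapter trains 65,536 parameters instead of about 16.8 million. Because the adapter can be merged into `W`, a merged model runs at the same cost as the base model.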

The training data is Anthropic's HH (Helpful and Harmless) dataset, a collection of human-assistant dialogues that emphasizes useful, ethical responses. Training on it is meant to make ChatLLaMA's replies engaging as well as safe and contextually appropriate, although fine-tuning alone cannot guarantee either. Because the examples are multi-turn exchanges, the model learns to sustain a realistic back-and-forth, which makes interactions feel more natural than with a generic chatbot.
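Anthropic's HH data is published on Hugging Face as `Anthropic/hh-rlhf`; each record holds a `chosen` and a `rejected` transcript in which turns start with `\n\nHuman:` and `\n\nAssistant:`. The sketch below splits such a transcript into speaker turns, one plausible preprocessing step before fine-tuning. ChatLLaMA does not document its exact pipeline, and the sample record here is made up for illustration.

```python
import re

# Turns in HH transcripts start with "\n\nHuman:" or "\n\nAssistant:"
TURN_MARKER = re.compile(r"\n\n(Human|Assistant):")


def parse_hh_transcript(text):
    """Split an HH-style transcript into (speaker, utterance) pairs."""
    parts = TURN_MARKER.split(text)
    # With one capturing group, re.split returns [prefix, speaker, text, speaker, text, ...]
    return [(speaker, utterance.strip()) for speaker, utterance in zip(parts[1::2], parts[2::2])]


# Made-up record in the same shape as the public HH data
record = {
    "chosen": "\n\nHuman: How do I boil an egg?\n\nAssistant: Put it in boiling water for about nine minutes.",
    "rejected": "\n\nHuman: How do I boil an egg?\n\nAssistant: Figure it out yourself.",
}
for speaker, utterance in parse_hh_transcript(record["chosen"]):
    print(f"{speaker}: {utterance}")
```

A supervised fine-tuning run might use only the `chosen` transcripts; the `rejected` ones matter mainly for preference-based training such as RLHF.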

An upcoming RLHF (Reinforcement Learning from Human Feedback) version promises better alignment with user preferences by refining responses based on human ratings. To run ChatLLaMA, download the LoRA weights for your chosen model size and load them into a local LLaMA inference setup. The Desktop GUI streamlines this: launch the interface, select your model, load the weights, and start chatting. Everything runs on your own GPU, so there are no network round-trips to a remote server.
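ChatLLaMA has not described its RLHF recipe. In common RLHF pipelines, a reward model is first trained on human preference pairs (such as HH's chosen/rejected transcripts) with a pairwise loss, and the chat model is then optimized against that reward, for example with PPO. The sketch below shows only the pairwise loss on scalar rewards, in plain Python for clarity; it is an illustration of the general technique, not the project's code.

```python
import math


def pairwise_preference_loss(reward_chosen, reward_rejected):
    """Bradley-Terry pairwise loss: -log(sigmoid(r_chosen - r_rejected)).

    Small when the reward model scores the human-preferred reply higher.
    """
    margin = reward_chosen - reward_rejected
    # Numerically stable softplus(-margin), which equals -log(sigmoid(margin))
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


print(round(pairwise_preference_loss(2.0, 0.0), 4))  # 0.1269: preferred reply ranked higher
print(round(pairwise_preference_loss(0.0, 2.0), 4))  # 2.1269: ranking reversed
```

Minimizing this loss over many human-labeled pairs pushes the reward model to score preferred replies higher, which is what the later reinforcement-learning stage optimizes for.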

No foundation model weights are provided. ChatLLaMA is intended for research and assumes you already have access to the base LLaMA models, which Meta distributes separately under its own license. This modular design adds flexibility: you can, for example, experiment with different base models or contribute datasets for future training iterations.

How to Use ChatLLaMA?

Getting started with ChatLLaMA is straightforward, especially with its Desktop GUI. Here's a step-by-step guide:

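Acquire the Base Model: Obtain the LLaMA 7B, 13B, or 30B model weights from an authorized source, in a format your inference library can load (for example, the Hugging Face Transformers format). Make sure your system has a compatible GPU (NVIDIA recommended) with enough VRAM: roughly 8 GB is a practical floor for the 7B model when it is loaded in 8-bit or 4-bit precision, and the larger models need considerably more.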

Purchase and Download LoRA Weights: For $3, get the ChatLLaMA LoRA weights for your model size. They come in standard and 2048-sequence-length variants, the latter for handling longer contexts.

Install the Desktop GUI: The open-source GUI is provided as part of the package. Install its dependencies, such as Python, PyTorch, and a LLaMA-compatible inference library (e.g., llama.cpp for efficient inference), then run the GUI executable to set up your environment.

Load and Launch: In the GUI, point to your base model and LoRA weights. Configure settings like temperature for response creativity or max tokens for output length. Initiate a chat session to test conversational flow.

Customize and Experiment: Input prompts to simulate dialogues. For advanced users, tweak the LoRA adapter or integrate with scripts for automation. If you encounter setup issues, the active Discord community offers real-time support.
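The temperature and max-tokens settings from the launch step are standard decoding controls. The sketch below shows what they typically do: temperature divides the model's logits before the softmax (lower values make output more deterministic, higher values more varied), and max tokens caps the generation loop. `toy_model` and the `next_logits` callable are hypothetical stand-ins for a real model; this is not ChatLLaMA's GUI code.

```python
import math
import random


def sample_with_temperature(logits, temperature, rng):
    """Pick a token index from raw logits after temperature scaling."""
    if temperature <= 0:
        # Treat temperature 0 as greedy decoding: always take the top token
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [x / temperature for x in logits]
    top = max(scaled)
    weights = [math.exp(s - top) for s in scaled]  # softmax numerators, shifted for stability
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


def generate(next_logits, max_new_tokens=64, temperature=0.7, eos_id=None, seed=0):
    """Sampling loop: stop at the end-of-sequence token or after max_new_tokens."""
    rng = random.Random(seed)
    tokens = []
    for _ in range(max_new_tokens):
        token = sample_with_temperature(next_logits(tokens), temperature, rng)
        if token == eos_id:
            break
        tokens.append(token)
    return tokens


def toy_model(tokens):
    """Hypothetical 3-token 'model' that always prefers token 1."""
    return [0.0, 5.0, 1.0]


print(generate(toy_model, max_new_tokens=5, temperature=0))  # [1, 1, 1, 1, 1]
print(len(generate(toy_model, max_new_tokens=5, temperature=1.5)))  # 5
```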

The GUI handles much of the heavy lifting, so it is approachable even if you're new to AI deployment. Response times depend on your GPU, the model size, and the length of the reply; on capable hardware, short replies come back quickly.
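Behind a chat interface, or in your own automation scripts, the conversation has to be rendered into a single prompt string. ChatLLaMA's exact prompt template is not documented here; since it was trained on HH data, the sketch assumes the HH `Human:`/`Assistant:` layout. `generate_reply` is a hypothetical callable wrapping whatever local inference setup you use, and `fake_reply` merely stands in for it.

```python
def build_prompt(history, user_message):
    """Render a conversation in HH-style Human:/Assistant: turns.

    The prompt ends with an open "Assistant:" turn for the model to complete.
    """
    parts = [f"\n\n{speaker}: {text}" for speaker, text in history]
    parts.append(f"\n\nHuman: {user_message}")
    parts.append("\n\nAssistant:")
    return "".join(parts)


def run_script(messages, generate_reply):
    """Feed a list of user messages through the model, keeping the running history."""
    history = []
    for message in messages:
        reply = generate_reply(build_prompt(history, message)).strip()
        history += [("Human", message), ("Assistant", reply)]
    return history


def fake_reply(prompt):
    """Hypothetical stand-in for a real model call."""
    return " OK"


print(repr(build_prompt([("Human", "Hi"), ("Assistant", "Hello!")], "What is LoRA?")))
print(run_script(["Hi", "Bye"], fake_reply))
```

Keeping the history as (speaker, text) pairs rather than one growing string makes it easy to trim old turns before each call.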

Why Choose ChatLLaMA?

In a landscape dominated by cloud-based AI such as ChatGPT, ChatLLaMA stands out for running locally. After the one-time purchase there is no subscription, no data is sent to external servers, and you keep full control over your AI interactions, which suits privacy-conscious users and people with limited internet access. Its LoRA-based approach is also resource-efficient: it delivers fine-tuned behavior without the overhead of full model training, which can cost thousands of dollars in compute.

User feedback underscores its value: one reviewer called the concept "sweet," while others appreciate the potential for custom assistants. High ratings and strong sales suggest that ChatLLaMA resonates with the AI community. The project's open-source ethos also invites collaboration: developers are encouraged to contribute code and can receive GPU resources in exchange, arranged via Discord.

Compared with alternatives, ChatLLaMA's training on a dialogue dataset like HH targets natural conversation specifically. It is not just a chatbot but a foundation for building specialized assistants, from research tools to personal productivity aids.

Who is ChatLLaMA For?

ChatLLaMA is tailored for a diverse audience:

AI Researchers and Developers: Perfect for experimenting with LoRA fine-tuning, RLHF integration, or dataset contributions. If you're building open-source AI apps, this tool provides a ready-made conversational backbone.

Tech Enthusiasts and Hobbyists: Run your own AI companion offline, customizing it for fun or learning. No advanced coding required thanks to the GUI.

Privacy-Focused Users: Businesses or individuals wary of cloud AI can deploy secure, local instances for internal chats or prototyping.

Educators and Students: Use it to explore large language models hands-on, simulating ethical AI dialogues without infrastructure barriers.

It's especially suited to people with mid-range GPUs, since the 7B model runs on modest hardware. However, it is research-oriented: you have to obtain and manage the base models yourself, so it is not plug-and-play for absolute beginners.
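A rough estimate of weight memory shows why the 7B model suits mid-range GPUs: it is approximately the parameter count times the bytes stored per parameter. The sketch uses the nominal model sizes (actual parameter counts differ slightly) and ignores activations, the KV cache, and framework overhead, so real usage is somewhat higher. At 16-bit precision the 7B weights alone take about 14 GB, so fitting the model in around 8 GB of VRAM generally requires 8-bit or 4-bit quantization.

```python
# Approximate storage per parameter at common precisions
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion, precision):
    """GB (1e9 bytes) needed to hold the weights alone; runtime overhead is extra."""
    return params_billion * BYTES_PER_PARAM[precision]


for size in (7, 13, 30):  # nominal LLaMA sizes; actual parameter counts differ slightly
    row = ", ".join(f"{p}: {weight_memory_gb(size, p):.1f} GB" for p in BYTES_PER_PARAM)
    print(f"LLaMA {size}B -> {row}")
# LLaMA 7B -> fp16: 14.0 GB, int8: 7.0 GB, int4: 3.5 GB
# LLaMA 13B -> fp16: 26.0 GB, int8: 13.0 GB, int4: 6.5 GB
# LLaMA 30B -> fp16: 60.0 GB, int8: 30.0 GB, int4: 15.0 GB
```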

Best Ways to Maximize ChatLLaMA's Potential

To get the most out of ChatLLaMA:

Integrate High-Quality Datasets: Share dialogue datasets with the team for community-driven improvements. This could lead to specialized versions, like industry-specific assistants.

Combine with Other Tools: Pair it with speech-to-text and text-to-speech for a voice interface, or wrap it in a local API so other applications can call it.

Monitor Performance: Track metrics like coherence and relevance in conversations. The upcoming RLHF update will likely boost these further.

Join the Community: Discord is buzzing with tips, from optimization tweaks to collaboration opportunities. Developers: Reach out to @devinschumacher for GPU-assisted projects.
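For the performance-monitoring tip, coherence and relevance are best judged by people, but cheap automatic signals can flag regressions when you change models or settings. The sketch below uses two crude proxies chosen here for illustration: word overlap between prompt and reply, and distinct-2 (the share of unique word bigrams, which drops when a model loops). Neither is a metric ChatLLaMA provides, and neither replaces human review.

```python
def lexical_overlap(prompt, reply):
    """Jaccard overlap of lowercase word sets: a crude proxy for relevance."""
    a, b = set(prompt.lower().split()), set(reply.lower().split())
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def distinct_n(text, n=2):
    """Fraction of unique word n-grams: low values flag repetitive, looping output."""
    words = text.lower().split()
    ngrams = [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]
    if not ngrams:
        return 0.0
    return len(set(ngrams)) / len(ngrams)


def log_turn(prompt, reply):
    """Per-turn metrics you could append to a log file or spreadsheet."""
    return {
        "overlap": round(lexical_overlap(prompt, reply), 3),
        "distinct_2": round(distinct_n(reply), 3),
        "reply_words": len(reply.split()),
    }


print(log_turn("what is lora", "lora is an adapter"))
# {'overlap': 0.4, 'distinct_2': 1.0, 'reply_words': 4}
print(log_turn("tell me a story", "the cat the cat the cat"))
# {'overlap': 0.0, 'distinct_2': 0.4, 'reply_words': 6}
```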

In summary, ChatLLaMA democratizes advanced AI by making LoRA-powered, local conversational models accessible and customizable. Whether you're revolutionizing personal AI or contributing to open-source innovation, this tool offers immense practical value in the evolving world of large language models. Dive in, and stay funky as you explore its capabilities.

Best Alternative Tools to "ChatLLaMA"

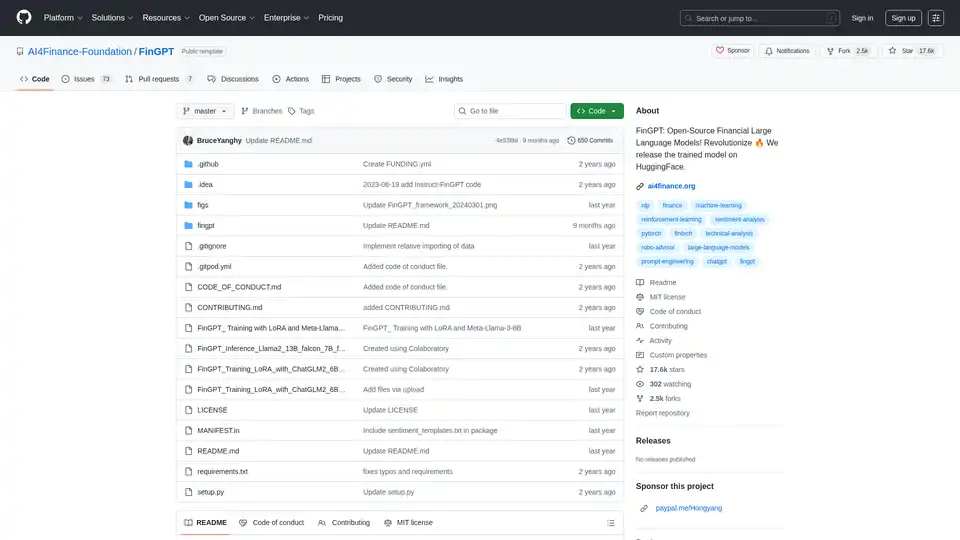

FinGPT: An open-source financial large language model for democratizing financial data, sentiment analysis, and forecasting. Fine-tune swiftly for timely market insights.

Scade.pro is a comprehensive no-code AI platform that enables users to build AI features, automate workflows, and integrate 1500+ AI models without technical skills.

Discover Pykaso AI, the ultimate platform for creating ultra-realistic AI images, videos, and custom characters. Train LoRA models, enhance skins, and generate viral content effortlessly for social media success.

A highly reliable cloud-based ComfyUI service: edit and run ComfyUI workflows online, publish them as AI apps to earn revenue, and browse hundreds of new AI apps daily.

NMKD Stable Diffusion GUI is a free, open-source tool for generating AI images locally on your GPU using Stable Diffusion. It supports text-to-image, image editing, upscaling, and LoRA models with no censorship or data collection.

xTuring is an open-source library that empowers users to customize and fine-tune Large Language Models (LLMs) efficiently, focusing on simplicity, resource optimization, and flexibility for AI personalization.

Browse and compare the latest Flux LoRA models in the Flux LoRA Model Library. Find the perfect Flux LoRA to enhance your Flux model generation experience for AI image creation.

Stable Diffusion API empowers you to generate and finetune AI images effortlessly. Access text-to-image, image-to-image, and inpainting APIs without needing expensive GPUs.

Replicate lets you run and fine-tune open-source machine learning models with a cloud API. Build and scale AI products with ease.

Predibase is a developer platform for fine-tuning and serving open-source LLMs. Achieve unmatched accuracy and speed with end-to-end training and serving infrastructure, featuring reinforcement fine-tuning.

Transform text into stunning visuals with Flux AI Image Generator. Explore various models like Flux Pro and Flux Schnell to create high-quality AI art online for free.

Image Pipeline empowers you to create production-quality AI visuals with maximum control using the latest tech like Stable Diffusion, ControlNets, and custom models. Focus on building AI products without GPU maintenance.

Create personalized visual stories with TheFluxTrain. Train AI on your own images to generate consistent characters and turn them into compelling visual narratives, AI influencers, and product mockups.

Fireworks AI delivers blazing-fast inference for generative AI using state-of-the-art, open-source models. Fine-tune and deploy your own models at no extra cost. Scale AI workloads globally.