Fireworks AI

Overview of Fireworks AI

Fireworks AI: The Fastest Inference Engine for Generative AI

What is Fireworks AI? Fireworks AI is a platform for running generative AI models with fast inference. It lets users build, tune, and scale AI applications using open-source models optimized for different use cases.

How does Fireworks AI work? Fireworks AI achieves high performance through its inference engine, which is optimized for low latency, high throughput, and concurrency. The platform supports popular models like DeepSeek, Llama, Qwen, and Mistral, enabling developers to experiment and iterate quickly using Fireworks SDKs.
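To make programmatic access concrete, here is a minimal sketch that builds a chat-completion request using only the Python standard library. It does not use the official Fireworks SDKs. It assumes Fireworks serves an OpenAI-compatible endpoint at `https://api.fireworks.ai/inference/v1/chat/completions`, and the model ID `accounts/fireworks/models/llama-v3p1-8b-instruct` is only an illustrative example. Check both against the current Fireworks documentation and model catalog before relying on them.

```python
import json
import urllib.request

# Assumption: Fireworks exposes an OpenAI-compatible chat-completions endpoint at this URL.
FIREWORKS_CHAT_URL = "https://api.fireworks.ai/inference/v1/chat/completions"

# Illustrative model identifier (assumption); consult the Fireworks model catalog for current names.
EXAMPLE_MODEL = "accounts/fireworks/models/llama-v3p1-8b-instruct"


def build_chat_request(api_key: str, prompt: str, model: str = EXAMPLE_MODEL,
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) a POST request in the OpenAI chat-completions format."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        FIREWORKS_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat_request(req: urllib.request.Request) -> str:
    """Send the request and return the text of the first returned choice."""
    with urllib.request.urlopen(req, timeout=30) as resp:
        body = json.load(resp)
    # Assumes the OpenAI-style response shape: choices[0].message.content
    return body["choices"][0]["message"]["content"]
```

Usage, assuming the API key is stored in a hypothetical `FIREWORKS_API_KEY` environment variable: `send_chat_request(build_chat_request(os.environ["FIREWORKS_API_KEY"], "Write a haiku about GPUs."))`. Keeping request construction separate from sending makes the payload easy to inspect or log without making a network call.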

Key Features and Benefits

- Blazing-Fast Inference: Delivers real-time performance with minimal latency, suitable for mission-critical applications.

- Advanced Tuning: Provides tools for maximizing model quality through techniques like reinforcement learning and quantization-aware tuning.

- Seamless Scaling: Automatically provisions the latest GPUs across multiple clouds and regions, ensuring high availability and consistent performance.

- Open-Source Models: Supports a wide range of open-source models, offering flexibility and customization options.

- Enterprise-Ready: Includes features for secure team collaboration, monitoring, and compliance (SOC2 Type II, GDPR, HIPAA).

Use Cases

Fireworks AI is suitable for a variety of applications, including:

- Voice Agents: Powering real-time voice interactions with low latency.

- Code Assistants: Enhancing code generation and completion with fast inference speeds.

- AI Dev Tools: Enabling fine-tuning, AI-powered code search, and deep code context for improved development workflows.

Why is Fireworks AI important?

Fireworks AI addresses the need for speed and scalability in generative AI applications. By optimizing inference and providing seamless scaling, it enables businesses to deploy AI features at scale without sacrificing performance or cost-effectiveness.

Who is Fireworks AI for?

Fireworks AI is ideal for:

- Enterprises: Looking to deploy AI solutions with enterprise-grade security and compliance.

- Developers: Seeking a fast and flexible platform for experimenting with open-source models.

- AI Researchers: Needing robust infrastructure for training and deploying AI models.

Customer Testimonials

Several companies have found success with Fireworks AI:

- Cursor: Sualeh Asif, CPO, praised Fireworks for its performance and minimal degradation in quantized model quality.

- Quora: Spencer Chan, Product Lead, highlighted Fireworks as the best platform for serving open-source LLMs and scaling LoRA adapters.

- Sourcegraph: Beyang Liu, CTO, noted Fireworks' fast and reliable model inference for building AI dev tools like Cody.

- Notion: Sarah Sachs, AI Lead, reported a significant latency reduction by partnering with Fireworks to fine-tune models.

Pricing

Fireworks AI offers flexible pricing options to suit different needs. Details can be found on their Pricing page.

Getting Started

To start building with Fireworks AI, visit their website and explore the available models and documentation. You can also contact their sales team for enterprise solutions.

How can you get the most out of Fireworks AI? Start by identifying your specific use case and selecting an appropriate open-source model. Use the Fireworks SDKs to fine-tune the model and optimize it for your application, then rely on the platform's scaling capabilities to deploy your AI features globally without managing infrastructure.

By providing a robust and scalable inference engine, Fireworks AI helps developers and enterprises put generative AI into production with high speed and efficiency.

Best Alternative Tools to "Fireworks AI"

SiliconFlow is a fast AI platform for developers that lets you deploy, fine-tune, and run 200+ optimized LLMs and multimodal models through simple APIs.

Xander is an open-source desktop platform that enables no-code AI model training. Describe tasks in natural language for automated pipelines in text classification, image analysis, and LLM fine-tuning, ensuring privacy and performance on your local machine.

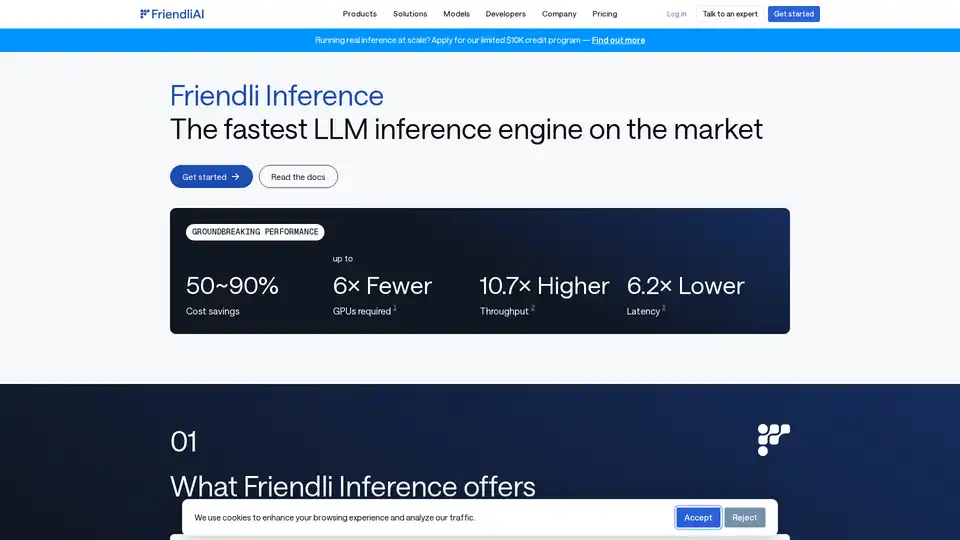

Friendli Inference is an LLM inference engine optimized for speed and cost-effectiveness. Its vendor claims it cuts GPU costs by 50-90% while delivering high throughput and low latency.

AI Runner is an offline AI inference engine for art, real-time voice conversations, LLM-powered chatbots, and automated workflows. Run image generation, voice chat, and more locally!

Gnothi is an AI-powered journal that provides personalized insights and resources for self-reflection, behavior tracking, and personal growth through intelligent analysis of your entries.

Essential is an open-source macOS app that acts as an AI co-pilot for your screen, helping developers fix errors instantly and remember key workflows with summaries and screenshots. No data leaves your device.

vLLM is a high-throughput and memory-efficient inference and serving engine for LLMs, featuring PagedAttention and continuous batching for optimized performance.

OpenUI is an open-source tool that lets you describe UI components in natural language and renders them live using LLMs. It converts descriptions to HTML, React, or Svelte for fast prototyping.

Spice.ai is an open-source data and AI inference engine for building AI apps with SQL query federation, acceleration, search, and retrieval grounded in enterprise data.

Cortex is an open-source blockchain platform supporting AI models on a decentralized network, enabling AI integration in smart contracts and DApps.

fima AI is an AI-powered collaboration suite that aims to build efficient work systems while supporting human well-being. It features Data-Ground for data analytics and an open-source AI agent framework.

Wavify is a platform for on-device speech AI, enabling integration of speech recognition, wake word detection, and voice commands with strong performance and privacy.

Inweave is an AI-powered platform designed for startups and scaleups to automate workflows efficiently. Deploy customizable AI assistants using top models like GPT and Llama via chat or API for seamless productivity gains.

dstack is an open-source AI container orchestration engine that provides ML teams with a unified control plane for GPU provisioning and orchestration across cloud, Kubernetes, and on-prem environments. It streamlines development, training, and inference.