Float16.Cloud: Serverless GPUs for Accelerated AI Development

What is Float16.Cloud?

Float16.Cloud is a serverless GPU platform designed to accelerate AI development. It allows users to instantly run, train, and scale AI models without the complexities of infrastructure setup. This platform offers ready-to-run environments, full control over code, and a seamless developer experience.

How does Float16.Cloud work?

Float16.Cloud simplifies AI development by providing serverless GPUs that eliminate the need for manual server configuration. Key features include:

- Fast GPU Spin-Up: Get compute resources in under a second with preloaded containers ready for AI and Python development.

- Zero Setup: Avoid the overhead of Dockerfiles, launch scripts, and DevOps. Float16 automatically provisions and configures high-performance GPU infrastructure.

- Spot Mode with Pay-Per-Use: Train, fine-tune, and batch process on affordable spot GPUs with per-second billing.

- Native Python Execution on H100: Run Python scripts directly on NVIDIA H100 GPUs without building containers or configuring runtimes.

Key Features and Benefits

Serverless GPU Infrastructure:

- Run and deploy AI workloads instantly without managing servers.

- Containerized infrastructure for efficient execution.

H100 GPUs:

- Leverage NVIDIA H100 GPUs for high-performance computing.

- Ideal for demanding AI tasks.

Zero Setup:

- No need for Dockerfiles, launch scripts, or DevOps overhead.

- Focus on coding, not infrastructure management.

Pay-Per-Use Pricing:

- Per-second billing on H100 GPUs.

- Pay only for what you use, with no idle costs.
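To see what per-second billing means in practice, the sketch below compares it with whole-hour billing for a few job lengths. The hourly rate is a hypothetical placeholder, not Float16's published H100 price, and rounding each partial second up to a full second is an assumption about billing granularity.

```python
import math


def per_second_cost(runtime_s: float, hourly_rate: float) -> float:
    """Cost when each started second is billed (partial seconds rounded up, an assumption)."""
    return math.ceil(runtime_s) * hourly_rate / 3600


def per_hour_cost(runtime_s: float, hourly_rate: float) -> float:
    """Cost when each started hour is billed in full, shown only for comparison."""
    return math.ceil(runtime_s / 3600) * hourly_rate


HOURLY_RATE = 3.00  # hypothetical USD per GPU-hour, not a published Float16 price

if __name__ == "__main__":
    for runtime_s in (45, 600, 5400):
        print(f"{runtime_s:>5} s: per-second ${per_second_cost(runtime_s, HOURLY_RATE):.4f}, "
              f"per-hour ${per_hour_cost(runtime_s, HOURLY_RATE):.2f}")
```

For a 45-second job at the placeholder rate, per-second billing charges $0.0375 rather than a full $3.00 hour.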

Native Python Execution:

- Run .py scripts directly on NVIDIA H100 without building containers or configuring runtimes.

- Containerized and GPU-isolated execution.
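Because scripts run as plain `.py` files, a short self-check like the one below can confirm which device a job actually landed on. This is a minimal sketch: it assumes PyTorch may or may not be present in the runtime, falls back to `nvidia-smi` (shipped with the NVIDIA driver), and finally reports CPU. The file name `gpu_check.py` is illustrative, and how the script is submitted to Float16 is not shown.

```python
"""gpu_check.py: hypothetical self-check that reports the device a job is running on."""
import platform
import shutil
import subprocess


def describe_gpu() -> str:
    """Return a short description of the visible GPU(s), or a CPU fallback message."""
    try:
        import torch  # assumed, not guaranteed, to be preinstalled in the runtime

        if torch.cuda.is_available():
            return (f"torch sees {torch.cuda.device_count()} GPU(s): "
                    f"{torch.cuda.get_device_name(0)}")
    except ImportError:
        pass  # no PyTorch; try the driver utility instead

    if shutil.which("nvidia-smi"):
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
            capture_output=True, text=True, check=False,
        )
        if result.returncode == 0 and result.stdout.strip():
            return "nvidia-smi reports: " + result.stdout.strip()

    return f"no GPU visible; running on CPU ({platform.machine()})"


if __name__ == "__main__":
    print(describe_gpu())
```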

Full Execution Trace & Logging:

- Access real-time logs and view job history.

- Inspect request-level metrics, task counts, and execution duration.
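As an illustration of the metrics listed above, the sketch below aggregates a job history into status counts, task totals, and execution durations. The `JobRecord` fields are hypothetical; Float16's actual log and history format is not described here, so treat this only as a way to post-process records you have already collected.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import mean


@dataclass
class JobRecord:
    """Hypothetical shape of one job-history entry; field names are illustrative."""
    job_id: str
    status: str        # e.g. "succeeded" or "failed"
    duration_s: float  # wall-clock execution time in seconds
    task_count: int    # tasks or requests handled by the job


def summarize(jobs: list[JobRecord]) -> dict:
    """Aggregate job history into dashboard-style metrics."""
    if not jobs:
        return {"jobs": 0, "by_status": {}, "total_tasks": 0,
                "mean_duration_s": 0.0, "max_duration_s": 0.0}
    durations = [job.duration_s for job in jobs]
    return {
        "jobs": len(jobs),
        "by_status": dict(Counter(job.status for job in jobs)),
        "total_tasks": sum(job.task_count for job in jobs),
        "mean_duration_s": mean(durations),
        "max_duration_s": max(durations),
    }
```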

Web & CLI-Integrated File I/O:

- Upload/download files via CLI or web UI.

- Supports local files and remote S3 buckets.

Example-Powered Onboarding:

- Deploy with confidence using real-world examples.

- Examples range from model inference to batched training loops.

CLI-First, Web-Enabled:

- Manage everything from the command line or monitor jobs from the dashboard.

- Both interfaces are tightly integrated.

Flexible Pricing Modes:

- On-demand for short bursts.

- Spot pricing for long-running jobs like training and fine-tuning.
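One rough way to weigh the two modes for a specific job is to compare the on-demand cost with a spot cost inflated by the work lost to interruptions. All rates, the interruption frequency, and the checkpoint interval below are hypothetical inputs, and the model deliberately ignores interruptions that occur during redone work, so it is a back-of-the-envelope estimate rather than a pricing tool.

```python
def expected_spot_cost(job_hours: float, spot_rate: float, interruptions_per_hour: float,
                       checkpoint_interval_h: float, restart_overhead_h: float) -> float:
    """Rough expected cost of finishing a job on spot GPUs.

    Each interruption is assumed to lose, on average, half a checkpoint interval of
    progress plus a fixed restart overhead. Interruptions during redone work are
    ignored, so the estimate is optimistic when interruptions are frequent.
    """
    expected_interruptions = job_hours * interruptions_per_hour
    lost_hours = expected_interruptions * (checkpoint_interval_h / 2 + restart_overhead_h)
    return (job_hours + lost_hours) * spot_rate


def cheaper_mode(job_hours: float, on_demand_rate: float, spot_rate: float,
                 interruptions_per_hour: float, checkpoint_interval_h: float = 0.5,
                 restart_overhead_h: float = 0.05) -> tuple[str, float]:
    """Return whichever mode has the lower expected cost, together with that cost."""
    on_demand = job_hours * on_demand_rate
    spot = expected_spot_cost(job_hours, spot_rate, interruptions_per_hour,
                              checkpoint_interval_h, restart_overhead_h)
    return ("spot", spot) if spot < on_demand else ("on-demand", on_demand)
```

With hypothetical rates of 3.00 per hour on-demand and 1.50 per hour spot, and 0.2 interruptions per hour, `cheaper_mode(10, 3.0, 1.5, 0.2)` estimates about 15.90 on spot against 30.00 on-demand. Cost is not the only factor: spot capacity can be reclaimed, so short, time-sensitive bursts may still call for on-demand GPUs.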

Serve Open-Source LLMs:

- Provision a high-performance LLM server from a single CLI command.

- Production-ready HTTPS endpoint.

- Run any GGUF-format model, such as Qwen, LLaMA, or Gemma.

- Sub-second latency, no cold starts.
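Since the server is built on llama.cpp, whose built-in server exposes an OpenAI-compatible `/v1/chat/completions` route, a standard-library client could look like the sketch below. It assumes the Float16 HTTPS endpoint forwards that route unchanged and accepts a Bearer token; the URL is a placeholder and the authentication scheme is an assumption, not documented Float16 behavior.

```python
import json
import urllib.request
from typing import Optional

# Placeholder URL: substitute the HTTPS endpoint your deployment actually returns.
ENDPOINT = "https://your-endpoint.example/v1/chat/completions"


def build_chat_request(endpoint: str, prompt: str, api_key: Optional[str] = None,
                       max_tokens: int = 128) -> urllib.request.Request:
    """Build a POST request in the OpenAI-style chat format served by llama.cpp."""
    body = {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Bearer-token auth is an assumption about how the endpoint is protected.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        endpoint,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def chat(endpoint: str, prompt: str, api_key: Optional[str] = None) -> str:
    """Send the request and return the text of the first choice."""
    request = build_chat_request(endpoint, prompt, api_key)
    with urllib.request.urlopen(request, timeout=60) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

A call such as `chat(ENDPOINT, "Summarize what GGUF is in one sentence.", api_key="...")` would then return the model's reply as a string, provided the assumptions above hold.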

Finetune and Train:

- Execute training pipelines on ephemeral GPU instances using your existing Python codebase.

- Spot-optimized scheduling.

- Zero setup environment with automatic CUDA drivers and Python environment setup.
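Because spot instances can be reclaimed mid-run, training code on spot GPUs is usually written to checkpoint periodically and resume from the last checkpoint. The sketch below shows that pattern with a placeholder training step and a JSON checkpoint written atomically through a temporary file and `os.replace`. The checkpoint path is hypothetical, and whether Float16 preserves files across a spot interruption, and where, is an assumption to verify.

```python
import json
import os
import tempfile

# Hypothetical path; it must live on storage that survives the instance being reclaimed.
CHECKPOINT = "checkpoint.json"


def load_checkpoint(path: str) -> dict:
    """Return saved state, or a fresh state if no checkpoint exists yet."""
    if os.path.exists(path):
        with open(path, encoding="utf-8") as f:
            return json.load(f)
    return {"step": 0, "loss": None}


def save_checkpoint(path: str, state: dict) -> None:
    """Write to a temp file, then rename, so an interruption mid-write cannot corrupt it."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w", encoding="utf-8") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)  # atomic rename on POSIX filesystems


def train(path: str, total_steps: int, checkpoint_every: int) -> dict:
    """Resume from the last checkpoint and run the remaining steps."""
    state = load_checkpoint(path)
    for step in range(state["step"], total_steps):
        state["loss"] = 1.0 / (step + 1)  # placeholder for a real training step
        state["step"] = step + 1
        if state["step"] % checkpoint_every == 0 or state["step"] == total_steps:
            save_checkpoint(path, state)
    return state
```

Calling `train(CHECKPOINT, total_steps=1000, checkpoint_every=100)` again after an interruption picks up from the last saved step instead of step 0; a shorter checkpoint interval reduces lost work at the cost of more frequent writes.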

Use Cases

- Serving Open-Source LLMs: Quickly deploy open-source LLMs via llama.cpp, with a production-ready HTTPS endpoint.

- Fine-tuning and Training: Execute training pipelines on spot GPUs using existing Python code.

How to use Float16.Cloud?

- Sign Up: Create an account on Float16.Cloud.

- Access the Dashboard: Navigate to the web dashboard or use the CLI.

- Upload Code: Upload your Python scripts or models.

- Select Pricing Mode: Choose between on-demand and spot GPUs.

- Run Workload: Execute your AI tasks and monitor progress via logs and metrics.

Why choose Float16.Cloud?

Float16.Cloud is ideal for users who want to:

- Accelerate AI development without managing infrastructure.

- Reduce costs with pay-per-use pricing and spot instances.

- Simplify deployment with pre-configured environments and native Python execution.

- Scale AI workloads efficiently.

Who is Float16.Cloud for?

Float16.Cloud is designed for:

- AI/ML Engineers: Accelerate model development and deployment.

- Data Scientists: Focus on data analysis and model building without infrastructure concerns.

- Researchers: Run experiments and train models at scale.

- Startups: Quickly deploy AI applications without significant upfront investment.

Float16.Cloud simplifies the process of deploying and scaling AI models by providing serverless GPUs, a user-friendly interface, and cost-effective pricing. Its features cater to the needs of AI engineers, data scientists, and researchers, making it an excellent choice for those looking to accelerate their AI development workflows.

Best Alternative Tools to "Float16.Cloud"

Cerebrium is a serverless AI infrastructure platform that simplifies the deployment of real-time AI applications with low latency, zero DevOps, and per-second billing. It deploys LLMs and vision models globally.

Novita AI provides 200+ Model APIs, custom deployment, GPU Instances, and Serverless GPUs. Scale AI, optimize performance, and innovate with ease and efficiency.

Runpod is an all-in-one AI cloud platform that simplifies building and deploying AI models. It offers on-demand GPU resources, serverless scaling with autoscaling compute, and enterprise-grade uptime for training, fine-tuning, and deploying AI models.

Explore NVIDIA NIM APIs for optimized inference and deployment of leading AI models. Build enterprise generative AI applications with serverless APIs or self-host on your GPU infrastructure.

Inferless offers blazing fast serverless GPU inference for deploying ML models. It provides scalable, effortless custom machine learning model deployment with features like automatic scaling, dynamic batching, and enterprise security.

Deployo simplifies AI model deployment, turning models into production-ready applications in minutes. It provides cloud-agnostic, secure, and scalable AI infrastructure for machine learning workflows.

GPUX is a serverless GPU inference platform that enables 1-second cold starts for AI models such as Stable Diffusion XL, ESRGAN, and AlpacaLLM, with optimized performance and P2P capabilities.

Baseten is a platform for deploying and scaling AI models in production. It offers performant model runtimes, cross-cloud high availability, and seamless developer workflows, powered by the Baseten Inference Stack.

Simplify AI deployment with Synexa. Run powerful AI models instantly with just one line of code. Fast, stable, and developer-friendly serverless AI API platform.

ZETIC.ai enables building zero-cost on-device AI apps by deploying models directly on devices. Reduce AI service costs and secure data with serverless AI using ZETIC.MLange.

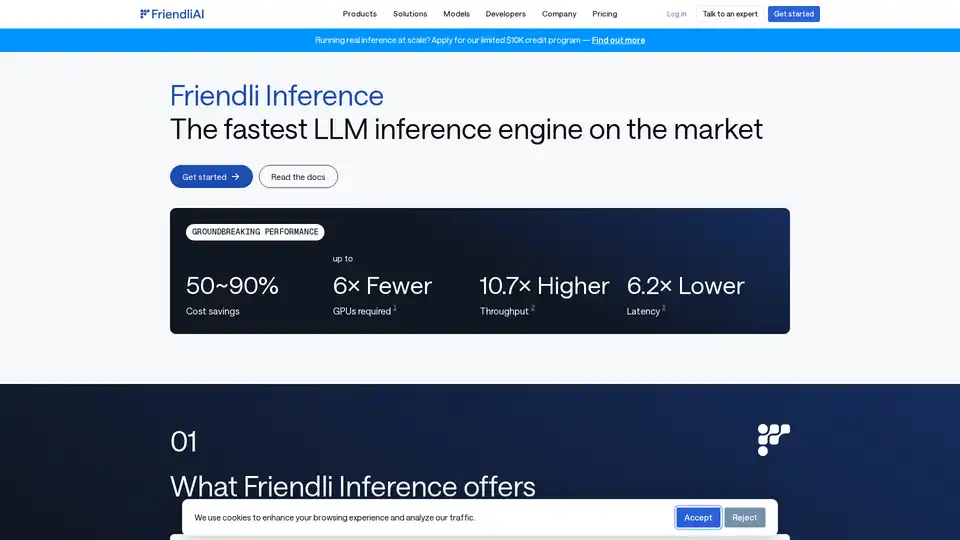

Friendli Inference is an LLM inference engine optimized for speed and cost-effectiveness; Friendli claims it cuts GPU costs by 50-90% while delivering high throughput and low latency.

Scade.pro is a comprehensive no-code AI platform that enables users to build AI features, automate workflows, and integrate 1500+ AI models without technical skills.

Modal: Serverless platform for AI and data teams. Run CPU, GPU, and data-intensive compute at scale with your own code.