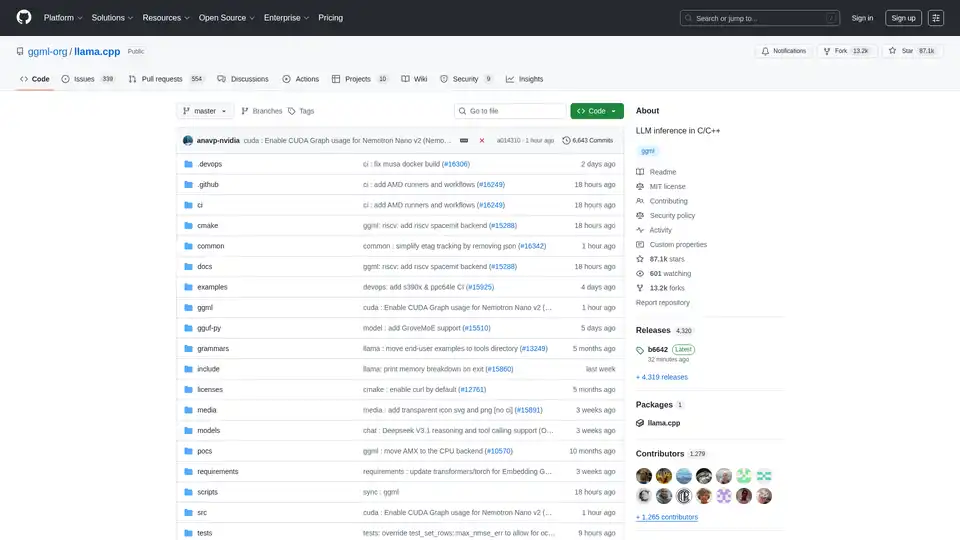

llama.cpp

Overview of llama.cpp

llama.cpp: Your Go-To Library for LLM Inference in C/C++

llama.cpp is an open-source library for efficient Large Language Model (LLM) inference in C/C++. It is optimized for a wide range of hardware, from local machines to cloud deployments, and stands out for its minimal setup and state-of-the-art performance.

What is llama.cpp?

llama.cpp is a project focused on performing LLM inference in C/C++. It is engineered to deliver strong performance across diverse hardware configurations with minimal dependencies.

Key Features and Benefits

- Plain C/C++ Implementation: Eliminates external dependencies, simplifying deployment.

- Apple Silicon Optimization: Leverages ARM NEON, Accelerate, and Metal frameworks for peak performance on Apple devices.

- x86 Architecture Support: Includes AVX, AVX2, AVX512, and AMX support for optimized performance on x86 CPUs.

- Quantization: Supports 1.5-bit to 8-bit integer quantization, reducing memory usage and accelerating inference.

- GPU Acceleration: Custom CUDA kernels provide efficient LLM execution on NVIDIA GPUs. Also supports AMD GPUs via HIP and Moore Threads GPUs via MUSA.

- Hybrid CPU+GPU Inference: Facilitates the use of models larger than available VRAM by distributing the workload between CPU and GPU.

- Multiple Backends: Supports Metal, BLAS, BLIS, SYCL, MUSA, CUDA, HIP, Vulkan, CANN, OpenCL, IBM zDNN, and WebGPU (in progress).
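To make the quantization and hybrid CPU+GPU points above concrete, here is a rough back-of-the-envelope sketch. It assumes a hypothetical 7-billion-parameter model with 32 equally sized layers and 6 GiB of VRAM, and it ignores the KV cache and runtime buffers, so real numbers will differ. The helper names are illustrative and are not part of llama.cpp.

```python
def model_size_gib(n_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in GiB, ignoring KV cache and runtime buffers."""
    return n_params * bits_per_weight / 8 / 2**30


def layers_that_fit(total_layers: int, model_gib: float, vram_gib: float) -> int:
    """Rough count of equally sized layers that fit in VRAM (hypothetical helper)."""
    per_layer = model_gib / total_layers
    return min(total_layers, int(vram_gib // per_layer))


# Hypothetical model: 7e9 parameters, 32 layers, 6 GiB of VRAM available.
for bits in (16, 8, 4):
    size = model_size_gib(7e9, bits)
    on_gpu = layers_that_fit(32, size, vram_gib=6.0)
    print(f"{bits:>2}-bit: {size:5.2f} GiB, about {on_gpu}/32 layers fit on the GPU")
```

In llama.cpp itself, the number of layers placed on the GPU is chosen with the `-ngl` (`--n-gpu-layers`) option; the remaining layers run on the CPU.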

How does llama.cpp work?

llama.cpp works by implementing LLM inference directly in C/C++. This approach reduces overhead and allows fine-grained control over hardware resources. The library is modular, with different backends optimized for different hardware platforms. It uses techniques such as quantization to shrink the memory footprint of models, making it possible to run large models on resource-constrained devices.
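The idea behind quantization can be illustrated with a minimal sketch: a block of floating-point weights is stored as small integer codes plus one shared scale. This is a simplified, symmetric 8-bit scheme written for illustration only. It is not the library's actual kernel or on-disk layout, and the function names are hypothetical.

```python
def quantize_block(values):
    """Map floats to int8-range codes plus one shared scale (symmetric, absmax-based)."""
    amax = max(abs(v) for v in values)
    scale = amax / 127 if amax > 0 else 1.0
    codes = [round(v / scale) for v in values]
    return scale, codes


def dequantize_block(scale, codes):
    """Recover approximate floats from the integer codes."""
    return [scale * c for c in codes]


weights = [0.12, -0.50, 0.33, 0.01, -0.27, 0.48, -0.09, 0.20]
scale, codes = quantize_block(weights)
restored = dequantize_block(scale, codes)
max_err = max(abs(a - b) for a, b in zip(weights, restored))

print("codes:", codes)
print("max abs error:", max_err)
```

Each weight now costs one byte instead of two or four, at the price of a rounding error of at most half the scale. Lower bit widths follow the same principle with fewer integer levels.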

How to use llama.cpp?

Installation:

- Using Package Managers: Install via brew, nix, or winget.

- Docker: Use the provided Docker images.

- Pre-built Binaries: Download binaries from the releases page.

- Build from Source: Clone the repository and follow the build guide.

Obtaining Models:

- Download GGUF models from Hugging Face or other model hosting sites.

- Convert models to GGUF format using the provided Python scripts.

Running Inference:

- Use the llama-cli tool for experimentation.

- Deploy a local HTTP server using llama-server for OpenAI API compatibility.

Example Commands:

## Use a local model file

llama-cli -m my_model.gguf

## Or download and run a model directly from Hugging Face

llama-cli -hf ggml-org/gemma-3-1b-it-GGUF

## Launch OpenAI-compatible API server

llama-server -hf ggml-org/gemma-3-1b-it-GGUF
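Once a server like the one above is running, it can be queried with any OpenAI-style client. The sketch below uses only the Python standard library and assumes the server listens on `http://127.0.0.1:8080` and exposes the `/v1/chat/completions` route; adjust both if your setup differs. The function names and the `max_tokens` value are illustrative choices.

```python
import json
import urllib.request

# Assumption: llama-server is running locally on this address.
BASE_URL = "http://127.0.0.1:8080"


def build_chat_request(prompt: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a POST to the OpenAI-style chat completions route."""
    payload = {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 64,
    }
    return urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send the request and return the first reply; requires a running server."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request("Say hello in one sentence.")
    print(req.get_method(), req.full_url)
```

Calling `chat("Say hello in one sentence.")` sends the request and returns the generated text, provided the server is reachable.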

Who is llama.cpp for?

llama.cpp is ideal for:

- Developers: Implementing LLM-powered applications with C/C++.

- Researchers: Experimenting with LLMs on various hardware platforms.

- Hobbyists: Running LLMs on personal computers and devices.

- Organizations: Deploying LLMs in production environments with minimal overhead.

Practical Applications of llama.cpp

llama.cpp can be used in various scenarios, including:

- Local LLM Inference: Run models on personal computers without relying on cloud services.

- Edge Computing: Deploy LLMs on edge devices for low-latency applications.

- Mobile Applications: Integrate LLMs into mobile apps for on-device processing.

- Custom AI Solutions: Build custom AI solutions tailored to specific hardware and software environments.

Why choose llama.cpp?

llama.cpp combines performance, flexibility, and ease of use, making it a strong choice for LLM inference. Its key advantages include:

- Optimized Performance: Engineered for peak performance on a wide range of hardware.

- Minimal Dependencies: Simplifies deployment and reduces the risk of conflicts.

- Quantization Support: Enables the use of large models on resource-constrained devices.

- Active Community: Benefits from ongoing development and community support.

- Versatile Tooling: Includes tools like llama-cli, llama-server, llama-perplexity, and llama-bench for various use cases.

Supported Backends

llama.cpp supports multiple backends, targeting a wide array of devices:

| Backend | Target Devices |
|---|---|
| Metal | Apple Silicon |
| BLAS | All |
| BLIS | All |
| SYCL | Intel and Nvidia GPU |
| MUSA | Moore Threads GPU |
| CUDA | Nvidia GPU |
| HIP | AMD GPU |
| Vulkan | GPU |
| CANN | Ascend NPU |
| OpenCL | Adreno GPU |
| IBM zDNN | IBM Z & LinuxONE |
| WebGPU | All (In Progress) |
| RPC | All |

How to contribute to llama.cpp

Contributions to llama.cpp are welcome! You can contribute by:

- Opening pull requests with bug fixes or new features.

- Collaborating on existing issues and projects.

- Helping manage issues, PRs, and projects.

- Improving documentation and examples.

What is GGUF?

GGUF is a file format required by llama.cpp for storing models. Models in other data formats can be converted to GGUF using the convert_*.py Python scripts in the repository.
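A quick way to check whether a file is in GGUF format is to inspect its header. The sketch below assumes the header layout used by GGUF version 2 and later: the 4-byte magic `GGUF`, a little-endian 32-bit version, then 64-bit tensor and metadata counts. The function name is hypothetical, and the demo writes a fake header to a temporary file rather than reading a real model.

```python
import os
import struct
import tempfile


def read_gguf_header(path):
    """Return (version, tensor_count, metadata_kv_count) from a GGUF file header."""
    with open(path, "rb") as f:
        header = f.read(24)
    if len(header) < 24 or header[:4] != b"GGUF":
        raise ValueError(f"{path} does not look like a GGUF file")
    # Assumed layout: uint32 version, uint64 tensor count, uint64 metadata count.
    version, n_tensors, n_kv = struct.unpack("<IQQ", header[4:24])
    return version, n_tensors, n_kv


# Demo with a synthetic header (not a real model file).
with tempfile.NamedTemporaryFile(delete=False, suffix=".gguf") as tmp:
    tmp.write(b"GGUF" + struct.pack("<IQQ", 3, 2, 5))
    demo_path = tmp.name

print(read_gguf_header(demo_path))
os.remove(demo_path)
```

For a real model, pass its path directly, for example `read_gguf_header("my_model.gguf")`.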

Conclusion

llama.cpp is a versatile and powerful library that makes LLM inference accessible to a broad audience. Whether you're a developer, researcher, or hobbyist, llama.cpp provides the tools and flexibility you need to run LLMs on your hardware of choice. With its focus on performance, ease of use, and community support, llama.cpp is poised to remain a key player in the rapidly evolving landscape of AI inference.

For more information, visit the llama.cpp GitHub repository.

Best Alternative Tools to "llama.cpp"

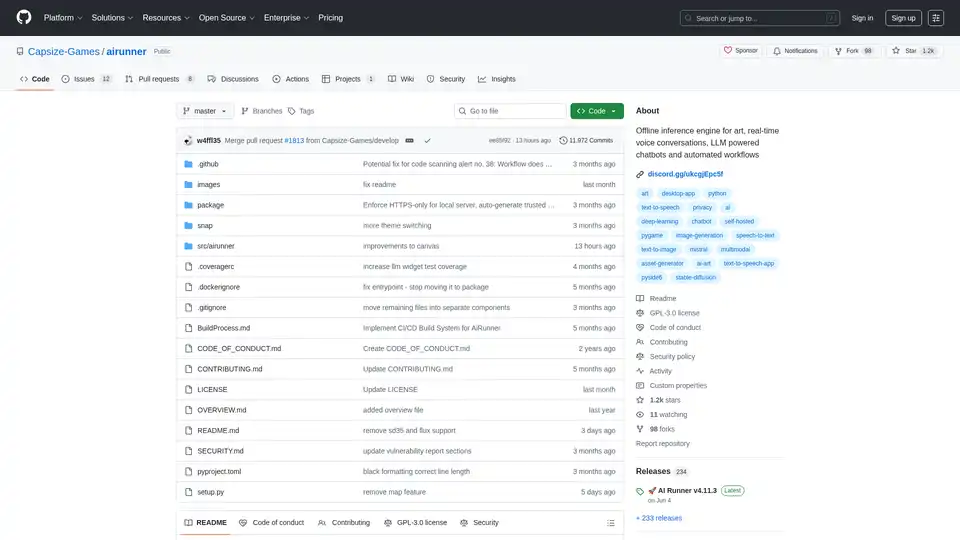

AI Runner is an offline AI inference engine for art, real-time voice conversations, LLM-powered chatbots, and automated workflows. Run image generation, voice chat, and more locally!

Lightning-fast AI platform for developers. Deploy, fine-tune, and run 200+ optimized LLMs and multimodal models with simple APIs - SiliconFlow.

GPT4All enables private, local execution of large language models (LLMs) on everyday desktops without API calls or GPUs. Accessible and efficient LLM usage with extended functionality.

PremAI is an applied AI research lab providing secure, personalized AI models, encrypted inference with TrustML™, and open-source tools like LocalAI for running LLMs locally.

Langtrace is an open-source observability and evaluations platform designed to improve the performance and security of AI agents. Track vital metrics, evaluate performance, and ensure enterprise-grade security for your LLM applications.

LM-Kit provides enterprise-grade toolkits for local AI agent integration, combining speed, privacy, and reliability to power next-generation applications. Leverage local LLMs for faster, cost-efficient, and secure AI solutions.

Falcon LLM is an open-source generative large language model family from TII, featuring models like Falcon 3, Falcon-H1, and Falcon Arabic for multilingual, multimodal AI applications that run efficiently on everyday devices.

Cloudflare Workers AI allows you to run serverless AI inference tasks on pre-trained machine learning models across Cloudflare's global network, offering a variety of models and seamless integration with other Cloudflare services.

Xander is an open-source desktop platform that enables no-code AI model training. Describe tasks in natural language for automated pipelines in text classification, image analysis, and LLM fine-tuning, ensuring privacy and performance on your local machine.

Firecrawl is the leading web crawling, scraping, and search API designed for AI applications. It turns websites into clean, structured, LLM-ready data at scale, powering AI agents with reliable web extraction without proxies or headaches.

Instantly run any Llama model from HuggingFace without setting up any servers. Over 11,900+ models available. Starting at $10/month for unlimited access.

xTuring is an open-source library that empowers users to customize and fine-tune Large Language Models (LLMs) efficiently, focusing on simplicity, resource optimization, and flexibility for AI personalization.

Explore Qwen3 Coder, Alibaba Cloud's advanced AI code generation model. Learn about its features, performance benchmarks, and how to use this powerful, open-source tool for development.

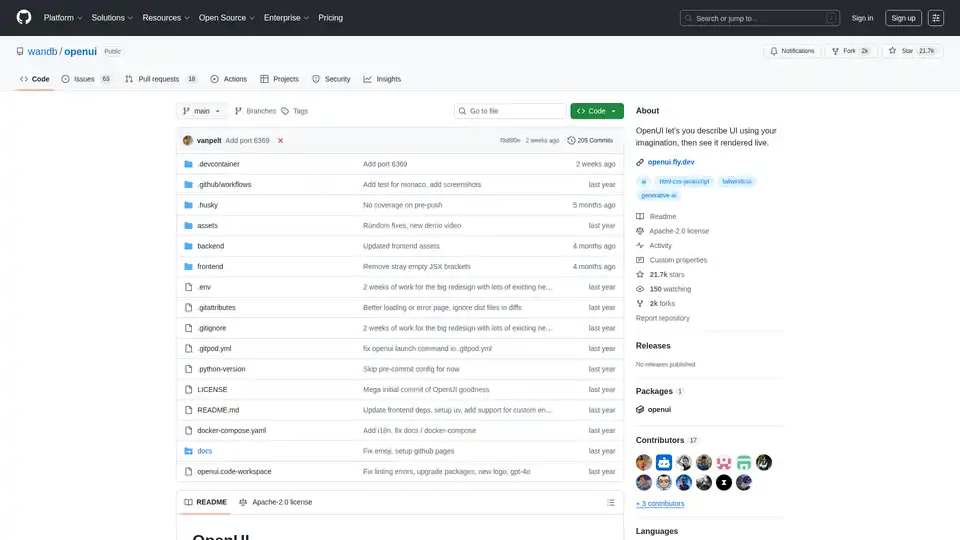

OpenUI is an open-source tool that lets you describe UI components in natural language and renders them live using LLMs. Convert descriptions to HTML, React, or Svelte for fast prototyping.