LM Studio

Overview of LM Studio

What is LM Studio? Unlocking Local Large Language Models

LM Studio is a desktop application designed to make cutting-edge artificial intelligence, specifically large language models (LLMs), accessible to everyone. It serves as a comprehensive platform that lets users explore, download, and run a wide array of open-source LLMs directly on their personal computers; once a model has been downloaded, it runs entirely offline. The tool makes advanced AI technology more accessible and manageable regardless of the user's programming background.

At its core, LM Studio aims to simplify the often complex process of interacting with powerful AI models. By bringing LLMs to your local machine, it lets you harness models such as Llama 2, MPT, Gemma, Falcon, Mistral, and StarCoder from Hugging Face within a secure and private environment. Whether you're an AI enthusiast, a developer, or simply curious about the potential of generative AI, LM Studio provides an intuitive gateway to the world of local LLMs.

Key Features of LM Studio: Empowering Your Local AI Journey

LM Studio is packed with features designed to offer a seamless and powerful local AI experience. Here's a closer look at what makes this platform stand out:

Offline LLM Execution for Enhanced Privacy and Speed

One of the most compelling advantages of LM Studio is its ability to run large language models entirely offline. This means all your interactions with the AI happen on your local machine, without needing an internet connection to send data to external servers. This feature is paramount for:

- Unparalleled Data Privacy and Security: Sensitive information never leaves your device, making it ideal for confidential projects, personal use, or environments with strict data governance requirements.

- Reduced Latency: With no round-trip to a remote server, there is no network delay before responses start. Overall generation speed still depends on your hardware and the size of the model, but for interactive use the absence of network delays can make the experience noticeably smoother.

- Reliable Accessibility: Your AI models are always available, even in areas with poor or no internet connectivity, ensuring uninterrupted workflow.

Intuitive In-App Chat UI: Interact with AI Instantly

LM Studio provides a user-friendly chat interface built directly into the application. This intuitive UI allows you to engage with your chosen LLMs in a conversational manner, similar to popular online AI chatbots. This feature is perfect for:

- Direct Interaction: Easily ask questions, generate text, summarize documents, or brainstorm ideas with the AI model running locally.

- Experimentation: Quickly test different prompts and observe model behavior without complex setup or coding.

- User Accessibility: Even individuals without any programming skills can comfortably interact with and utilize powerful AI models.

OpenAI Compatible Local Server: Bridging Local AI with Existing Workflows

For developers and those who wish to integrate local LLMs into their own applications, LM Studio offers an OpenAI compatible local server. This crucial feature means that your locally run LLMs can be accessed via an API endpoint that mimics the structure and behavior of the OpenAI API. This opens up a world of possibilities:

- Seamless Integration: Existing applications or scripts designed to work with the OpenAI API can be easily reconfigured to communicate with your local LM Studio server, saving significant development time.

- Flexible Development Environment: Test and develop AI-powered features offline, reducing reliance on cloud services and associated costs during the development phase.

- Customizable Deployments: Tailor your AI solutions with specific models and configurations, all while maintaining local control.
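To illustrate what OpenAI compatibility means in practice, here is a minimal Python sketch that builds a standard chat-completions request and sends it to the local server using only the standard library. It assumes the server is running at LM Studio's default address, `http://localhost:1234/v1` (adjust it if you changed the port in the app), and `"local-model"` is a hypothetical placeholder for the identifier of whichever model you have loaded.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default address.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(model: str, prompt: str, temperature: float = 0.7) -> dict:
    """Build an OpenAI-style chat-completions payload."""
    return {
        "model": model,  # hypothetical identifier; use the model you loaded
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
        "temperature": temperature,
    }


def extract_reply(response: dict) -> str:
    """Pull the assistant's text out of an OpenAI-style response body."""
    return response["choices"][0]["message"]["content"]


def send_chat_request(payload: dict, base_url: str = BASE_URL, timeout: float = 120.0) -> str:
    """POST the payload to /chat/completions and return the reply text."""
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return extract_reply(json.loads(resp.read().decode("utf-8")))


# Usage (requires the local server to be running with a model loaded):
# payload = build_chat_request("local-model", "Summarize what a context window is.")
# print(send_chat_request(payload))
```

Because requests and responses follow the OpenAI format, scripts built on the official `openai` Python package can usually be redirected to the same server by passing the local address as `base_url` when creating the client; treat this as a general pattern and verify it against the endpoints your application actually uses.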

Seamless Model Download from Hugging Face Repositories

LM Studio simplifies the process of acquiring LLMs by allowing users to download compatible model files directly from Hugging Face repositories within the app. Hugging Face is a leading platform for machine learning models, and LM Studio provides access to a vast and growing library including:

- Popular Architectures: Llama 2, MPT, Gemma, Falcon, Mistral, StarCoder, and many more.

- Versions and Variants: Easily find and download different versions or optimized (for example, quantized) variants of models.

- Simplified Model Management: No need to navigate complex file systems or command-line interfaces; everything is handled through LM Studio's interface.

Discover and Explore New AI Models

Staying updated with the rapidly evolving AI landscape is crucial. LM Studio includes a discovery feature on its home page, showcasing new and noteworthy LLMs. This helps users:

- Stay Informed: Keep abreast of the latest advancements and releases in the open-source LLM community.

- Expand Capabilities: Easily find and experiment with new models that might offer unique capabilities or improved performance for specific tasks.

How to Use LM Studio: Your Guide to Local AI Mastery

Getting started with LM Studio is straightforward, designed to be accessible even for beginners. The process typically involves:

Getting Started: Installation and Setup

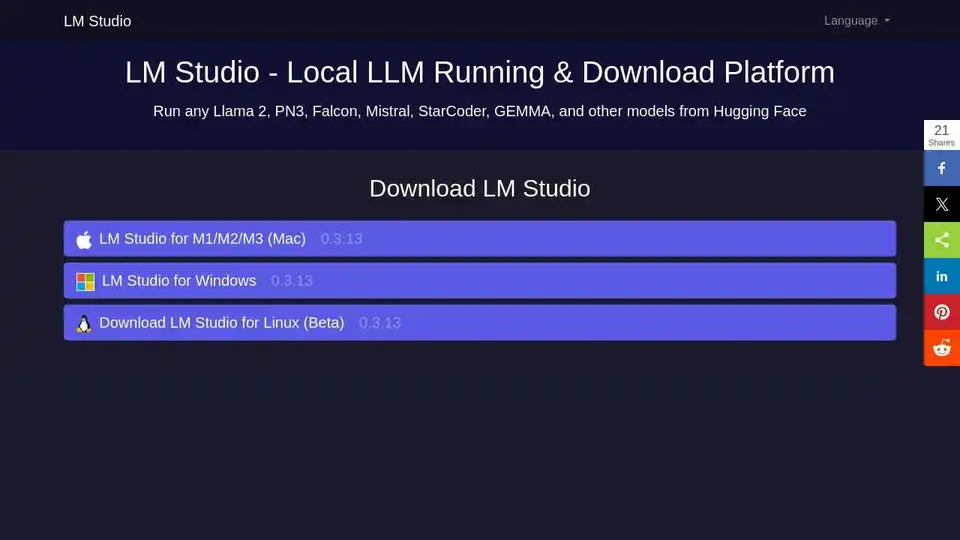

LM Studio is available as a native application for popular operating systems, ensuring broad compatibility:

- Mac: Optimized versions for M1/M2/M3 Apple Silicon chips.

- Windows: Full support for Windows environments.

- Linux (Beta): A beta version is available for Linux users.

Simply download the appropriate installer from the official LM Studio website and follow the on-screen instructions. The installation is designed to be user-friendly, requiring no complex configurations or programming knowledge.

Selecting and Running Your First LLM

Once installed, the intuitive interface guides you through discovering and running models:

- Browse and Discover: Navigate the app's home page to find a model that suits your needs, or explore the Hugging Face repository section.

- Download: With a single click, download the desired compatible model file directly to your computer.

- Load and Chat: Select the downloaded model and interact with it using the in-app chat UI or connect through the local OpenAI-compatible server from your custom applications.
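When connecting from your own application, you need the identifier of the model to put in each request. OpenAI-style servers expose a `GET /models` endpoint that returns a JSON object whose `data` array lists the available models; the sketch below assumes LM Studio's server responds in that shape at its default address, `http://localhost:1234/v1`, and the model IDs used in the example are hypothetical.

```python
import json
import urllib.request


def parse_model_ids(body: dict) -> list[str]:
    """Return the model IDs listed in an OpenAI-style /models response."""
    return [entry["id"] for entry in body.get("data", [])]


def list_local_models(base_url: str = "http://localhost:1234/v1", timeout: float = 10.0) -> list[str]:
    """Ask the local server which models it can serve (assumes the default address)."""
    with urllib.request.urlopen(f"{base_url}/models", timeout=timeout) as resp:
        return parse_model_ids(json.loads(resp.read().decode("utf-8")))


# Usage (requires the local server to be running):
# for model_id in list_local_models():
#     print(model_id)
```

Separating the parsing from the network call keeps the response handling easy to check without a running server.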

Who Can Benefit from LM Studio?

LM Studio caters to a diverse range of users, making advanced AI capabilities accessible to a broader audience.

AI Enthusiasts and Learners

For those new to the world of large language models, LM Studio offers a perfect sandbox. It allows you to experiment with AI without the need for expensive cloud computing services or deep technical expertise. Learn how LLMs work, understand their outputs, and explore their potential in a safe, controlled local environment without per-request fees.

Developers and Researchers

Developers can leverage LM Studio to build and test AI-powered applications efficiently. The OpenAI compatible local server is a game-changer for rapid prototyping, allowing for offline development and iteration. Researchers can use it to explore different model architectures, test hypotheses, and conduct experiments with specific LLMs, ensuring data privacy and full control over the environment.

Privacy-Conscious Individuals and Businesses

In an era where data privacy is paramount, LM Studio provides a robust solution. By running LLMs entirely offline, users can process sensitive information and generate content without concerns about data being transmitted to third-party servers. This makes it an invaluable tool for legal, medical, financial, or other highly regulated industries where data confidentiality is critical.

Content Creators and Writers

Whether you're generating creative stories, drafting marketing copy, summarizing research papers, or seeking inspiration, LLMs can be powerful assistants. With LM Studio, writers can utilize these tools locally, ensuring their creative process remains private and their generated content is secure.

The Practical Value of LM Studio in Today's AI Landscape

Choosing LM Studio offers distinct advantages that address common challenges in the AI ecosystem.

Cost-Effectiveness and Resource Optimization

Running LLMs locally through LM Studio significantly reduces or eliminates the recurring costs associated with cloud-based AI services and API calls. By utilizing your existing hardware, you can make AI highly economical, especially for frequent or intensive use cases. This is particularly beneficial for students, hobbyists, and small businesses looking to harness AI without substantial operational expenses.

Speed and Performance

Local execution removes the network round-trip to remote cloud APIs, so requests are not slowed by network latency. Actual generation speed still depends on your CPU, GPU, and the model you choose, but eliminating network delays can make responses feel quicker in real-time applications and interactive workflows.

Customization and Control

LM Studio gives users a high degree of control over their AI environment. You can experiment with different model quantization levels, adjust generation parameters such as temperature, and tune configurations to balance speed, memory use, and output quality. This level of customization is often difficult or impossible to achieve with black-box cloud API solutions, allowing for truly tailored AI experiences.
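To see why the quantization level matters, a common rule of thumb estimates the memory needed for a model's weights alone as parameters × bits per weight ÷ 8 bytes. The Python sketch below applies that rule; it ignores the context (KV) cache, runtime overhead, and any extra scaling data that quantized formats store, so treat its results as rough lower bounds rather than figures reported by LM Studio.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: float) -> float:
    """Rough lower bound on memory for model weights, in decimal gigabytes.

    bytes = parameters * bits_per_weight / 8; excludes KV cache and overhead.
    """
    if params_billions <= 0 or bits_per_weight <= 0:
        raise ValueError("parameter count and bits per weight must be positive")
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Compare a 7-billion-parameter model at several precisions.
for bits in (16, 8, 4):
    print(f"7B at {bits}-bit: about {weight_memory_gb(7, bits):.1f} GB")
```

For a 7B model this gives 14.0 GB at 16-bit, 7.0 GB at 8-bit, and 3.5 GB at 4-bit, which is why lower-bit quantizations are what make larger models fit on typical consumer hardware.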

Frequently Asked Questions about LM Studio

To help you better understand LM Studio, here are answers to some common inquiries:

What is LM Studio?

LM Studio is a user-friendly desktop software application that simplifies the process of exploring, downloading, and running various open-source large language models (LLMs) directly on your computer, providing an intuitive interface for both technical and non-technical users.

Do I need programming skills to use LM Studio?

No, you do not need programming skills. LM Studio is designed with an intuitive interface that makes it accessible to everyone, allowing users to interact with powerful AI models without writing a single line of code.

Can I run multiple AI models simultaneously with LM Studio?

Running several models at once is limited by your available RAM and GPU memory, since each loaded model occupies memory. For whether and how LM Studio supports this, consult the official LM Studio documentation or community forums for the most up-to-date information.

Is LM Studio free to use?

Licensing and pricing terms can change, so check the official LM Studio website for current information on pricing, licensing, and available editions.

How does LM Studio ensure my computer can handle an AI model?

Running LLMs locally requires adequate system resources (RAM, CPU, and ideally a capable GPU), and larger or less heavily quantized models need more memory. For minimum hardware requirements and best practices for running various AI models with good performance, consult LM Studio's official documentation.

Can I use LM Studio for commercial purposes?

Before using LM Studio for commercial projects or applications, review the official licensing agreements and terms of service on the LM Studio website to ensure compliance. Also check the license of each model you download, since open-source models carry their own terms of use.

Best Alternative Tools to "LM Studio"

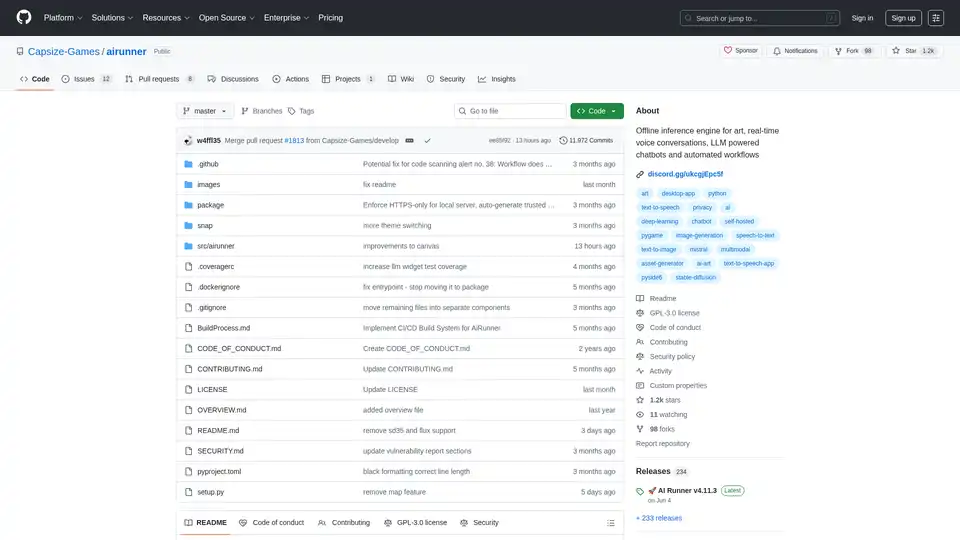

AI Runner is an offline AI inference engine for art, real-time voice conversations, LLM-powered chatbots, and automated workflows. Run image generation, voice chat, and more locally!

Enclave AI is a privacy-focused AI assistant for iOS and macOS that runs completely offline. It offers local LLM processing, secure conversations, voice chat, and document interaction without needing an internet connection.

GrammarBot is an AI-powered grammar and spelling checker for macOS that works offline. Download the app and AI model once, and improve your English forever. Personal license $12.

Jan is an open-source, offline-first AI client. Run Large Language Models (LLMs) locally with privacy and no API bills. Connect to various models and services.

NativeMind is an open-source Chrome extension that runs local LLMs (for example, through Ollama) as a fully offline, private ChatGPT alternative. Features include context-aware chat, agent mode, PDF analysis, writing tools, and translation, all 100% on-device with no cloud dependency.

Private LLM is a local AI chatbot for iOS and macOS that works offline, keeping your information completely on-device, safe and private. Enjoy uncensored chat on your iPhone, iPad, and Mac.

RecurseChat is a personal AI app that lets you talk with local AI, works offline, and can chat with PDF and Markdown files.

ProxyAI is an AI-powered code assistant for JetBrains IDEs, offering code completion, natural language editing, and offline support with local LLMs. Enhance your coding with AI.

Ncurator is a browser extension that uses AI to help you manage and analyze your knowledge base. It can find and organize answers for you.

Dot is a local, offline AI chat tool powered by Mistral 7B, allowing you to chat with documents without sending away your data. Free and privacy-focused.

Roo Code is an open-source AI-powered coding assistant for VS Code, featuring AI agents for multi-file editing, debugging, and architecture. It supports various models, ensures privacy, and customizes to your workflow for efficient development.

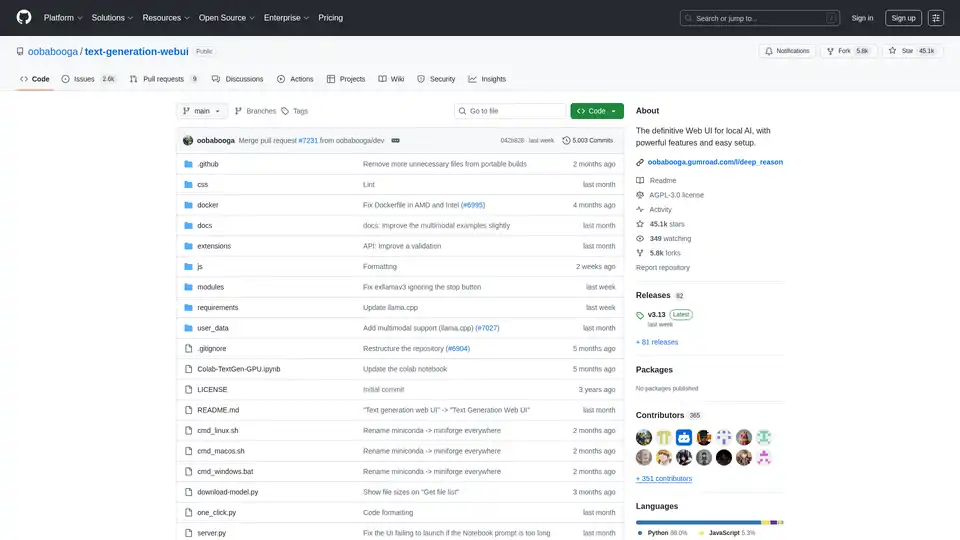

Text Generation Web UI is a powerful, user-friendly Gradio web interface for local AI large language models. Supports multiple backends, extensions, and offers offline privacy.

ProxyAI is an AI copilot for JetBrains IDEs, offering features like code completion, natural language editing, and integration with leading LLMs. It supports offline development and various models via API keys.

Sagify is an open-source Python tool that streamlines machine learning pipelines on AWS SageMaker, offering a unified LLM Gateway for seamless integration of proprietary and open-source large language models to boost productivity.