Lunary

Overview of Lunary

Lunary: The Open-Source LLM Engineering Platform

What is Lunary?

Lunary is an open-source LLM (Large Language Model) engineering platform that helps teams build, monitor, and optimize AI applications. It combines tools for observability, prompt management, evaluations, and product analytics.

How does Lunary work?

Lunary works by integrating into your AI application stack, capturing data from LLMs and related components. This data is then used to provide insights into model performance, user interactions, and overall application health. The platform offers features such as:

- Observability: Real-time monitoring of LLM performance, including latency, cost, and error rates.

- Prompt Management: Tools for creating, versioning, and A/B testing prompts.

- Evaluations: Automated scoring of LLM responses based on predefined criteria.

- Product Analytics: Tracking user engagement and satisfaction with AI-powered features.
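To make the observability idea concrete, here is a minimal sketch of how latency, cost, and error rate could be captured around a model call. It is not Lunary's SDK or implementation: `CallLog`, `monitored`, `fake_llm`, and the per-token price are all hypothetical names and values chosen for illustration, and the records are kept in memory rather than sent to any backend.

```python
import time
from dataclasses import dataclass, field
from functools import wraps


@dataclass
class CallLog:
    """In-memory store for per-call records (a stand-in for a real backend)."""
    records: list = field(default_factory=list)

    def error_rate(self) -> float:
        """Fraction of recorded calls that raised an exception."""
        if not self.records:
            return 0.0
        failed = sum(1 for r in self.records if r["error"] is not None)
        return failed / len(self.records)

    def total_cost(self) -> float:
        """Sum of the estimated cost of all recorded calls, in USD."""
        return sum(r["cost_usd"] for r in self.records)


def monitored(log: CallLog, usd_per_1k_tokens: float):
    """Wrap an LLM-calling function and record latency, cost, and errors."""
    def decorator(fn):
        @wraps(fn)
        def wrapper(prompt: str):
            start = time.perf_counter()
            tokens, error = 0, None
            try:
                text, tokens = fn(prompt)
                return text
            except Exception as exc:
                error = repr(exc)
                raise
            finally:
                # Runs on success and on failure, so every call is logged.
                log.records.append({
                    "prompt": prompt,
                    "latency_s": time.perf_counter() - start,
                    "cost_usd": tokens / 1000 * usd_per_1k_tokens,
                    "error": error,
                })
        return wrapper
    return decorator


log = CallLog()


@monitored(log, usd_per_1k_tokens=0.5)  # assumed price, for illustration only
def fake_llm(prompt: str):
    """Hypothetical model call: returns (text, tokens_used)."""
    if not prompt:
        raise ValueError("empty prompt")
    return prompt.upper(), 2000
```

After a few calls to `fake_llm`, `log.error_rate()` and `log.total_cost()` give the kind of aggregate figures an observability dashboard would chart. A real integration would replace the stub with an actual model client and ship the records to the platform.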

Why choose Lunary?

- Open Source: Full transparency and control over your data and infrastructure.

- Self-Hostable: Deploy Lunary on your own infrastructure for enhanced security and compliance.

- 1-Line Integration: Easy to integrate with existing AI applications using lightweight SDKs.

Who is Lunary for?

Lunary is ideal for teams building:

- Internal Tools: Automate workflows and improve team access to company knowledge.

- Customer Support Chatbots: Provide accurate and helpful responses to customer inquiries.

- Autonomous Agents: Deploy agents to execute complex tasks and monitor their performance.

Key Features and Benefits

- Debug LLM Agents: Log prompts and results to understand how agents are performing in production.

- Track and Analyze Performance: Monitor GenAI project performance, costs, and user interactions.

- Iterate on Prompts: Create templates and collaborate on prompts with non-technical team members.

- Ensure Security: PII masking, RBAC, and SSO for enterprise-grade security and compliance.
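As a rough illustration of prompt templates with versioning and A/B testing, the sketch below stores each saved version of a template and assigns users to a version deterministically. It assumes nothing about how Lunary stores or serves prompts: `PromptRegistry`, `ab_variant`, and the template text are hypothetical.

```python
import hashlib
from string import Template


class PromptRegistry:
    """Keeps every saved version of a named prompt template."""

    def __init__(self):
        self._versions = {}  # name -> list of template strings, oldest first

    def save(self, name: str, template: str) -> int:
        """Store a new version and return its 1-based version number."""
        self._versions.setdefault(name, []).append(template)
        return len(self._versions[name])

    def render(self, name: str, version: int, **variables) -> str:
        """Fill in the $placeholders of the requested version."""
        template = self._versions[name][version - 1]
        return Template(template).substitute(**variables)


def ab_variant(user_id: str, variants: list) -> int:
    """Deterministically assign a user to one of the given versions."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return variants[digest[0] % len(variants)]


registry = PromptRegistry()
v1 = registry.save("support", "Answer the question: $question")
v2 = registry.save("support", "You are a support agent. Answer briefly: $question")

# The same user always lands on the same version, so results stay comparable.
version = ab_variant("user-42", [v1, v2])
prompt = registry.render("support", version, question="How do I reset my password?")
```

Hashing the user ID instead of picking randomly keeps each user on a single variant across sessions, which makes it easier to compare outcomes between versions.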

Use Cases

- Chatbot Analytics: Understand where your chatbot's answers fall short of what your users are asking for.

- Internal Knowledge Bases: Help your team access company knowledge and automate workflows.

- Customer Support Automation: Build customer-facing chatbots that understand your documentation.

- Agent Monitoring: Deploy autonomous agents and monitor their performance in real-time.

Integration and Compatibility

Lunary offers seamless integration with various LLMs and frameworks, including:

- OpenAI

- LangChain

It supports multiple programming languages through its SDKs, including Python.

Security and Compliance

Lunary prioritizes security and compliance with features like:

- PII Masking: Protect user personal information.

- RBAC and SSO: Manage access control and ensure data security.

- Self-Hosting: Deploy in your own VPC with Kubernetes or Docker.

- SOC 2 Type II and ISO 27001 Certified
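PII masking generally means replacing personal data with placeholders before a prompt or response is stored. The sketch below shows the idea with two simple regular expressions. It is an assumption-laden illustration, not Lunary's masking logic: `mask_pii` and the patterns are hypothetical and cover only common email and phone formats.

```python
import re

# Simplified patterns for illustration; production masking needs broader coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def mask_pii(text: str) -> str:
    """Replace email addresses and phone numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


masked = mask_pii("Contact jane.doe@example.com or +1 (555) 123-4567.")
```

Here `masked` is `"Contact [EMAIL] or [PHONE]."`. Masking before the text is logged means the raw values never reach storage.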

Customer Testimonials

- Nevo David, OSS Chief at Gitroom.com: "I had no idea how often our chatbots were hallucinating before monitoring their data with Lunary. Really helping a lot with improving their answer quality. Plus the integration literally took 2 minutes. Essential tool!"

- David Erik Mollberg, GenAI Engineer at Islandsbanki: "Lunary has been instrumental in our GenAI journey. It has provided us with a comprehensive overview of our GenAI application, significantly aiding in both its development and maintenance."

- Bart van der Meeren, CTO & Co-Founder of Growf: "Very happy with Lunary AI as it requires no oversight from us and can always trust that it works reliably. We were setup in minutes and directly got to monitoring and improving our prompts."

Getting Started

Lunary offers both self-hosted and cloud options. You can get started in minutes by:

- Self-hosting the open-source platform.

- Signing up for the cloud version.

Conclusion

Lunary is an open-source LLM engineering platform for building, monitoring, and optimizing AI applications. It combines observability, prompt management, evaluations, and product analytics with self-hosting and security controls such as PII masking, RBAC, and SSO, which makes it a good fit for organizations that want to use LLMs while keeping control of their data and meeting compliance requirements.

Best Alternative Tools to "Lunary"

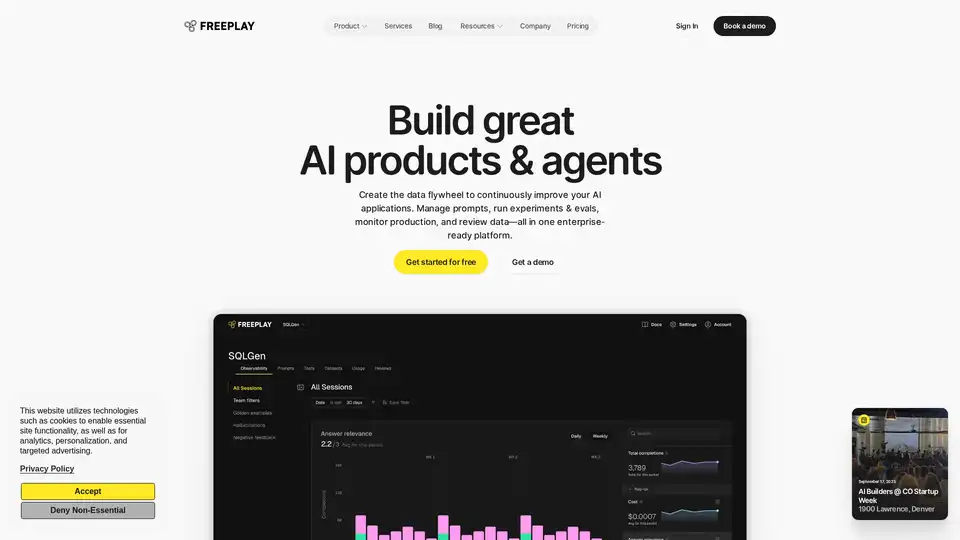

Freeplay is an AI platform designed to help teams build, test, and improve AI products through prompt management, evaluations, observability, and data review workflows. It streamlines AI development and ensures high product quality.

Maxim AI is an end-to-end evaluation and observability platform that helps teams ship AI agents reliably and 5x faster with comprehensive testing, monitoring, and quality assurance tools.

Future AGI is a unified LLM observability and AI agent evaluation platform that helps enterprises achieve 99% accuracy in AI applications through comprehensive testing, evaluation, and optimization tools.

Deliver impactful AI-driven software in minutes, without compromising on quality. Seamlessly ship, monitor, test and iterate without losing focus.

UsageGuard provides a unified AI platform for secure access to LLMs from OpenAI, Anthropic, and more, featuring built-in safeguards, cost optimization, real-time monitoring, and enterprise-grade security to streamline AI development.

Athina is a collaborative AI platform that helps teams build, test, and monitor LLM-based features 10x faster. With tools for prompt management, evaluations, and observability, it ensures data privacy and supports custom models.

Arize AI provides a unified LLM observability and agent evaluation platform for AI applications, from development to production. Optimize prompts, trace agents, and monitor AI performance in real time.

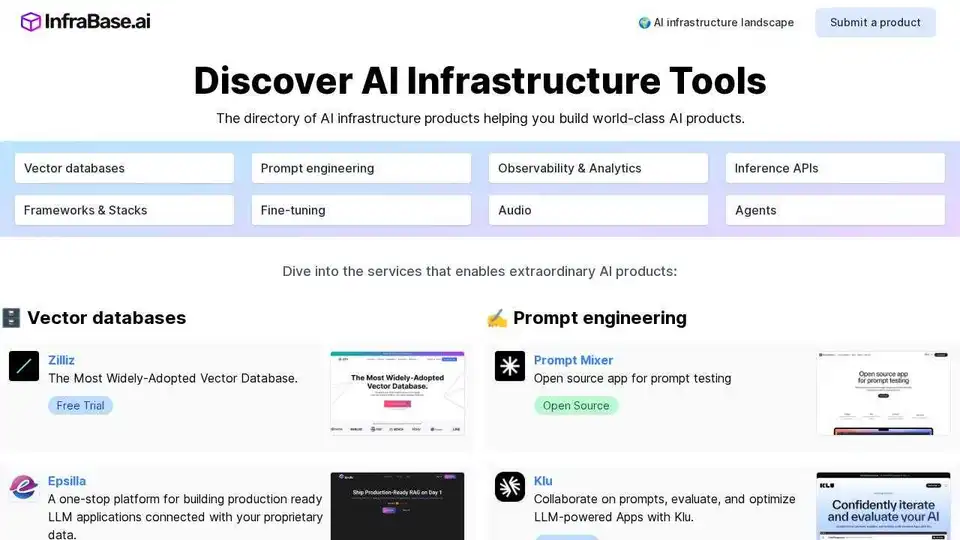

Infrabase.ai is the directory for discovering AI infrastructure tools and services. Find vector databases, prompt engineering tools, inference APIs, and more to build world-class AI products.

Keywords AI is a leading LLM monitoring platform designed for AI startups. Monitor and improve your LLM applications with ease using just 2 lines of code. Debug, test prompts, visualize logs and optimize performance for happy users.

Langtrace is an open-source observability and evaluations platform designed to improve the performance and security of AI agents. Track vital metrics, evaluate performance, and ensure enterprise-grade security for your LLM applications.

Enhance APM with OpenLIT, an open-source platform on OpenTelemetry. Simplify AI development with unified traces and metrics in a powerful interface, optimizing LLM & GenAI observability.

WhyLabs provides AI observability, LLM security, and model monitoring. Guardrail Generative AI applications in real-time to mitigate risks.

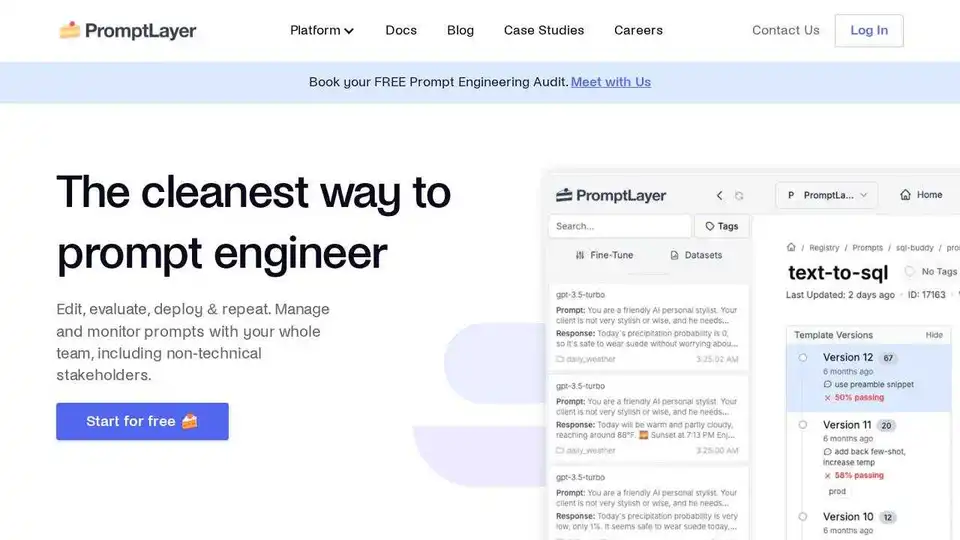

PromptLayer is an AI engineering platform for prompt management, evaluation, and LLM observability. Collaborate with experts, monitor AI agents, and improve prompt quality with powerful tools.

Future AGI offers a unified LLM observability and AI agent evaluation platform for AI applications, ensuring accuracy and responsible AI from development to production.