MagicAnimate

Overview of MagicAnimate

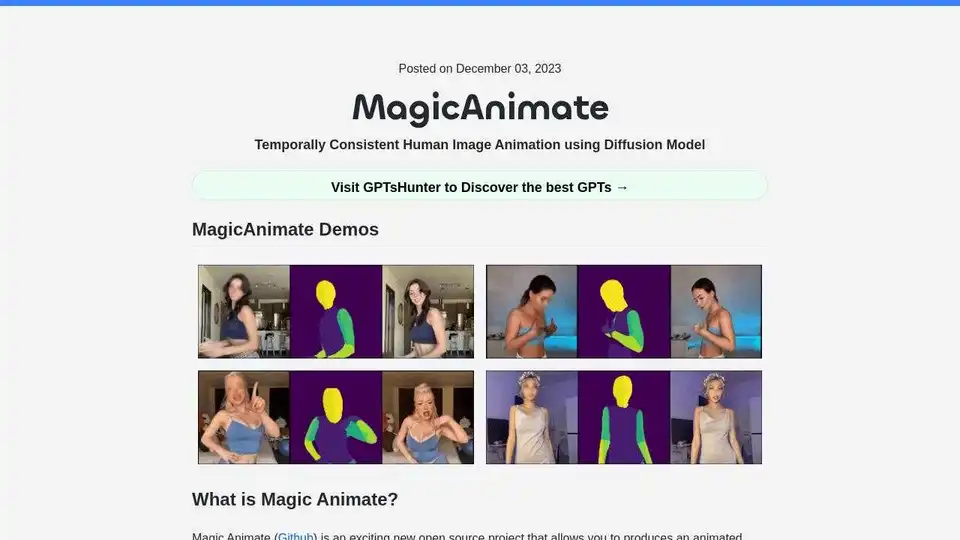

MagicAnimate: Temporally Consistent Human Image Animation using Diffusion Model

MagicAnimate is an open-source project that produces animated videos from a single reference image and a motion video using a diffusion-based framework. It focuses on maintaining temporal consistency across frames and preserving the reference image while improving animation fidelity. It was developed by Show Lab at the National University of Singapore and ByteDance.

What is MagicAnimate?

MagicAnimate animates reference images with motion sequences from various sources, including cross-ID animations and unseen domains such as oil paintings and movie characters. It also works with images produced by text-to-image diffusion models such as DALL-E 3, bringing text-prompted images to life with dynamic actions.

Key Features and Advantages:

- Temporal Consistency: Maintains consistency throughout the animation.

- Reference Image Preservation: Faithfully preserves the details of the reference image.

- Enhanced Animation Fidelity: Improves the quality and realism of the animation.

- Versatile Motion Sources: Supports motion sequences from various sources, including unseen domains.

- Integration with T2I Models: Compatible with text-to-image diffusion models like DALL-E 3.

Disadvantages:

- Some distortion in the face and hands.

- Style shifts from anime to realism in the faces.

- Body proportion changes when applying anime style to real human-based videos.

Getting Started with MagicAnimate:

- Prerequisites: Ensure you have Python >= 3.8, CUDA >= 11.3, and FFmpeg installed.

- Installation:

    conda env create -f environment.yml
    conda activate manimate
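The version prerequisites above can be checked with a short script before creating the environment. The following is a minimal sketch, not part of MagicAnimate: the helper names `compareVersions` and `findUnmetPrerequisites` are hypothetical, and the installed versions are assumed to be entered by hand (for example from `python --version` and `nvcc --version`). Versions are compared numerically per component, because a plain string comparison would wrongly rank "3.10" below "3.8".

```javascript
// Compare dotted version strings numerically, component by component.
// Missing components count as 0, so "11.3" equals "11.3.0".
function compareVersions(a, b) {
  const pa = a.split(".").map(Number);
  const pb = b.split(".").map(Number);
  const len = Math.max(pa.length, pb.length);
  for (let i = 0; i < len; i++) {
    const x = pa[i] ?? 0;
    const y = pb[i] ?? 0;
    if (x !== y) return x < y ? -1 : 1;
  }
  return 0;
}

// Minimum versions taken from the prerequisites list above.
const MINIMUMS = { python: "3.8", cuda: "11.3" };

// Returns the names of tools whose version is missing or below the minimum.
function findUnmetPrerequisites(installed) {
  return Object.entries(MINIMUMS)
    .filter(([name, min]) => !installed[name] || compareVersions(installed[name], min) < 0)
    .map(([name]) => name);
}

// Hand-entered example versions (assumed values, for illustration only).
console.log(findUnmetPrerequisites({ python: "3.10.12", cuda: "11.8" })); // []
console.log(findUnmetPrerequisites({ python: "3.7.9", cuda: "11.3" })); // [ 'python' ]
```

FFmpeg is left out of the check because the prerequisites give no minimum version for it; it only needs to be installed.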

How to Use MagicAnimate:

Online Demo: Try the MagicAnimate online demo on Hugging Face or Replicate.

Colab: Run MagicAnimate on Google Colab using this tutorial: How to Run MagicAnimate on Colab.

Replicate API: Use the Replicate API to generate animated videos.

    import Replicate from "replicate";

    const replicate = new Replicate({
      auth: process.env.REPLICATE_API_TOKEN,
    });

    const output = await replicate.run(
      "lucataco/magic-animate:e24ad72cc67dd2a365b5b909aca70371bba62b685019f4e96317e59d4ace6714",
      {
        input: {
          image: "https://example.com/image.png",
          video: "Input motion video",
          num_inference_steps: 25, // Number of denoising steps
          guidance_scale: 7.5, // Scale for classifier-free guidance
          seed: 349324 // Random seed. Leave blank to randomize the seed
        }
      }
    );
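The `input` object in the call above can be assembled and validated before any request is sent. The sketch below is illustrative only: `buildMagicAnimateInput` and `isHttpUrl` are hypothetical helpers, not part of the Replicate client, and it assumes both the image and the motion video are passed as http(s) URLs. The defaults mirror the values in the example above, and the seed is included only when supplied, which matches the note that leaving it blank randomizes it.

```javascript
// Defaults mirror the values used in the Replicate example above.
const DEFAULTS = { num_inference_steps: 25, guidance_scale: 7.5 };

// True only for strings that parse as http(s) URLs.
function isHttpUrl(value) {
  try {
    const url = new URL(value);
    return url.protocol === "http:" || url.protocol === "https:";
  } catch {
    return false;
  }
}

// Hypothetical helper: validates options and returns an object that can be
// passed as the `input` field of `replicate.run`.
function buildMagicAnimateInput({ image, video, steps, guidance, seed } = {}) {
  if (!isHttpUrl(image)) throw new Error("image must be an http(s) URL");
  if (!isHttpUrl(video)) throw new Error("video must be an http(s) URL");

  const input = {
    image,
    video,
    num_inference_steps: steps ?? DEFAULTS.num_inference_steps,
    guidance_scale: guidance ?? DEFAULTS.guidance_scale,
  };
  if (!Number.isInteger(input.num_inference_steps) || input.num_inference_steps < 1) {
    throw new Error("num_inference_steps must be a positive integer");
  }

  // Include the seed only when given; omitting it leaves the seed random.
  if (seed !== undefined) {
    if (!Number.isInteger(seed)) throw new Error("seed must be an integer");
    input.seed = seed;
  }
  return input;
}

// The motion video URL here is a made-up placeholder.
const exampleInput = buildMagicAnimateInput({
  image: "https://example.com/image.png",
  video: "https://example.com/motion.mp4",
});
console.log(exampleInput); // no `seed` key, so the seed is randomized
```

Validating locally means a malformed request fails immediately instead of after a round trip to the API.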

How to Generate Motion Videos:

- Use OpenPose, a real-time multi-person keypoint detection library, to convert videos into motion videos.

- Convert a source video into an OpenPose motion video with this model: video to openpose.

- Use the magic-animate-openpose model with OpenPose.
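The steps above amount to a two-stage pipeline: first convert an ordinary video into an OpenPose motion video, then feed that motion video and the reference image to the magic-animate-openpose model. The sketch below shows only the control flow. The model identifiers are placeholders, because the text names the models without giving their versioned IDs, and the input field names (`video`, `image`) are assumptions that should be checked against each model's schema. The `run` function is injected so the flow can be exercised without network access; with the Replicate client it could be `replicate.run.bind(replicate)`.

```javascript
// Placeholder identifiers: replace with the real "owner/name:version" strings.
const MODELS = {
  videoToOpenPose: "<video-to-openpose model id>",
  magicAnimateOpenPose: "<magic-animate-openpose model id>",
};

// `run(model, { input })` follows the calling convention of `replicate.run`.
async function animateWithOpenPose(run, { image, sourceVideo }) {
  // Step 1: turn the ordinary video into an OpenPose motion video.
  const poseVideo = await run(MODELS.videoToOpenPose, {
    input: { video: sourceVideo }, // field name is an assumption
  });

  // Step 2: animate the reference image with that motion video.
  return run(MODELS.magicAnimateOpenPose, {
    input: { image, video: poseVideo }, // field names are assumptions
  });
}

// Dry run with a stub in place of the real API.
const calls = [];
const fakeRun = async (model, { input }) => {
  calls.push({ model, input });
  return `output-of-${calls.length}`;
};

animateWithOpenPose(fakeRun, { image: "ref.png", sourceVideo: "dance.mp4" })
  .then((result) => console.log(result)); // output-of-2
```

Passing `run` as a parameter keeps the pipeline independent of any particular client library and makes the hand-off between the two stages easy to verify.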

Additional Resources:

- Official MagicAnimate introduction

- MagicAnimate Paper.pdf

- MagicAnimate arXiv

- MagicAnimate GitHub Code

- MagicAnimate Demo

What is the primary function of MagicAnimate?

MagicAnimate's primary function is to generate animated videos from a single reference image and a motion video, ensuring temporal consistency and high fidelity.

How does MagicAnimate work?

MagicAnimate uses a diffusion-based framework to analyze the motion in the input video and apply it to the reference image, generating a new video that mimics the motion while preserving the visual characteristics of the reference image.

Best Alternative Tools to "MagicAnimate"

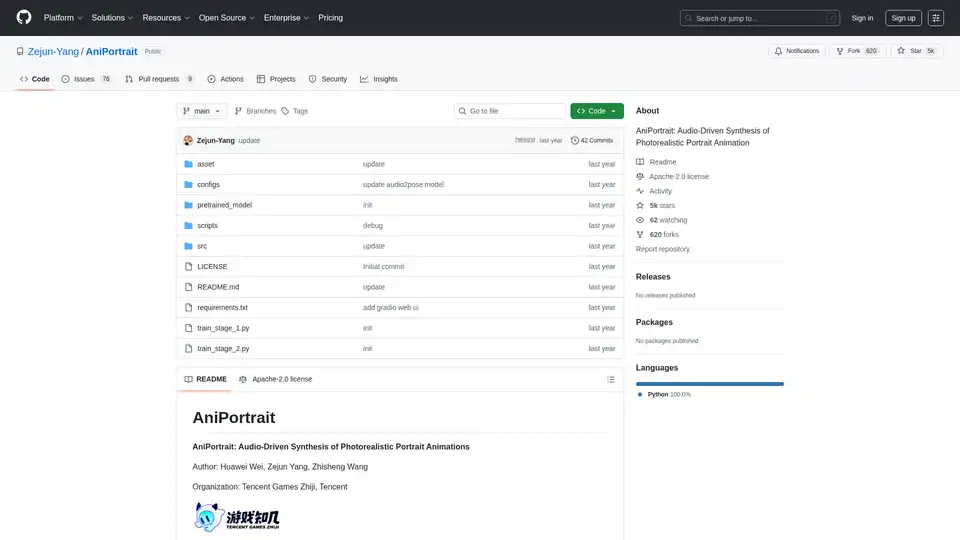

AniPortrait is an open-source AI framework for generating photorealistic portrait animations driven by audio or video inputs. It supports self-driven, face reenactment, and audio-driven modes for high-quality video synthesis.

AnimateDiff is a free online video maker that brings motion to AI-generated visuals. Create animations from text prompts or animate existing images with natural movements learned from real videos. This plug-and-play framework adds video capabilities to diffusion models like Stable Diffusion without retraining. Explore the future of AI content creation with AnimateDiff's text-to-video and image-to-video generation tools.

Free uncensored AI tools for dreamers. Create, edit, and animate videos with the power of AI. Unleash your imagination through free unrestricted AI technology.

Transform photos into captivating cartoons with ToonCrafter AI, an open-source AI tool for seamless cartoon interpolation and video generation. Perfect for animation enthusiasts and creative directors.

Anime Art Studio is a 100% free AI anime generator with 24/7 access to 100+ stable diffusion anime models. Transform text into stunning anime art with ease.

FramePack enables high-quality video generation on consumer GPUs with just 6GB VRAM. This open-source video diffusion technology uses frame context packing for fast, local execution.

Explore AI Library, the comprehensive catalog of over 2150 neural networks and AI tools for generative content creation. Discover top AI art models, tools for text-to-image, video generation, and more to boost your creative projects.

MimicPC is an open-source AI platform for creating AI images, videos, and audio. Train LoRA models without deployment and customize with your own models at an affordable price.

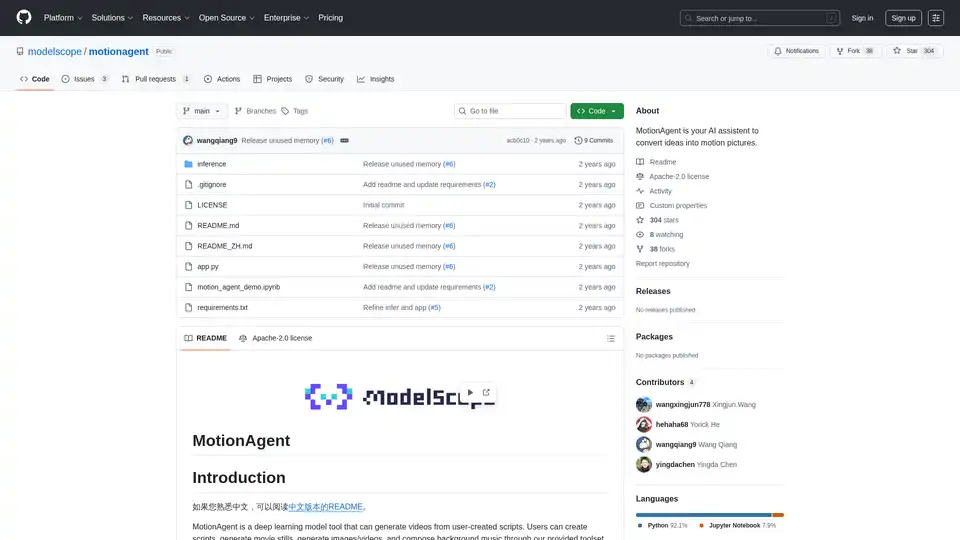

MotionAgent is an open-source AI tool that transforms ideas into motion pictures by generating scripts, movie stills, high-res videos, and custom background music using models like Qwen-7B-Chat and SDXL.

Discover NightCafe, the ultimate free AI art generator with top models like Flux and DALL-E 3, vibrant community, and daily challenges for endless creativity.

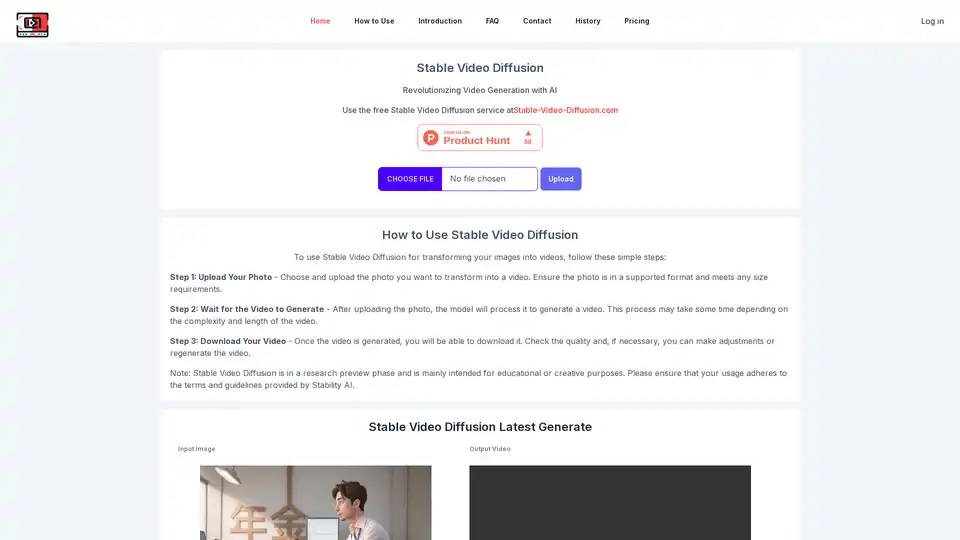

Stable Video Diffusion is a free AI tool by Stability AI that transforms images into videos. Perfect for creative and educational purposes. Try AI video generation now!

All-in-One AI Creator Tools: Your One-Stop AI Platform for Text, Image, Video, and Digital Human Creation. Transform ideas into stunning visuals quickly with advanced AI features.

Lumiere, by Google Research, is a space-time diffusion model for video generation. It supports text-to-video, image-to-video, video stylization, cinemagraphs, and inpainting, generating realistic and coherent motion.

Ray3 is the world's first AI video model with HDR generation and intelligent reasoning. Transform text into stunning 4K HDR videos using Draft Mode, Hi-Fi Diffusion, voiceover, and subtitles. Ideal for creators, businesses, and professionals.