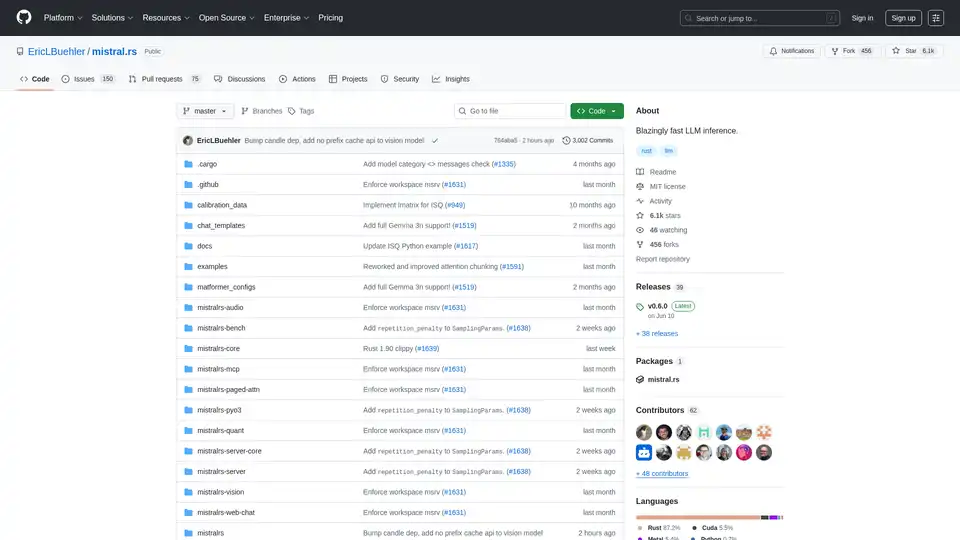

mistral.rs

Overview of mistral.rs

What is mistral.rs?

Mistral.rs is a cross-platform, blazingly fast Large Language Model (LLM) inference engine written in Rust. It's designed to provide high performance and flexibility across various platforms and hardware configurations. Supporting multimodal workflows, mistral.rs handles text, vision, image generation, and speech.

Key Features and Benefits

- Multimodal Workflow: Supports text↔text, text+vision↔text, text+vision+audio↔text, text→speech, text→image.

- APIs: Offers Rust, Python, and OpenAI-compatible HTTP server APIs (including Chat Completions and the Responses API) for easy integration into different environments.

- MCP Client: Connects to external tools and services automatically, such as file systems, web search, databases, and other APIs.

- Performance: Utilizes technologies like ISQ (In-place quantization), PagedAttention, and FlashAttention for optimized performance.

- Ease of Use: Includes features like automatic device mapping (multi-GPU, CPU), chat templates, and tokenizer auto-detection.

- Flexibility: Supports LoRA & X-LoRA adapters with weight merging, AnyMoE for creating MoE models on any base model, and customizable quantization.

How does mistral.rs work?

Mistral.rs leverages several key techniques to achieve its high performance:

- In-place Quantization (ISQ): Reduces the memory footprint and improves inference speed by quantizing the model weights.

- PagedAttention & FlashAttention: Optimize memory usage and computational efficiency of the attention computation.

- Automatic Device Mapping: Automatically distributes the model across available hardware resources, including multiple GPUs and CPUs.

- MCP (Model Context Protocol): Enables seamless integration with external tools and services by providing a standardized protocol for tool calls.
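
To make the quantization idea concrete, here is a minimal Python sketch of symmetric 8-bit quantization of a list of weights, plus a rough weight-memory estimate. It illustrates the general technique only: the function names are hypothetical, and the actual ISQ formats used by mistral.rs are not described in this overview and may differ (for example in bit width or in how scales are stored).

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid division by zero
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize_int8(quantized, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]


def weight_bytes(num_params, bits_per_weight):
    """Rough weight storage in bytes, ignoring scales and other metadata."""
    return num_params * bits_per_weight // 8


# Illustrative values only; real models hold billions of weights.
weights = [0.5, -1.0, 0.25, 0.0]
quantized, scale = quantize_int8(weights)
restored = dequantize_int8(quantized, scale)
```

Each restored weight differs from the original by at most half a quantization step (scale / 2), which is the accuracy cost paid for the smaller footprint. By the same rough estimate, a 7-billion-parameter model needs about 14 GB for its weights at 16 bits per weight and about 3.5 GB at 4 bits.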

How to use mistral.rs?

Installation: Follow the installation instructions provided in the official documentation. This typically involves installing Rust and cloning the mistral.rs repository.

Model Acquisition: Obtain the desired model. Mistral.rs supports various model formats, including Hugging Face models, GGUF, and GGML.

API Usage: Utilize the Rust, Python, or OpenAI-compatible HTTP server APIs to interact with the inference engine. Examples and documentation are available for each API.

- Python API:

pip install mistralrs

- Rust API: Add the following to your Cargo.toml:

mistralrs = { git = "https://github.com/EricLBuehler/mistral.rs.git" }

Run the Server: Launch the mistralrs-server with the appropriate configuration options. This may involve specifying the model path, quantization method, and other parameters.

./mistralrs-server --port 1234 run -m microsoft/Phi-3.5-MoE-instruct
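
Once the server is running, a client can talk to it over the OpenAI-compatible HTTP API. The sketch below uses only the Python standard library. It assumes the server from the command above is listening on localhost port 1234 and exposes the usual /v1/chat/completions route; the helper names and the value of the model field are illustrative assumptions, not taken from the mistral.rs documentation.

```python
import json
import urllib.request

BASE_URL = "http://localhost:1234/v1"  # assumed: port taken from the server command


def build_chat_request(prompt, model="microsoft/Phi-3.5-MoE-instruct", max_tokens=128):
    """Build a Chat Completions request without sending it."""
    payload = {
        "model": model,  # assumed to match the model the server was started with
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(prompt):
    """Send the request and return the reply text (requires a running server)."""
    with urllib.request.urlopen(build_chat_request(prompt)) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the server up, calling send_chat_request("Hello") would return the model's reply as a string. Keeping request construction separate from sending makes the payload easy to inspect without a network connection.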

Use Cases

Mistral.rs is suitable for a wide range of applications, including:

- Chatbots and Conversational AI: Power interactive and engaging chatbots with high-performance inference.

- Text Generation: Generate realistic and coherent text for various purposes, such as content creation and summarization.

- Image and Video Analysis: Process and analyze visual data with integrated vision capabilities.

- Speech Recognition and Synthesis: Enable speech-based interactions with support for audio processing.

- Tool Calling and Automation: Integrate with external tools and services for automated workflows.

Who is mistral.rs for?

Mistral.rs is designed for:

- Developers who need a fast and flexible LLM inference engine for their applications.

- Researchers who are exploring new models and techniques in natural language processing.

- Organizations that require high-performance AI capabilities for their products and services.

Why choose mistral.rs?

- Performance: Offers blazingly fast inference speeds through techniques like ISQ, PagedAttention, and FlashAttention.

- Flexibility: Supports a wide range of models, quantization methods, and hardware configurations.

- Ease of Use: Provides simple APIs and automatic configuration options for easy integration.

- Extensibility: Allows for integration with external tools and services through MCP (Model Context Protocol).

Supported Accelerators

Mistral.rs supports a variety of accelerators:

- NVIDIA GPUs (CUDA): Use the cuda, flash-attn, and cudnn feature flags.

- Apple Silicon GPU (Metal): Use the metal feature flag.

- CPU (Intel): Use the mkl feature flag.

- CPU (Apple Accelerate): Use the accelerate feature flag.

- Generic CPU (ARM/AVX): Enabled by default.

To enable features, pass them to Cargo:

cargo build --release --features "cuda flash-attn cudnn"
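
When builds are scripted, the mapping from accelerator to feature flags can be captured in a small helper. The following Python sketch encodes only the flags listed above; the function name and the accelerator keys are hypothetical.

```python
# Feature flags per accelerator, as listed in this section.
FEATURES = {
    "cuda": ["cuda", "flash-attn", "cudnn"],  # NVIDIA GPUs
    "metal": ["metal"],                       # Apple Silicon GPU
    "mkl": ["mkl"],                           # Intel CPU
    "accelerate": ["accelerate"],             # Apple Accelerate CPU
    "cpu": [],                                # generic CPU, enabled by default
}


def cargo_build_command(accelerator):
    """Return the cargo argument list for a release build on the given accelerator."""
    features = FEATURES[accelerator]  # raises KeyError for unknown keys
    command = ["cargo", "build", "--release"]
    if features:
        command += ["--features", " ".join(features)]
    return command
```

The returned list can be passed to subprocess.run from the repository root; for "cuda" it reproduces the command shown above.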

Conclusion

Mistral.rs stands out as a powerful and versatile LLM inference engine, offering blazing-fast performance, extensive flexibility, and seamless integration capabilities. Its cross-platform nature and support for multimodal workflows make it an excellent choice for developers, researchers, and organizations looking to harness the power of large language models in a variety of applications. By leveraging its advanced features and APIs, users can create innovative and impactful AI solutions with ease.

For those seeking to optimize their AI infrastructure and unlock the full potential of LLMs, mistral.rs provides a robust and efficient solution that is well-suited for both research and production environments.

Best Alternative Tools to "mistral.rs"

Lorelight is an AI monitoring platform designed for PR teams to track brand mentions across major AI platforms like ChatGPT, Claude, and Gemini, offering real-time insights and competitive intelligence.

Botpress is a complete AI agent platform powered by the latest LLMs. It enables you to build, deploy, and manage AI agents for customer support, internal automation, and more, with seamless integration capabilities.

Superlines is an AI-powered solution for Generative Engine Optimization (GEO) & LLM SEO. Track and optimize your brand’s visibility across AI platforms like ChatGPT, Gemini, and Perplexity. It helps you stay visible as search shifts toward AI systems.

HUMAIN provides full-stack AI solutions, covering infrastructure, data, models, and applications. Accelerate progress and unlock real-world impact at scale with HUMAIN's AI-native platforms.

AI Runner is an offline AI inference engine for art, real-time voice conversations, LLM-powered chatbots, and automated workflows. Run image generation, voice chat, and more locally!

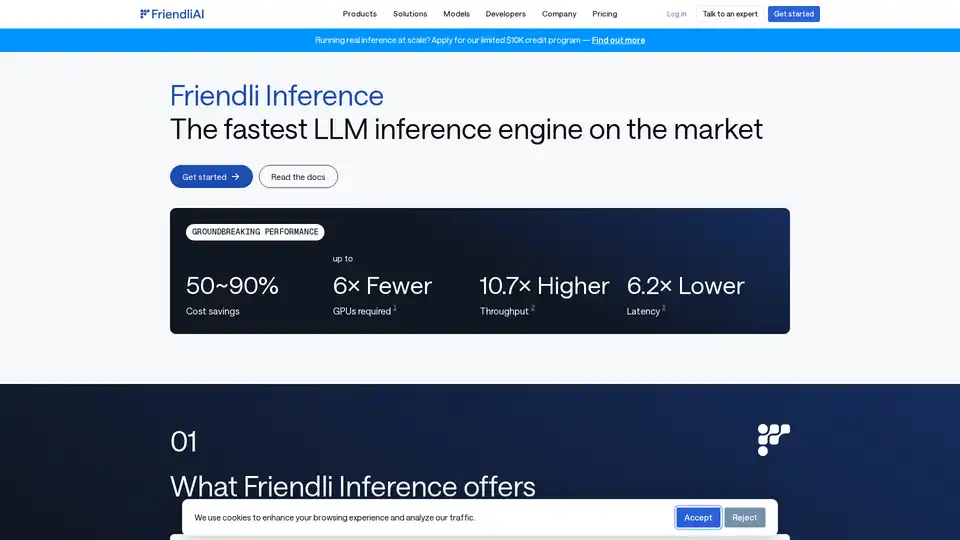

Friendli Inference is the fastest LLM inference engine, optimized for speed and cost-effectiveness, slashing GPU costs by 50-90% while delivering high throughput and low latency.

PocketLLM is a private AI knowledge search engine by ThirdAI. Search through PDFs, documents, and URLs locally on your device. Fine-tune results and summarize for easy understanding.

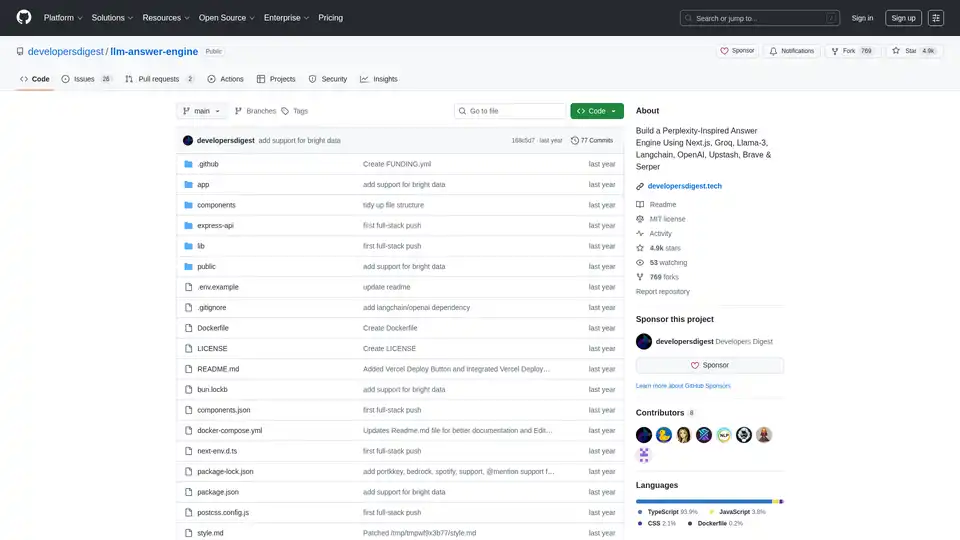

Build a Perplexity-inspired AI answer engine using Next.js, Groq, Llama-3, and Langchain. Get sources, answers, images, and follow-up questions efficiently.

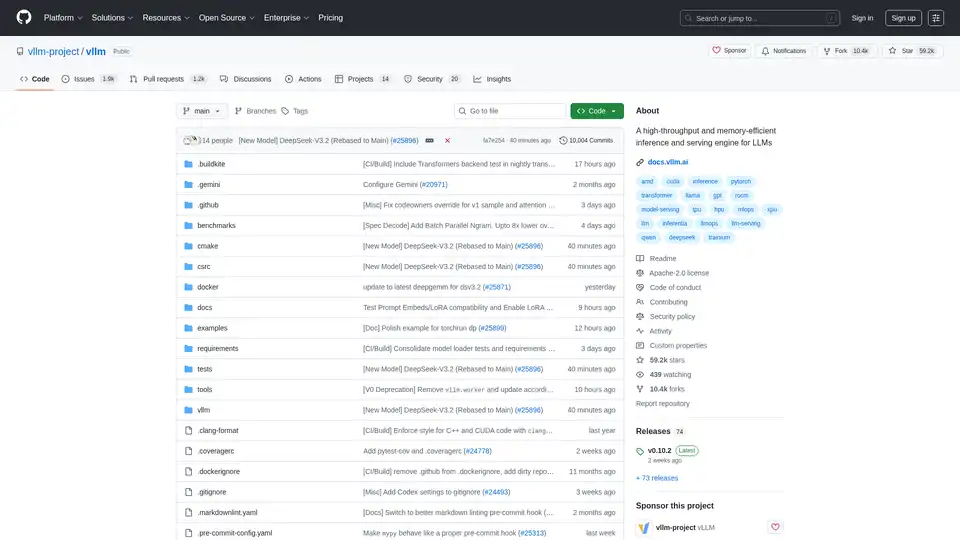

vLLM is a high-throughput and memory-efficient inference and serving engine for LLMs, featuring PagedAttention and continuous batching for optimized performance.

Lightning-fast AI platform for developers. Deploy, fine-tune, and run 200+ optimized LLMs and multimodal models with simple APIs - SiliconFlow.

Xander is an open-source desktop platform that enables no-code AI model training. Describe tasks in natural language for automated pipelines in text classification, image analysis, and LLM fine-tuning, ensuring privacy and performance on your local machine.

Rierino is a powerful low-code platform accelerating ecommerce and digital transformation with AI agents, composable commerce, and seamless integrations for scalable innovation.

Spice.ai is an open source data and AI inference engine for building AI apps with SQL query federation, acceleration, search, and retrieval grounded in enterprise data.

Groq offers a hardware and software platform (LPU Inference Engine) for fast, high-quality, and energy-efficient AI inference. GroqCloud provides cloud and on-prem solutions for AI applications.