Release.ai

Overview of Release.ai

What is Release.ai?

Release.ai is a platform designed to simplify the deployment and management of AI models. It offers high-performance inference capabilities with sub-100ms latency, enterprise-grade security, and seamless scalability, making it easier for developers to integrate AI into their applications.

How does Release.ai work?

Release.ai provides an optimized infrastructure for various AI model types, from Large Language Models (LLMs) to computer vision models. It allows users to deploy models quickly with just a few lines of code using comprehensive SDKs and APIs. The platform automatically scales to handle thousands of concurrent requests while ensuring consistent performance.

Key Features of Release.ai:

- High-Performance Inference: Deploy models with sub-100ms latency, ensuring rapid response times for AI applications.

- Seamless Scalability: Automatically scale from zero to thousands of concurrent requests, adapting to your needs.

- Enterprise-Grade Security: Benefit from SOC 2 Type II compliance, private networking, and end-to-end encryption.

- Optimized Infrastructure: Leverage fine-tuned infrastructure for LLMs, computer vision, and other model types.

- Easy Integration: Integrate with your existing tech stack using comprehensive SDKs and APIs.

- Reliable Monitoring: Track model performance with real-time monitoring and detailed analytics.

- Cost-Effective Pricing: Pay only for what you use, with pricing that scales with your usage.

- Expert Support: Access assistance from ML experts to optimize models and resolve issues.

Why Choose Release.ai?

Leading companies choose Release.ai for its ability to deploy AI models quickly and efficiently. Unlike other platforms, Release.ai offers fully automated infrastructure management, enterprise-grade security, and superior performance optimization.

| Feature | Release.ai | Baseten.co |

|---|---|---|

| Model Deployment Time | Under 5 minutes | 15-30 minutes |

| Infrastructure Management | Fully automated | Partially automated |

| Performance Optimization | Sub-100ms latency | Variable latency |

| Security Features | Enterprise-grade (SOC 2 Type II compliant) | Standard |

| Scaling Capabilities | Automatic (zero to thousands of concurrent requests) | Manual configuration required |

How to use Release.ai?

- Sign Up: Create a Release.ai account to access the platform.

- Deploy Model: Use the SDKs and APIs to deploy your AI model with just a few lines of code.

- Integrate: Integrate the deployed model into your existing applications.

- Monitor: Use real-time monitoring and analytics to track model performance.

Who is Release.ai for?

Release.ai is ideal for:

- Developers: Quickly deploy and integrate AI models into applications.

- AI Engineers: Optimize model performance and scalability.

- Businesses: Leverage AI for various use cases with enterprise-grade security.

Explore AI Models on Release.ai

Release.ai offers a variety of pre-trained AI models that you can deploy, including:

- deepseek-r1: Reasoning models with performance comparable to OpenAI-o1.

- olmo2: Models trained on up to 5T tokens, competitive with Llama 3.1.

- command-r7b: Efficient models for building AI applications on commodity GPUs.

- phi4: State-of-the-art open model from Microsoft.

- dolphin3: Instruct-tuned models for coding, math, and general use cases.

Best way to deploy AI models?

Release.ai offers a streamlined solution for deploying AI models with high performance, security, and scalability. Its optimized infrastructure and easy integration tools make it a top choice for developers and businesses looking to leverage AI.

Release.ai's platform is designed to offer high-performance, secure, and scalable AI inference through its optimized deployment platform. It stands out by providing sub-100ms latency, enterprise-grade security, and seamless scalability, ensuring rapid response times and consistent performance for AI applications.

Release.ai is optimized for various model types, including LLMs and computer vision, with comprehensive SDKs and APIs that allow for quick deployment using just a few lines of code. Its features include real-time monitoring and detailed analytics for tracking model performance, ensuring users can identify and resolve issues quickly.

With cost-effective pricing that scales with usage, Release.ai also offers expert support to assist users in optimizing their models and resolving any issues. The platform's commitment to enterprise-grade security, SOC 2 Type II compliance, private networking, and end-to-end encryption ensures that models and data remain secure and compliant.

Best Alternative Tools to "Release.ai"

Lightning-fast AI platform for developers. Deploy, fine-tune, and run 200+ optimized LLMs and multimodal models with simple APIs - SiliconFlow.

BrainHost VPS provides high-performance KVM virtual servers with NVMe storage, ideal for AI inference, websites, and e-commerce. Quick 30s provisioning in Hong Kong and US West ensures reliable global access.

Runpod is an AI cloud platform simplifying AI model building and deployment. Offering on-demand GPU resources, serverless scaling, and enterprise-grade uptime for AI developers.

Denvr Dataworks provides high-performance AI compute services, including on-demand GPU cloud, AI inference, and a private AI platform. Accelerate your AI development with NVIDIA H100, A100 & Intel Gaudi HPUs.

Nebius AI Studio Inference Service offers hosted open-source models for faster, cheaper, and more accurate results than proprietary APIs. Scale seamlessly with no MLOps needed, ideal for RAG and production workloads.

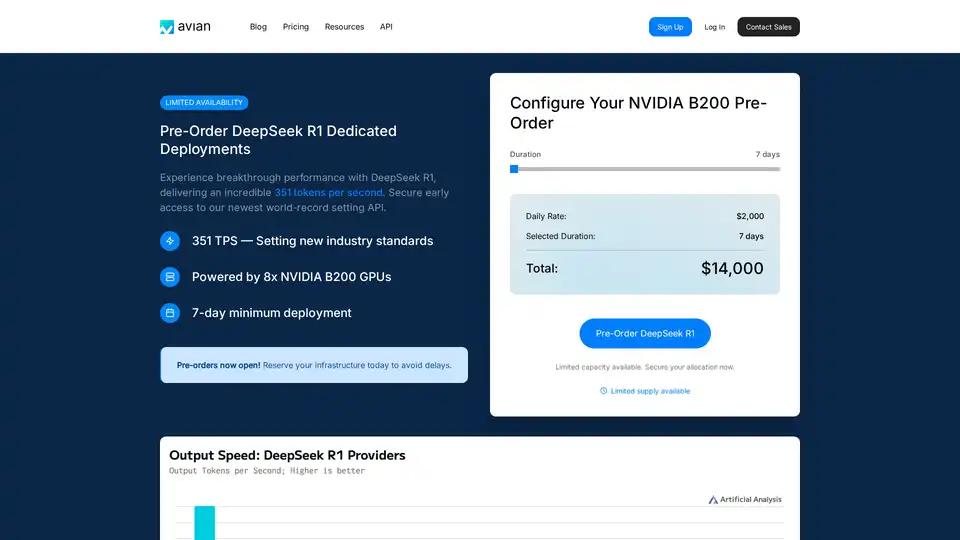

Avian API offers the fastest AI inference for open source LLMs, achieving 351 TPS on DeepSeek R1. Deploy any HuggingFace LLM at 3-10x speed with an OpenAI-compatible API. Enterprise-grade performance and privacy.

ThirdAI is a GenAI platform that runs on CPUs, offering enterprise-grade AI solutions with enhanced security, scalability, and performance. It simplifies AI application development, reducing the need for specialized hardware and skills.

Simplify AI deployment with Synexa. Run powerful AI models instantly with just one line of code. Fast, stable, and developer-friendly serverless AI API platform.

Datature is an end-to-end vision AI platform that accelerates data labeling, model training, and deployment for enterprises and developers. Build production-ready datasets 10x faster and seamlessly integrate vision intelligence.

UsageGuard provides a unified AI platform for secure access to LLMs from OpenAI, Anthropic, and more, featuring built-in safeguards, cost optimization, real-time monitoring, and enterprise-grade security to streamline AI development.

Rierino is a powerful low-code platform accelerating ecommerce and digital transformation with AI agents, composable commerce, and seamless integrations for scalable innovation.

Inferless offers blazing fast serverless GPU inference for deploying ML models. It provides scalable, effortless custom machine learning model deployment with features like automatic scaling, dynamic batching, and enterprise security.

Prodia turns complex AI infrastructure into production-ready workflows — fast, scalable, and developer-friendly.

Wavify is the ultimate platform for on-device speech AI, enabling seamless integration of speech recognition, wake word detection, and voice commands with top-tier performance and privacy.