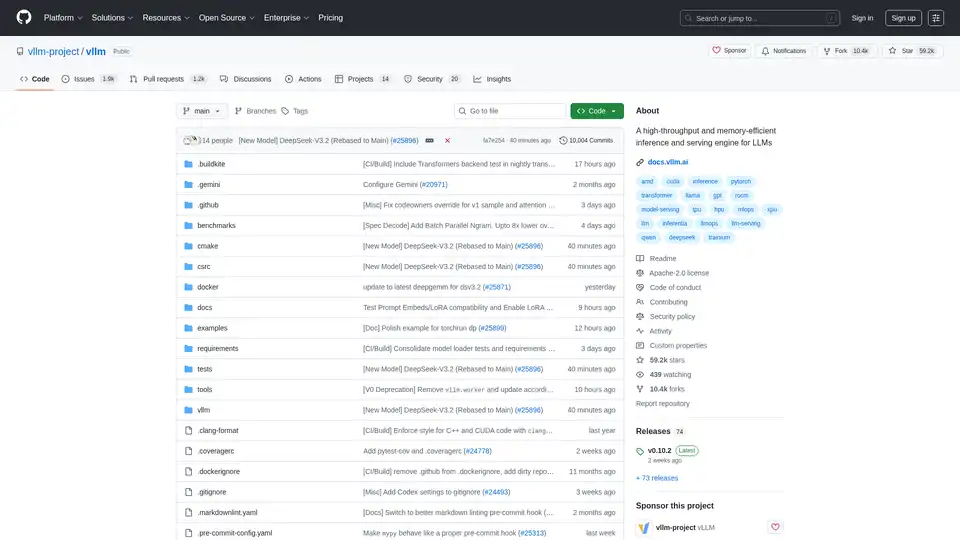

vLLM

Overview of vLLM

vLLM: Fast and Easy LLM Serving

vLLM is a high-throughput and memory-efficient inference and serving engine for large language models (LLMs). Originally developed in the Sky Computing Lab at UC Berkeley, it has grown into a community-driven project supported by both academia and industry.

What is vLLM?

vLLM is an open-source engine for LLM inference and serving, designed to make both faster and more accessible.

Key Features of vLLM

vLLM is engineered for speed, flexibility, and ease of use. Here's a detailed look at its features:

- State-of-the-art Serving Throughput: vLLM is designed to maximize the throughput of your LLM serving, allowing you to handle more requests with less hardware.

- Efficient Memory Management with PagedAttention: PagedAttention stores the attention key-value (KV) cache in fixed-size blocks that need not be contiguous in GPU memory. This reduces fragmentation and wasted memory, so more requests fit on the same GPU.

- Continuous Batching of Incoming Requests: vLLM continuously batches incoming requests to optimize the utilization of computing resources.

- Fast Model Execution with CUDA/HIP Graph: vLLM captures model execution as CUDA/HIP graphs and replays them, which cuts the CPU overhead of launching many small GPU kernels.

- Quantization Support: vLLM supports various quantization techniques like GPTQ, AWQ, AutoRound, INT4, INT8, and FP8 to reduce memory footprint and accelerate inference.

- Optimized CUDA Kernels: Includes integration with FlashAttention and FlashInfer for enhanced performance.

- Speculative Decoding: Speeds up generation by having a cheaper draft mechanism propose several tokens that the main model then verifies in a single forward pass.

- Seamless Integration with Hugging Face Models: vLLM works effortlessly with popular models from Hugging Face.

- High-Throughput Serving with Various Decoding Algorithms: Supports parallel sampling, beam search, and more.

- Tensor, Pipeline, Data, and Expert Parallelism: Offers various parallelism strategies for distributed inference.

- Streaming Outputs: Provides streaming outputs for a more interactive user experience.

- OpenAI-Compatible API Server: Simplifies integration with existing systems.

- Broad Hardware Support: Compatible with NVIDIA GPUs, AMD CPUs and GPUs, Intel CPUs and GPUs, PowerPC CPUs, and TPUs. Also supports hardware plugins like Intel Gaudi, IBM Spyre, and Huawei Ascend.

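- Prefix Caching Support: Reuses the KV cache already computed for a shared prompt prefix (such as a common system prompt) across requests, so that prefix does not have to be recomputed.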

- Multi-LoRA Support: Serves multiple LoRA (Low-Rank Adaptation) adapters on top of a single base model at the same time.
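To make the idea behind continuous batching concrete, here is a minimal Python sketch that only counts decode steps. It is not vLLM's scheduler: the function names, the `max_batch` limit, and the workload are hypothetical, and it assumes every decode step costs the same regardless of batch size. Static batching runs each batch until its longest request finishes; continuous batching refills a slot as soon as any request finishes.

```python
from collections import deque


def static_batching_steps(lengths: list[int], max_batch: int) -> int:
    """Total decode steps when a batch must fully finish before the next starts."""
    steps = 0
    for i in range(0, len(lengths), max_batch):
        batch = lengths[i:i + max_batch]
        # The whole batch runs as long as its longest request.
        steps += max(batch)
    return steps


def continuous_batching_steps(lengths: list[int], max_batch: int) -> int:
    """Total decode steps when finished requests are replaced at every step."""
    waiting = deque(lengths)
    running: list[int] = []  # remaining decode steps for each running request
    steps = 0
    while waiting or running:
        # Admit waiting requests into any free slots before this step.
        while waiting and len(running) < max_batch:
            running.append(waiting.popleft())
        # One decode step produces one token for every running request.
        running = [r - 1 for r in running]
        steps += 1
        # Finished requests leave the batch immediately.
        running = [r for r in running if r > 0]
    return steps


if __name__ == "__main__":
    # Hypothetical workload: output lengths (in tokens) of six requests.
    lengths = [100, 5, 5, 5, 100, 5]
    print("static:", static_batching_steps(lengths, max_batch=2))
    print("continuous:", continuous_batching_steps(lengths, max_batch=2))
```

With this hypothetical workload, static batching needs 205 steps while continuous batching needs 115, because the short requests no longer wait behind the 100-token ones.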
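Prefix caching can be pictured as a lookup table from token prefixes to KV-cache blocks. The Python sketch below is a toy model under stated assumptions, not vLLM's implementation: `BLOCK_SIZE`, `PrefixCache`, and `allocate` are hypothetical names, it caches only full blocks, and it keys blocks by the exact token prefix rather than by a hash. A block is reused only when every token before and inside it matches, because the keys and values of a token depend on everything that precedes it.

```python
BLOCK_SIZE = 4  # tokens per KV-cache block (hypothetical; real block sizes differ)


class PrefixCache:
    """Toy prefix cache: reuses a block when the entire token prefix up to it matches."""

    def __init__(self) -> None:
        # Keyed by the exact token prefix ending at each block boundary.
        # (A real system would hash the prefix instead of storing it.)
        self.blocks: dict[tuple[int, ...], int] = {}
        self.next_block_id = 0

    def allocate(self, tokens: list[int]) -> tuple[list[int], int]:
        """Return (block ids for the prompt's full blocks, number of reused blocks)."""
        block_ids: list[int] = []
        reused = 0
        # Only full blocks are considered; a trailing partial block is not cached.
        for end in range(BLOCK_SIZE, len(tokens) + 1, BLOCK_SIZE):
            key = tuple(tokens[:end])
            if key in self.blocks:
                # KV values for this block were already computed: prefill can skip it.
                reused += 1
            else:
                self.blocks[key] = self.next_block_id
                self.next_block_id += 1
            block_ids.append(self.blocks[key])
        return block_ids, reused


if __name__ == "__main__":
    system_prompt = [1, 2, 3, 4, 5, 6, 7, 8]  # shared prefix: two full blocks
    cache = PrefixCache()
    print(cache.allocate(system_prompt + [10, 11, 12, 13]))
    print(cache.allocate(system_prompt + [20, 21, 22, 23]))
```

The second request reuses the two blocks that hold the shared system prompt and computes only its last block; the same tokens at a different position would not match.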

How does vLLM work?

vLLM utilizes several key techniques to achieve high performance:

- PagedAttention: Manages attention key and value memory efficiently by dividing it into pages, similar to virtual memory management in operating systems.

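- Continuous Batching: Schedules work at the level of individual decoding iterations. Finished requests leave the batch and new requests join at every step instead of waiting for the whole batch to complete, which keeps the GPU busy.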

- CUDA/HIP Graphs: Captures the sequence of GPU kernel launches for a forward pass once and replays it, reducing launch overhead and improving performance.

- Quantization: Reduces the memory footprint of the model by using lower-precision data types.

- Optimized CUDA Kernels: Leverages highly optimized CUDA kernels for critical operations like attention and matrix multiplication.

- Speculative Decoding: Uses a cheaper draft mechanism to propose several future tokens, which the main model verifies in one forward pass, so multiple tokens can be accepted per step.
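The block-table idea behind PagedAttention can be sketched in a few lines of Python. This is a toy bookkeeping model, not vLLM's implementation: the class and method names are hypothetical, and it tracks only which physical block holds each token's keys and values, not the tensors themselves. Like a page table, each sequence maps logical blocks to physical blocks that can sit anywhere in the pool, and a new block is allocated only when the previous one is full.

```python
class BlockAllocator:
    """Toy KV-cache manager in the spirit of PagedAttention (not vLLM's code)."""

    def __init__(self, num_blocks: int, block_size: int) -> None:
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))  # pool of physical block ids
        self.block_tables: dict[str, list[int]] = {}  # seq id -> physical blocks
        self.seq_lens: dict[str, int] = {}  # seq id -> number of tokens stored

    def append_token(self, seq_id: str) -> None:
        """Reserve KV space for one more token, allocating a block only when needed."""
        table = self.block_tables.setdefault(seq_id, [])
        length = self.seq_lens.get(seq_id, 0)
        if length % self.block_size == 0:  # no block yet, or the last block is full
            if not self.free_blocks:
                raise MemoryError("out of KV-cache blocks")
            table.append(self.free_blocks.pop())
        self.seq_lens[seq_id] = length + 1

    def locate(self, seq_id: str, position: int) -> tuple[int, int]:
        """Map a token position to (physical block id, offset within that block)."""
        if not 0 <= position < self.seq_lens[seq_id]:
            raise IndexError(f"position {position} out of range for {seq_id}")
        logical_block, offset = divmod(position, self.block_size)
        return self.block_tables[seq_id][logical_block], offset

    def free(self, seq_id: str) -> None:
        """Return a finished sequence's blocks to the free pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.seq_lens.pop(seq_id, None)


if __name__ == "__main__":
    alloc = BlockAllocator(num_blocks=8, block_size=4)
    for _ in range(5):  # two sequences growing in lockstep
        alloc.append_token("a")
        alloc.append_token("b")
    print(alloc.block_tables)     # blocks of "a" and "b" are interleaved, not contiguous
    print(alloc.locate("a", 4))   # fifth token of "a": first slot of its second block
    alloc.free("a")
```

Because memory is reserved one block at a time, a sequence wastes at most `block_size - 1` slots, and blocks released by a finished sequence can be handed to any other sequence right away.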
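As a rough illustration of how lower-precision data types shrink the memory footprint, the Python sketch below applies simple symmetric round-to-nearest INT8 quantization to a short list of weights and estimates weight-only memory for a hypothetical 7-billion-parameter model. This generic scheme is chosen for illustration only; methods such as GPTQ and AWQ are considerably more sophisticated, and the estimate ignores the KV cache and activations. The function names are hypothetical.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor INT8 quantization: w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer level and clamp to the signed 8-bit range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from INT8 values."""
    return [scale * v for v in q]


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory for the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    w = [0.42, -1.27, 0.013, 0.9]
    q, scale = quantize_int8(w)
    print(q, [round(x, 3) for x in dequantize(q, scale)])
    for bits in (16, 8, 4):
        print(f"{bits}-bit weights for 7B params: {weight_memory_gb(7e9, bits):.1f} GB")
```

For 7 billion parameters this gives 14.0 GB at 16 bits, 7.0 GB at 8 bits, and 3.5 GB at 4 bits, while each reconstructed weight stays within half a quantization step of the original.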
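The draft-then-verify loop of speculative decoding can be sketched in Python with two toy "models". Everything here is hypothetical: the models are simple functions from a token list to the next token, and only greedy decoding is shown, where a proposed token is accepted exactly when it matches the target model's own choice. Real systems that sample use a rejection-sampling acceptance rule instead, which this sketch does not implement.

```python
from typing import Callable

# A toy greedy "model": given the context, return the next token id.
NextToken = Callable[[list[int]], int]


def speculative_step(context: list[int], draft: NextToken, target: NextToken, k: int) -> list[int]:
    """One draft-then-verify round; returns the tokens accepted in this round."""
    # 1) The cheap draft model proposes k tokens autoregressively.
    proposal: list[int] = []
    ctx = list(context)
    for _ in range(k):
        token = draft(ctx)
        proposal.append(token)
        ctx.append(token)
    # 2) The target model checks every proposed position. A real engine scores all
    #    k + 1 positions in ONE forward pass; this loop only emulates that pass.
    accepted: list[int] = []
    ctx = list(context)
    for token in proposal:
        expected = target(ctx)
        if expected != token:
            accepted.append(expected)  # replace the first wrong guess with the target's token
            return accepted
        accepted.append(token)
        ctx.append(token)
    accepted.append(target(ctx))  # every guess was right: keep one bonus target token
    return accepted


def generate(prompt: list[int], draft: NextToken, target: NextToken,
             k: int, max_new: int) -> tuple[list[int], int]:
    """Greedy speculative decoding; also returns the number of target passes."""
    out = list(prompt)
    target_passes = 0
    while len(out) - len(prompt) < max_new:
        out.extend(speculative_step(out, draft, target, k))
        target_passes += 1
    return out[: len(prompt) + max_new], target_passes


if __name__ == "__main__":
    def target(ctx: list[int]) -> int:  # hypothetical target model
        return (ctx[-1] + 1) % 10

    def draft(ctx: list[int]) -> int:  # hypothetical draft: agrees except after a 5
        return 0 if ctx[-1] == 5 else (ctx[-1] + 1) % 10

    tokens, passes = generate([0], draft, target, k=3, max_new=9)
    print(tokens, passes)
```

Here the nine new tokens take 3 target passes instead of 9, and the output is identical to what greedy decoding with the target model alone would produce; a draft that is always wrong degrades to one token per pass rather than producing different text.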

How to Use vLLM?

Installation:

pip install vllm

Quickstart:

Refer to the official documentation for a quickstart guide.

Why Choose vLLM?

vLLM offers several compelling advantages:

- Speed: Achieve state-of-the-art serving throughput.

- Efficiency: Optimize memory usage with PagedAttention.

- Flexibility: Seamlessly integrate with Hugging Face models and various hardware platforms.

- Ease of Use: Simple installation and setup.

Who is vLLM for?

vLLM is ideal for:

- Researchers and developers working with large language models.

- Organizations deploying LLMs in production environments.

- Anyone seeking to optimize the performance and efficiency of LLM inference.

Supported Models

vLLM supports most popular open-source models on Hugging Face, including:

- Transformer-like LLMs (e.g., Llama)

- Mixture-of-Experts LLMs (e.g., Mixtral, DeepSeek-V2 and DeepSeek-V3)

- Embedding Models (e.g., E5-Mistral)

- Multi-modal LLMs (e.g., LLaVA)

The full list of supported models is available in the official vLLM documentation.

Practical Value

vLLM provides significant practical value by:

- Reducing the cost of LLM inference.

- Enabling real-time applications powered by LLMs.

- Democratizing access to LLM technology.

Conclusion

vLLM is a powerful tool for anyone working with large language models. Its speed, efficiency, and flexibility make it an excellent choice for both research and production deployments. Whether you're a researcher experimenting with new models or an organization deploying LLMs at scale, vLLM can help you achieve your goals.

By using vLLM, you can achieve:

- Faster Inference: Serve more requests with less latency.

- Lower Costs: Reduce hardware requirements and energy consumption.

- Greater Scalability: Easily scale your LLM deployments to meet growing demand.

With its innovative features and broad compatibility, vLLM is poised to become a leading platform for LLM inference and serving. Consider vLLM if you are looking for high-throughput LLM serving or memory-efficient LLM inference.
