MCP Server Application Scenarios Evaluation and Technical Selection Guide

In building agents driven by large language models (LLMs), a core challenge is giving them secure, controllable access to external tools and data. The Model Context Protocol (MCP), introduced by Anthropic, is an open protocol that standardizes how AI systems interact with external tools, services, and data sources through a defined client-server architecture. This abstraction reduces the integration complexity and the security-boundary problems of ad hoc API integrations. MCP is not a simple replacement for Function Calling; rather, it provides a structured protocol layer for multi-tool, multi-source, and cross-system environments.

In 2025–2026, as AI Agents move from experimental exploration to enterprise-level deployment, the MCP Server is becoming critical infrastructure for tool and data governance.

This article distills lessons from real-world projects deploying enterprise AI Agents and MCP Servers. It is intended as a reference for companies and technical teams planning to implement MCP Servers in 2026 and beyond.

Target Audience:

- Technology enthusiasts and entry-level learners

- Enterprise decision-makers and business department heads

- General users interested in the future trends of AI

Table of Contents:

- Core Application Scenarios for MCP Server

- Recommended Industry Scenarios

- Unsuitable Scenarios and Limitations

- Technical Selection and Evaluation Framework

- Selection Strategy

- Risk Management and Mitigation Strategies

- Case Studies

- Challenges and Solutions during Implementation

- Investment Return Analysis Framework

- Implementation Roadmap Suggestions

- Future Trends in MCP

- Final Recommendation for MCP Selection

- Frequently Asked Questions (FAQ)

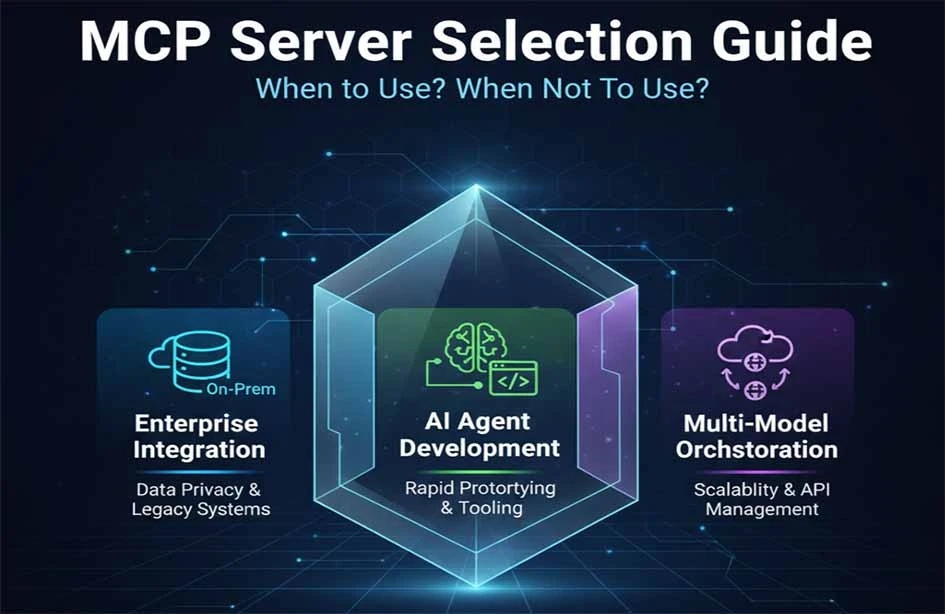

Core Application Scenarios for MCP Server

An MCP Server is not a typical application server. In practice, it usually assumes the role of a control plane. Its typical application scenarios include the following.

Multi-Source Data Integration

When your AI application needs to retrieve information from multiple heterogeneous systems (such as internal databases, CRM, ERP, and third-party APIs), writing separate integration code for each source is tedious and fragile. An MCP Server can act as a unified access layer, encapsulating data and operations from different sources as standardized Tools and Resources. AI agents only need to speak MCP and do not have to care whether the underlying source is a SQL database or a REST API, which simplifies integration in complex data environments.
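The unified access layer idea can be illustrated with a small, framework-free Python sketch. It deliberately does not use any MCP SDK: `ToolRegistry`, the tool names, and the stubbed third-party API are hypothetical, and an in-memory SQLite database stands in for an internal system. What it shows is that both backends are reached through the same call shape (a tool name plus a dict of arguments).

```python
import sqlite3
from dataclasses import dataclass, field
from typing import Any, Callable

Handler = Callable[[dict[str, Any]], dict[str, Any]]


@dataclass
class ToolRegistry:
    """Hypothetical unified access layer: every backend registers named tools."""
    tools: dict[str, Handler] = field(default_factory=dict)

    def register(self, name: str, handler: Handler) -> None:
        if name in self.tools:
            raise ValueError(f"duplicate tool name: {name}")
        self.tools[name] = handler

    def call(self, name: str, arguments: dict[str, Any]) -> dict[str, Any]:
        # The caller never sees which backend actually served the request.
        return self.tools[name](arguments)


# Backend 1: an in-memory SQL database standing in for an internal DB.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, tier TEXT)")
db.execute("INSERT INTO customers VALUES (1, 'Acme', 'gold')")


def get_customer(args: dict[str, Any]) -> dict[str, Any]:
    row = db.execute(
        "SELECT name, tier FROM customers WHERE id = ?", (args["customer_id"],)
    ).fetchone()
    return {"name": row[0], "tier": row[1]} if row else {}


# Backend 2: a stub standing in for a third-party REST API (hypothetical data).
def get_open_tickets(args: dict[str, Any]) -> dict[str, Any]:
    fake_api_response = {1: [{"ticket": "T-17", "status": "open"}]}
    return {"tickets": fake_api_response.get(args["customer_id"], [])}


registry = ToolRegistry()
registry.register("get_customer", get_customer)
registry.register("get_open_tickets", get_open_tickets)

print(registry.call("get_customer", {"customer_id": 1}))
print(registry.call("get_open_tickets", {"customer_id": 1}))
```

In a real MCP Server, the registry role is played by the SDK's tool registration, and the uniform call shape is the `tools/call` request.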

Smart Assistants and AI Agent Platforms

In building enterprise AI assistant platforms or complex Multi-Agent systems, the core requirements are dynamic discovery and secure invocation of tools. The MCP Server fits this role well:

- Tool Discovery: On connecting, the agent's MCP client can request the list of available tools from the MCP Server (the `tools/list` method), including each tool's description and input schema, enabling plug-and-play functionality.

- Context Injection: Beyond tool calls, MCP supports "Resources" to inject structured data (such as user profiles or project documents) as context into the model, either at the start of a session or on-demand, enhancing personalization.

- Permission Boundaries: Each MCP Server can independently configure authentication and authorization logic, ensuring that agents only access permitted data and operations. This is cleaner and more secure than hardcoding permission checks within the agent's logic.
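Tool discovery and permission boundaries can be combined in one place on the server side. The Python sketch below handles two MCP methods, `tools/list` and `tools/call`, as JSON-RPC 2.0 messages without any SDK. The tool names, the `ALLOWED` role policy, and the assumption that the caller's role arrives from transport-level authentication are all hypothetical; reporting a denied call as a tool result with `isError: true` (rather than a protocol error) is a design choice made here, not a requirement.

```python
import json
from typing import Any

# Tool catalogue in the shape MCP uses for tools/list results (name, description, inputSchema).
TOOLS = [
    {
        "name": "get_leave_balance",
        "description": "Return the remaining annual leave days for the calling employee.",
        "inputSchema": {"type": "object", "properties": {}},
    },
    {
        "name": "approve_leave",
        "description": "Approve a pending leave request by ID. Managers only.",
        "inputSchema": {
            "type": "object",
            "properties": {"request_id": {"type": "string"}},
            "required": ["request_id"],
        },
    },
]

# Hypothetical server-side policy: which roles may discover and call which tools.
ALLOWED = {"employee": {"get_leave_balance"}, "manager": {"get_leave_balance", "approve_leave"}}


def handle(raw: str, role: str) -> str:
    """Handle one JSON-RPC 2.0 request; `role` is assumed to come from transport-level auth."""
    req = json.loads(raw)
    allowed = ALLOWED.get(role, set())
    if req["method"] == "tools/list":
        # Discovery: advertise only the tools this caller is allowed to use.
        result: dict[str, Any] = {"tools": [t for t in TOOLS if t["name"] in allowed]}
    elif req["method"] == "tools/call":
        name = req["params"]["name"]
        ok = name in allowed
        text = f"{name} executed" if ok else f"not permitted: {name}"  # real work omitted
        result = {"content": [{"type": "text", "text": text}], "isError": not ok}
    else:
        error = {"code": -32601, "message": "Method not found"}  # standard JSON-RPC code
        return json.dumps({"jsonrpc": "2.0", "id": req["id"], "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


reply = json.loads(handle('{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}', role="employee"))
print([t["name"] for t in reply["result"]["tools"]])
```

Filtering at discovery time means the model never sees tools it cannot use, while the check in `tools/call` still blocks a call to a tool name the model guessed.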

Automated Operations and Real-Time Analysis

For automated operations that require decisions based on real-time data (such as monitoring metrics, logs, and business dashboards), an MCP Server can encapsulate analytical queries, report generation, or alert triggers as tools. Operations staff or automated agents can then trigger these workflows with natural-language commands instead of writing queries and assembling reports by hand.
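As a minimal sketch of "an analytical query plus an alert trigger as one tool", the hypothetical handler below summarizes latency samples with a nearest-rank 95th percentile and returns whether a threshold was breached. The function name, the percentile method, and the threshold parameter are assumptions for illustration; a real tool would pull the samples from your monitoring system.

```python
from typing import Any


def latency_report(samples_ms: list[float], threshold_ms: float) -> dict[str, Any]:
    """Hypothetical ops tool: summarise latency samples and decide whether to alert."""
    if not samples_ms:
        return {"count": 0, "p95_ms": None, "alert": False}
    ordered = sorted(samples_ms)
    n = len(ordered)
    # Nearest-rank 95th percentile; integer ceil avoids floating-point rounding surprises.
    rank = (95 * n + 99) // 100
    p95 = ordered[rank - 1]
    return {"count": n, "p95_ms": p95, "alert": p95 > threshold_ms}


print(latency_report([12.0, 15.0, 11.0, 240.0, 14.0], threshold_ms=200.0))
```

Returning a compact, structured summary rather than raw log lines keeps the model's context small and lets the agent decide on follow-up actions from a single boolean.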

Why MCP is Suited for the "Tool / Data Governance Layer"

Defining the position of the MCP Server is crucial: it is part of the Agent architecture, not the Agent itself.

- AI Agent: Acts as the "brain," responsible for understanding intent, planning, deciding when and how to call tools, and processing results.

- MCP Server: Acts as the provider and manager of "hands" and "senses," responsible for securely exposing a well-defined set of tools and data sources.

This separation provides architectural clarity: changes in business systems only require updates to the corresponding MCP Server implementation, rather than modifying code across multiple agents. Security policies, audit logs, and access controls can also be centralized at this layer. MCP is therefore best used as the interface through which Agents reach tools and data during planning and orchestration, not as a simple function-call wrapper or a real-time stream processing engine.

Recommended Industry Scenarios

Enterprise Internal AI Assistant Platforms

Many enterprises aim to build a unified internal assistant capable of handling diverse tasks. The MCP Server serves as the access and permission isolation layer, safely exposing departmental systems to the AI.

- HR Scenarios: Encapsulate HR systems to allow employees to check leave balances, submit expense reports, or query company policies through chat without the AI having direct access to the HR database.

- Customer Service Scenarios: Integrate CRM, order systems, and knowledge bases. Customer service AI can securely access these via the MCP Server to query order history, process returns, or find solutions in real-time, improving efficiency and consistency.

- Data Analysis: Encapsulate the query capabilities of data warehouses or BI tools (like Tableau or Looker) into tools, allowing business users to generate reports or perform ad-hoc queries using natural language.
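For the data-analysis case, one common safeguard is to expose named metrics and dimensions rather than free-form SQL, so a natural-language request can never become an arbitrary query. The sketch below assumes a hypothetical `sales` table and hypothetical `METRICS`/`DIMENSIONS` allowlists, with SQLite standing in for the data warehouse; SQL is assembled only from allowlisted identifiers.

```python
import sqlite3
from typing import Any

# Hypothetical semantic layer: the only metrics and dimensions the tool exposes.
METRICS = {"revenue": "SUM(amount)", "orders": "COUNT(*)"}
DIMENSIONS = {"region", "month"}


def query_metric(conn: sqlite3.Connection, metric: str, group_by: str) -> list[tuple[Any, ...]]:
    """Build SQL only from allowlisted identifiers; reject anything else."""
    if metric not in METRICS or group_by not in DIMENSIONS:
        raise ValueError(f"unsupported metric/dimension: {metric}/{group_by}")
    sql = f"SELECT {group_by}, {METRICS[metric]} FROM sales GROUP BY {group_by} ORDER BY {group_by}"
    return conn.execute(sql).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, month TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("EU", "2025-01", 120.0), ("EU", "2025-02", 80.0), ("NA", "2025-01", 200.0)],
)
print(query_metric(conn, "revenue", "region"))
```

The model chooses among a small set of names that the tool's input schema can enumerate, which also makes its choices easier to audit.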

AI Integration for SaaS Products

SaaS providers can offer secure AI extension capabilities to their customers via MCP Servers. For instance, a project management vendor could release an official MCP Server that lets a customer's AI assistant read project statuses or create tasks without being given a full API key. In effect, the server is a protocol adaptation layer that maps the vendor's private API onto standard MCP.

Digital Transformation for Traditional Enterprises

Traditional enterprises often possess numerous Legacy Systems. Directly modernizing these systems is costly. Dedicated MCP Servers can be developed for key legacy systems to act as a protocol adaptation layer, making their capabilities accessible to modern AI applications and enabling incremental intelligent upgrades.
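Much of the work in a legacy adaptation layer is translating old formats into structured results. The sketch below assumes a hypothetical fixed-width inventory export (10-character part number, 20-character description, 6-digit zero-padded quantity) and shows a tool handler that turns it into JSON-friendly dictionaries. The layout, names, and data are illustrative, not taken from any real system.

```python
from typing import Any

# Hypothetical fixed-width layout of a legacy inventory record:
# columns 0-9 part number, 10-29 description, 30-35 zero-padded quantity.
LAYOUT = [("part_no", 0, 10), ("description", 10, 30), ("quantity", 30, 36)]


def parse_legacy_record(line: str) -> dict[str, Any]:
    """Translate one legacy record into the shape an MCP tool would return."""
    record: dict[str, Any] = {name: line[start:end].strip() for name, start, end in LAYOUT}
    record["quantity"] = int(record["quantity"])
    return record


def inventory_lookup_tool(raw_lines: list[str], part_no: str) -> dict[str, Any]:
    # raw_lines stands in for output fetched from the legacy system (e.g., a batch export).
    for line in raw_lines:
        rec = parse_legacy_record(line)
        if rec["part_no"] == part_no:
            return {"found": True, **rec}
    return {"found": False, "part_no": part_no}


export = [
    f"{'P-1001':<10}{'Hydraulic pump':<20}{42:06d}",
    f"{'P-2002':<10}{'Seal kit':<20}{7:06d}",
]
print(inventory_lookup_tool(export, "P-2002"))
```

The legacy system itself stays untouched; only the adapter needs to know its format.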

Intelligent Upgrades for Development Toolchains

DevOps teams can build MCP Servers to integrate Git, CI/CD pipelines, error monitoring systems (like Sentry), and infrastructure management tools. This allows developers to perform code reviews, deploy builds, or query logs through an AI assistant, which interacts with complex toolchains via a secure, standardized MCP interface.

Unsuitable Scenarios and Limitations

Simple Static Content Access

If your requirement is merely to let an AI read unchanging, public documents or webpages, using Retrieval-Augmented Generation (RAG) to embed them into a knowledge base is a simpler and more direct solution that avoids the complexity of the MCP framework.

Single API Integration

If an agent only needs to interact with one external API and lacks requirements for dynamic discovery, multi-source integration, or complex permission management, calling the API directly within the agent code is more lightweight than maintaining an MCP Server.

Scenarios with Extreme Real-Time Requirements

Current MCP implementations are primarily based on a request-response pattern, and a single tool call may pass through several hops (Agent → MCP Client → MCP Server → Target System). For trading systems or hardware control loops with strict sub-second or millisecond-level latency budgets, this overhead may be unacceptable.
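A quick latency budget makes this concrete. The per-hop numbers below are purely hypothetical one-way latencies (measure your own); the sketch simply doubles each hop for request and response and multiplies by the number of sequential tool calls, ignoring model inference time entirely.

```python
# Hypothetical one-way latencies in milliseconds; replace with your own measurements.
HOPS_MS = {
    "agent -> MCP client (in-process)": 0.1,
    "MCP client -> MCP server (network + JSON-RPC encode/decode)": 4.0,
    "MCP server -> target system": 6.0,
}


def round_trip_ms(hops: dict[str, float], calls: int = 1) -> float:
    # Each request crosses every hop twice: once for the request, once for the response.
    return 2 * sum(hops.values()) * calls


def fits_budget(budget_ms: float, hops: dict[str, float], calls: int = 1) -> bool:
    return round_trip_ms(hops, calls) <= budget_ms


print(round_trip_ms(HOPS_MS))
print(fits_budget(1.0, HOPS_MS), fits_budget(500.0, HOPS_MS, calls=3))
```

Under these assumed figures, one tool call costs about 20 ms: negligible for a chat assistant, but more than an order of magnitude over a 1 ms control-loop budget.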

Hardware-Dependent Applications

Controlling lab equipment, industrial robots, or IoT edge devices typically requires specialized drivers and ultra-low latency protocols. While an MCP Server can act as a high-level command orchestration layer, underlying control should rely on dedicated real-time systems. Therefore, IoT real-time control systems should prioritize specialized protocols over MCP.

Systems with Mature Existing Integration Solutions

If an enterprise already has a stable, efficient SOA (Service-Oriented Architecture) or internal API gateway, and AI agents can work securely through these existing interfaces, introducing MCP may not add extra value unless the goal is to unify under a standardized AI tool protocol.

Lessons from Failed Cases

Attempting to use an MCP Server as a replacement for a core business message bus or stream processing pipeline (like Kafka or Flink) usually leads to failure. MCP is essentially a Control Plane + Protocol Layer used for instruction delivery and result retrieval, and it should not be treated as:

- A Real-Time Stream Processing Engine

- An Ultra-Low Latency RPC Framework

- A Direct Hardware Control System

Confusing its role leads to improper system design.

Technical Selection and Evaluation Framework

Evaluation Dimension Matrix

Self-Built vs. Managed Cloud Service Decision Matrix

| Consideration | Self-Built MCP Server | Managed Cloud Service |
|---|---|---|
| Control | High. Full control over code, deployment, network, and security. | Medium-Low. Dependent on provider features, SLA, and roadmap. |
| Speed to Market | Slow. Requires development, testing, deployment, and O&M. | Fast. Register and use; focus on business logic encapsulation. |
| O&M Burden | High. Responsible for servers, monitoring, scaling, and security patches. | Low. Infrastructure maintenance handled by the provider. |
| Cost | Variable. High upfront dev costs; ongoing costs are mainly human resources. | Clear. Pay-as-you-go based on usage (e.g., API calls). |
| Customization | High. Deeply customize protocol extensions, integration, and governance. | Low. Usually limited to provider-defined configuration options. |
| Compliance | High. Data stays in-house, meeting strict residency requirements. | Needs Assessment. Must verify provider certifications (e.g., HIPAA, SOC2). |

Open Source vs. Commercial Solutions

Open Source (e.g., official reference implementations):

- Pros: Transparent, auditable, free, and customizable. Driven by community innovation.

- Cons: Requires self-integration, maintenance, and security management. Enterprise features such as advanced monitoring or GUI management may be missing.

Commercial/Cloud Solutions:

- Pros: Out-of-the-box with SLA guarantees, professional support, GUI management, and security hardening. Often integrates better with the provider's other AI services.

- Cons: Ongoing costs, risk of vendor lock-in, and limited customization flexibility.

Technical Stack Compatibility

Evaluate how well a candidate solution fits your existing stack:

- Programming Languages: Official MCP SDKs are available for TypeScript and Python, as well as for other languages including Java, Kotlin, C#, and Go. Choose the language your team knows best to reduce maintenance costs.

- Deployment Environment: Does it support containerization (Docker/K8s)? Can it run on existing cloud platforms or on-premises servers?

- Dependency Management: Do the introduced libraries conflict with your current system versions?

Ecosystem Support

- Community Solutions: Active developer communities (e.g., GitHub) contribute various connectors and libraries, accelerating integration with common systems like Slack, PostgreSQL, and Salesforce. However, quality and maintenance status vary.

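- Official Toolchains: The MCP project maintains official SDKs and reference server implementations, and major AI vendors such as OpenAI have added MCP support to their own agent tooling. Official components offer stronger compatibility and stability, making them a safe starting point for production apps.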

Trade-offs in the Decision Process

Decision factors should be based on:

- Team Capability: Do you have the dev and O&M resources to build and maintain a custom solution?

- Security Requirements: Does data sensitivity mandate on-premises deployment and total control?

- Lifecycle: Is this a short-term experimental project or long-term core infrastructure? Long-term projects justify the investment in controllable, scalable self-built solutions.

Note: The core of technical selection is matching business needs with team reality, rather than chasing technical "perfection" or "novelty."

Selection Strategy

Mature Implementations vs. Custom Builds

- Choose Mature Implementations: When your core goal is rapid validation of business scenarios, you lack dedicated dev resources, or high-quality open-source connectors already exist for your needs. Prioritize official SDKs and community-verified solutions.

- Build Custom: When you have unique business logic, strict performance or security requirements, need deep customization of protocol behavior, or when existing solutions cannot meet integration needs.

Local Execution vs. Cloud-Hosted MCP Server

- Local/Private Execution: Best for highly regulated industries (finance, healthcare, government) or enterprises handling sensitive intellectual property. Ensures data never leaves the domain.

- Cloud-Hosted: Suitable for most SaaS applications, internet companies, and teams looking to minimize the O&M burden. Leverages cloud elasticity and global networking.

Security and Compliance Framework

When evaluating options, you must assess:

- Authentication & Authorization: Does the solution support OAuth (which MCP's authorization specification builds on for HTTP-based transports), API keys, and Role-Based Access Control (RBAC)?

- Audit & Logging: Are all tool calls and resource accesses recorded for compliance audits?

- Data Encryption: Is data encrypted both in transit and at rest?

- Compliance Certification: Does the provider or software hold relevant industry certifications (e.g., ISO 27001, SOC 2), and does it support regulatory obligations such as GDPR?
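For the audit and logging question, a server you build yourself can wrap every tool handler so that each call, successful or not, leaves a record. The Python sketch below uses a decorator and an in-memory list standing in for an append-only audit store; `audited`, `AUDIT_LOG`, and `get_invoice` are hypothetical names. In production you would also redact sensitive arguments before logging them.

```python
import functools
import json
import time
from typing import Any, Callable

AUDIT_LOG: list[str] = []  # stands in for an append-only audit store (hypothetical)


def audited(tool_name: str) -> Callable:
    """Decorator recording every call of a tool handler, including failures."""
    def decorator(func: Callable[..., Any]) -> Callable[..., Any]:
        @functools.wraps(func)
        def wrapper(caller: str, **arguments: Any) -> Any:
            entry: dict[str, Any] = {
                "ts": time.time(), "tool": tool_name, "caller": caller, "arguments": arguments,
            }
            try:
                result = func(**arguments)
                entry["status"] = "ok"
                return result
            except Exception as exc:
                entry["status"] = f"error: {type(exc).__name__}"
                raise
            finally:
                # Written even when the handler raises, so failed attempts are auditable too.
                AUDIT_LOG.append(json.dumps(entry, default=str))
        return wrapper
    return decorator


@audited("get_invoice")
def get_invoice(invoice_id: str) -> dict[str, Any]:
    if not invoice_id.startswith("INV-"):
        raise ValueError("bad invoice id")
    return {"invoice_id": invoice_id, "amount": 99.5}


get_invoice("alice", invoice_id="INV-1")
print(AUDIT_LOG[-1])
```

Because the caller identity is passed in by the wrapper rather than read from the tool arguments, the audit trail records who made the call independently of anything the model supplies.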

Risk Management and Mitigation Strategies

Technical Risk Identification

- Protocol Immaturity: MCP is still evolving, and future versions may introduce breaking changes. Strategy: Follow official announcements and design isolation layers to avoid tight coupling with specific protocol implementations.

- Performance Bottlenecks: Poorly implemented MCP Servers can become latency bottlenecks. Strategy: Conduct load testing, implement caching, and optimize response speeds for high-frequency tools.

- Single Point of Failure: A crash in a core MCP Server could disable all dependent AI functions. Strategy: Design high-availability architectures with multi-instance deployment, load balancing, and failover mechanisms.
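The caching strategy mentioned for high-frequency tools can be as simple as a time-to-live cache in front of the backend call. This is a minimal, single-threaded sketch; the class name, the TTL value, and the injectable `clock` (added so expiry can be tested) are assumptions, and a multi-instance deployment would typically use a shared cache instead.

```python
import time
from collections.abc import Hashable
from typing import Any, Callable


class TTLCache:
    """Minimal time-based cache for slow-changing tool results (not thread-safe)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: dict[Hashable, tuple[float, Any]] = {}

    def get_or_compute(self, key: Hashable, compute: Callable[[], Any]) -> Any:
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]          # fresh entry: skip the backend call
        value = compute()          # miss or expired: call the backend
        self._store[key] = (now, value)
        return value


scores = TTLCache(ttl_seconds=300)
print(scores.get_or_compute(("credit_score", "user-42"), lambda: 712))
```

Choose the TTL per tool: data that changes slowly (such as a credit score) tolerates minutes, while anything used for real-time decisions may not tolerate caching at all.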

Business Continuity Measures

- Phased Deployment: Enable MCP integration for non-critical business functions first, then expand as stability is proven.

- Circuit Breakers & Degradation: Implement circuit breaker patterns in the MCP client. If the Server is unavailable, the AI agent should degrade gracefully, providing basic service or clear user notifications.

- Version Management & Rollback: Use strict version control for MCP Server updates and maintain rapid rollback procedures.
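The circuit-breaker measure can be sketched on the client side as follows. After `max_failures` consecutive failures the breaker opens and returns the fallback without contacting the server; after `reset_after` seconds it lets one trial call through (half-open), closing on success and reopening on failure. The class and parameter names are hypothetical, `tool` and `fallback` are plain callables standing in for an MCP tool call and a degraded response, and this version is not thread-safe.

```python
import time
from typing import Any, Callable, Optional


class CircuitBreaker:
    """Client-side breaker around tool calls: fail fast and degrade while the server is down."""

    def __init__(self, max_failures: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None  # None means the breaker is closed

    def call(self, tool: Callable[[], Any], fallback: Callable[[], Any]) -> Any:
        if self.opened_at is not None and self.clock() - self.opened_at < self.reset_after:
            return fallback()                  # open: do not hit the server at all
        # Closed, or half-open (reset_after elapsed): let this call through.
        try:
            result = tool()
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.max_failures:
                self.opened_at = self.clock()  # trip, or re-trip after a failed trial
            return fallback()
        self.failures = 0
        self.opened_at = None                  # success closes the breaker
        return result


breaker = CircuitBreaker(max_failures=2, reset_after=10.0)
print(breaker.call(lambda: "order status: shipped", lambda: "Order lookup is temporarily unavailable."))
```

The fallback is where graceful degradation lives: a cached answer, a reduced feature, or a clear message to the user.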

Vendor Risk Management

If choosing a commercial cloud service, develop strategies for service outages, significant price hikes, or service termination. This includes evaluating alternatives regularly, avoiding vendor-specific features, and planning for data migration or architectural switches.

Case Studies

Case 1: Risk Management System Integration for FinTech

Pain Point: A risk control AI needed to query user credit scores (external API), transaction history (internal DB), and blacklists (another internal system) in real-time. Integration was complex and latency-sensitive.

Solution: Developed a single MCP Server as a unified access layer aggregating all three sources, and implemented a caching strategy for high-frequency, slowly changing data such as credit scores.

Results: Integration time for the AI risk model was reduced by 60%. System stability and response speeds improved due to standardized interfaces and caching.

Case 2: Smart Customer Service Upgrade for E-commerce

Pain Point: Customer service AI could not securely access order systems or logistics data, leading to inaccurate answers.

Solution: Built MCP Servers for core systems (orders, logistics) to wrap internal APIs with strict permissions (AI can only query orders for the current user).

Results: The AI successfully resolved most order status inquiries, reducing human intervention and increasing customer service efficiency by 40%.
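The "AI can only query orders for the current user" rule from this case is usually enforced by taking the user's identity from the authenticated session, never from model-supplied arguments. The sketch below illustrates that pattern with a hypothetical in-memory order list and a hypothetical `Session` type; it is not the implementation used in the case.

```python
from dataclasses import dataclass
from typing import Any

# Hypothetical order store; in practice this would be the order system's API.
ORDERS = [
    {"order_id": "A1", "user_id": "u-1", "status": "shipped"},
    {"order_id": "B7", "user_id": "u-2", "status": "processing"},
]


@dataclass(frozen=True)
class Session:
    user_id: str  # set by the server from authentication, never by the model


def get_order_status(session: Session, arguments: dict[str, Any]) -> dict[str, Any]:
    """The model supplies only order_id; ownership is checked against the session."""
    order_id = arguments.get("order_id")
    for order in ORDERS:
        if order["order_id"] == order_id and order["user_id"] == session.user_id:
            return {"order_id": order_id, "status": order["status"]}
    # Same answer for "not found" and "not yours", so nothing leaks about other users.
    return {"error": "order not found"}


# Even if a prompt injection makes the model pass a user_id, it is simply ignored.
print(get_order_status(Session("u-1"), {"order_id": "B7", "user_id": "u-2"}))
```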

Case 3: Data Analysis Platform for Medical Research

Pain Point: Researchers needed to analyze sensitive de-identified medical data, but direct DB access posed massive privacy risks.

Solution: Built an MCP Server providing standardized data query and statistical tools connected to a secure data sandbox, integrated with fine-grained access control based on user roles and projects.

Results: Researchers used the AI assistant to safely explore data via natural language. The system passed audits, achieved HIPAA compliance, and significantly boosted research productivity.

Case 4: Manufacturing Equipment Maintenance and Knowledge Integration

Pain Point: Field engineers had to consult fragmented PDF manuals, historical work orders, and real-time sensor data, making troubleshooting inefficient.

Solution: Built a Manufacturing Execution System (MES) MCP Server integrating document libraries (via RAG), real-time monitoring APIs, work order systems, and ERP inventory modules.

Results: Engineers used natural language to get manuals, real-time parameters, and spare part availability simultaneously. Mean Time to Repair (MTTR) dropped by 35%.

Case 5: Compliance and Market Analysis for Cross-Border E-commerce

Pain Point: Business teams struggled to track sales, regulations, and logistics costs across multiple markets (EU, North America, SE Asia) due to diverse data sources.

Solution: Created multiple dedicated MCP Servers (e.g., "Sales Data Server," "Regulatory Monitor Server") to aggregate and standardize data from authoritative sources.

Results: Staff could generate multi-dimensional market reports rapidly. Market decision cycles were shortened by 50%, and regulatory compliance risks were mitigated.

Challenges and Solutions during Implementation

- Inaccurate Tool Definitions

- Challenge: The `description` of an MCP Tool directly affects whether and how the AI calls it. Vague descriptions lead to wrong or missed calls.

- Solution: Write clear, specific descriptions that state when to use the tool and include examples, then iterate based on AI invocation logs.

- Encapsulating Complex Operations

- Challenge: Wrapping multi-step business processes (e.g., placing an order) into an atomic Tool is difficult because of state management and rollback.

- Solution: Handle complex orchestration inside the MCP Server. The Tool interface should trigger a clear intent (e.g., "Generate Quarterly Report"), while the backend handles sub-steps (query, format, synthesize) and returns the final result or a task handle. This keeps Agent logic simple.

- Error Handling and UX

- Challenge: When a Tool call fails (timeout, permission, etc.), how do you translate technical errors into user-friendly responses while providing diagnostic info for devs?

- Solution: The MCP Server should return structured errors (e.g., `user_input_error`, `system_error`). The AI client captures these to either prompt the user for a correction or log an alert.

- Version Management and Compatibility

- Challenge: Upgrading tools without breaking existing AI clients developed by different teams.

- Solution: Semantic Versioning: Use semver for interface changes. Multi-Version Support: Serve old and new versions side by side for a transition period. Client Adaptation Layer: Place an adaptation layer between the AI and the MCP client to hide call details.

- Performance Monitoring and Cost Control

- Challenge: MCP Server bottlenecks are hard to detect, and tool calls can consume expensive API credits or compute resources.

- Solution: Full Monitoring: Integrate APM tools to track latency, success rates, and resource consumption. Quotas and Rate Limiting: Set frequency and resource quotas for different users or agents. Cost Attribution: Log cost factors (external API hits, tokens) to provide transparency and drive optimization.
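Two of the challenges above, precise tool definitions and structured errors, can be shown together in one Python sketch. The tool definition follows the MCP `name`/`description`/`inputSchema` shape, and the handler returns an MCP-style `tools/call` result with `content` and `isError`. Carrying the article's `user_input_error`/`system_error` categories as a JSON payload inside the text content is an assumption of this sketch, as are the tool and function names; the report generation itself is a placeholder.

```python
import json
from typing import Any

# A tool definition with a specific description and a constrained input schema.
REPORT_TOOL = {
    "name": "generate_quarterly_report",
    "description": (
        "Generate a sales report for one fiscal quarter. Use when the user asks for "
        "quarterly figures, e.g. 'Q2 2025 sales'. Do not use for single orders."
    ),
    "inputSchema": {
        "type": "object",
        "properties": {
            "year": {"type": "integer", "minimum": 2000},
            "quarter": {"type": "integer", "enum": [1, 2, 3, 4]},
        },
        "required": ["year", "quarter"],
    },
}


class UserInputError(Exception):
    """Raised when the arguments are wrong in a way the user can fix."""


def _generate(year: int, quarter: int) -> str:
    # Re-check on the server: clients and models do not always enforce the schema.
    if quarter not in (1, 2, 3, 4):
        raise UserInputError(f"quarter must be 1-4, got {quarter}")
    return f"Report for Q{quarter} {year}"  # placeholder for the real multi-step backend


def call_tool(arguments: dict[str, Any]) -> dict[str, Any]:
    """Return an MCP-style tools/call result; error details go in a JSON text payload."""
    try:
        text = _generate(arguments["year"], arguments["quarter"])
        return {"content": [{"type": "text", "text": text}], "isError": False}
    except (UserInputError, KeyError) as exc:
        kind, msg = "user_input_error", str(exc)
    except Exception:
        kind, msg = "system_error", "internal failure; details logged server-side"
    payload = json.dumps({"error_type": kind, "message": msg})
    return {"content": [{"type": "text", "text": payload}], "isError": True}


print(call_tool({"year": 2025, "quarter": 5}))
```

A `user_input_error` gives the agent something to ask the user about, while a `system_error` hides internals from the user and signals that operators should look at the logs.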

Investment Return Analysis Framework

Direct Cost Savings Calculation

- Development Efficiency: Without MCP, each of M AI apps needs its own integration with each of N data sources, up to M × N integrations. With MCP, you build N servers once and every app reuses them. Estimate the person-days for both approaches; the difference is the direct saving.

- O&M Simplification: Centralized permissions, auditing, and monitoring reduce long-term maintenance complexity compared to scattered implementations.
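The development-efficiency comparison reduces to simple arithmetic: point-to-point effort grows with apps × sources, while a shared MCP layer grows with sources + apps. The function below makes that explicit; all effort figures in the example call are hypothetical inputs, not benchmarks, and the per-app client cost is an assumption of this sketch.

```python
def integration_effort(apps: int, sources: int, days_per_integration: float,
                       days_per_server: float, days_per_client: float) -> dict[str, float]:
    """Compare point-to-point integration effort with a shared MCP layer (illustrative)."""
    without_mcp = apps * sources * days_per_integration           # every app integrates every source
    with_mcp = sources * days_per_server + apps * days_per_client  # build servers once, reuse them
    return {"without_mcp": without_mcp, "with_mcp": with_mcp, "saved": without_mcp - with_mcp}


# Hypothetical inputs: 5 apps, 6 sources, 10 days per direct integration,
# 15 days per MCP server, 2 days to wire each app to an MCP client.
print(integration_effort(5, 6, 10, 15, 2))
```

With these inputs the shared layer costs 100 person-days against 300 for point-to-point integration. With one app and one source the saving turns negative, which matches the earlier point that a single API integration rarely justifies an MCP Server.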

Indirect Benefit Assessment

- Business Agility: Measure the reduction in time-to-market for new AI features.

- Employee Productivity: Evaluate the reduction in task processing time for roles like customer service or data analysis.

- Error Reduction: Compare error rates of AI performing operations via standardized interfaces versus manual operations.

Long-term Strategic Value

- Platform Capability: MCP investment builds a unified "AI Capability Access Layer," preventing repetitive construction and increasing in value as the number of AI projects grows.

- Data Asset Activation: Securely enables more data to be utilized by AI, unlocking the potential value of legacy systems and data warehouses.

Implementation Roadmap Suggestions

Pilot Project Selection Criteria

A pilot should have: 1) Clear business value; 2) 2-3 integrated systems/data sources; 3) Some tolerance for failure; 4) Support from active business stakeholders.

Phased Implementation Plan

- Phase 1: Exploration and Validation (1-2 months): Select a pilot, set up the basic MCP framework, integrate 1-2 core tools, and verify technical feasibility.

- Phase 2: Expansion and Standardization (3-6 months): Develop internal MCP coding standards. Replicate the model to 2-3 other scenarios and build a core tool library.

- Phase 3: Platforming and Promotion (6-12 months): Establish an internal MCP Server registry/discovery center. Promote MCP as the standard for AI integration company-wide.

Key Milestones

- Milestone 1: First MCP Server running stably in production, handling real user requests.

- Milestone 2: Complete documentation of the MCP project lifecycle (design, dev, deploy, monitor).

- Milestone 3: Over 5 different AI applications connected to the same MCP infrastructure.

- Milestone 4: Automated deployment, elastic scaling, and advanced monitoring/alerting for MCP services.

Future Trends in MCP

For teams planning to introduce MCP Servers in 2026, understanding the evolution over the next 12–24 months will help avoid early lock-in to immature solutions.

Protocol Evolution

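Future revisions of the specification, which is versioned by release date rather than by major version numbers, may introduce richer bi-directional streaming for server-push updates and stronger type systems (for example, gRPC/protobuf integration) to improve reliability. This projection is based on current engineering trends.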

Interoperability between MCP and other AI ecosystem standards (such as OpenAI Assistants tool calls or LangChain Tools) is also likely to develop further.

Tools and Platforms Prediction

Low-code/No-code MCP Server platforms may emerge, allowing business users to expose APIs via configuration.

Serverless MCP deployment is likely to become more common, further reducing O&M effort.

Expect Enterprise-grade MCP Management Consoles providing centralized permissions, usage analytics, cost accounting, and compliance reporting.

Growth in Governance Capabilities

As MCP is widely adopted in enterprises, demand for fine-grained access control, tool auditing, and policy engine integration will increase.

Final Recommendation for MCP Selection

For most enterprises, the rational strategy is to start pilot projects using official SDKs or mature open-source frameworks. This ensures compliance with protocol standards and leverages community support. Once business value is proven, decide whether to continue building a custom ecosystem or purchase commercial solutions based on scale, compliance, and customization needs. Always view MCP as a strategic AI infrastructure component rather than just a temporary integration tool.

Frequently Asked Questions (FAQ)

Q1. What is MCP Server and why is it important for AI Agents? MCP Server, or Model Context Protocol Server, acts as a control and integration layer for AI Agents. It standardizes access to multiple data sources, tools, and APIs, enabling secure, context-aware interactions. It is essential because it allows AI Agents to operate safely and efficiently across complex enterprise systems.

Q2. Can MCP Server replace existing APIs or real-time data pipelines? No. MCP Server is designed as a control plane and protocol layer for AI Agents, not as a high-frequency real-time data pipeline or a replacement for existing APIs. It is suitable for orchestrating tool calls and injecting context, but not for ultra-low latency or hardware-dependent tasks. (learn.microsoft.com)

Q3. Should I choose a cloud-hosted MCP Server or build it locally? The choice depends on your organization's requirements:

- Local deployment: Preferred for sensitive data, strict compliance, and full control.

- Cloud-hosted: Suitable for rapid scaling, ease of maintenance, and integrating multiple external services.

Evaluate based on team capability, security, and lifecycle management.

Q4. Which industries benefit most from MCP Server integration? MCP Server is particularly effective in:

- Enterprise AI assistants (HR, customer service, data analytics)

- SaaS product AI integration

- Traditional enterprise digital transformation

- Intelligent developer toolchains

It provides secure API access, context management, and tool orchestration across systems. (airbyte.com)

Q5. What are the main risks of implementing MCP Server and how to mitigate them?

Key risks include:

- Misconfigured permissions or unauthorized tool access

- Integration errors with heterogeneous systems

- Vendor or supply chain dependency

Mitigation strategies involve:

- Conducting thorough security assessments

- Defining clear access and governance policies

- Establishing redundancy and monitoring for business continuity

- Choosing reputable open-source or commercial MCP implementations

MCP Article Series:

- Comprehensive Analysis of MCP Server: The Context and Tool Communication Hub in the AI Agent Era

- What Key Problems Does MCP Server Solve? Why AI Agents Need It

- MCP Server Architecture and Working Principles: From Protocol to Execution Flow

- MCP Server Practical Guide: Building, Testing, and Deploying from 0 to 1

- MCP Server Application Scenarios Evaluation and Technical Selection Guide

About the Author

This content is compiled and published by the NavGood Content Editorial Team. NavGood is a navigation and content platform focused on the AI tool and application ecosystem, tracking the development and implementation of AI Agents, automated workflows, and generative AI.

Disclaimer: This article represents the author's personal understanding and practical experience. It does not represent the official position of any framework, organization, or company, nor does it constitute business, financial, or investment advice. All information is based on public sources and independent research.

References:

[1]: https://modelcontextprotocol.io/docs/getting-started/intro "What is the Model Context Protocol (MCP)?"

[2]: https://platform.openai.com/docs/assistants/migration "Assistants migration guide"

[3]: https://learn.microsoft.com/en-us/fabric/real-time-intelligence/mcp-overview "What is the Fabric RTI MCP Server (preview)?"

[4]: https://arxiv.org/abs/2511.20920 "Securing the Model Context Protocol (MCP)"

[5]: https://github.com/modelcontextprotocol/python-sdk "MCP Python SDK"

[6]: https://atlan.com/know/what-is-atlan-mcp/ "Use Atlan MCP Server for Context-Aware AI Agents & Metadata-Driven Decisions"

[7]: https://docs.oracle.com/en/learn/oci-aiagent-mcp-server/index.html "Build an AI Agent with Multi-Agent Communication Protocol Server for Invoice Resolution"

[8]: https://www.vectara.com/blog/mcp-the-control-plane-of-agentic-ai "MCP: The control plane of Agentic AI"

[9]: https://www.fingent.com/blog/creating-mcp-servers-for-building-ai-agents/ "Creating MCP Servers for Building AI Agents"