What Key Problems Does MCP Server Solve? Why AI Agents Need It

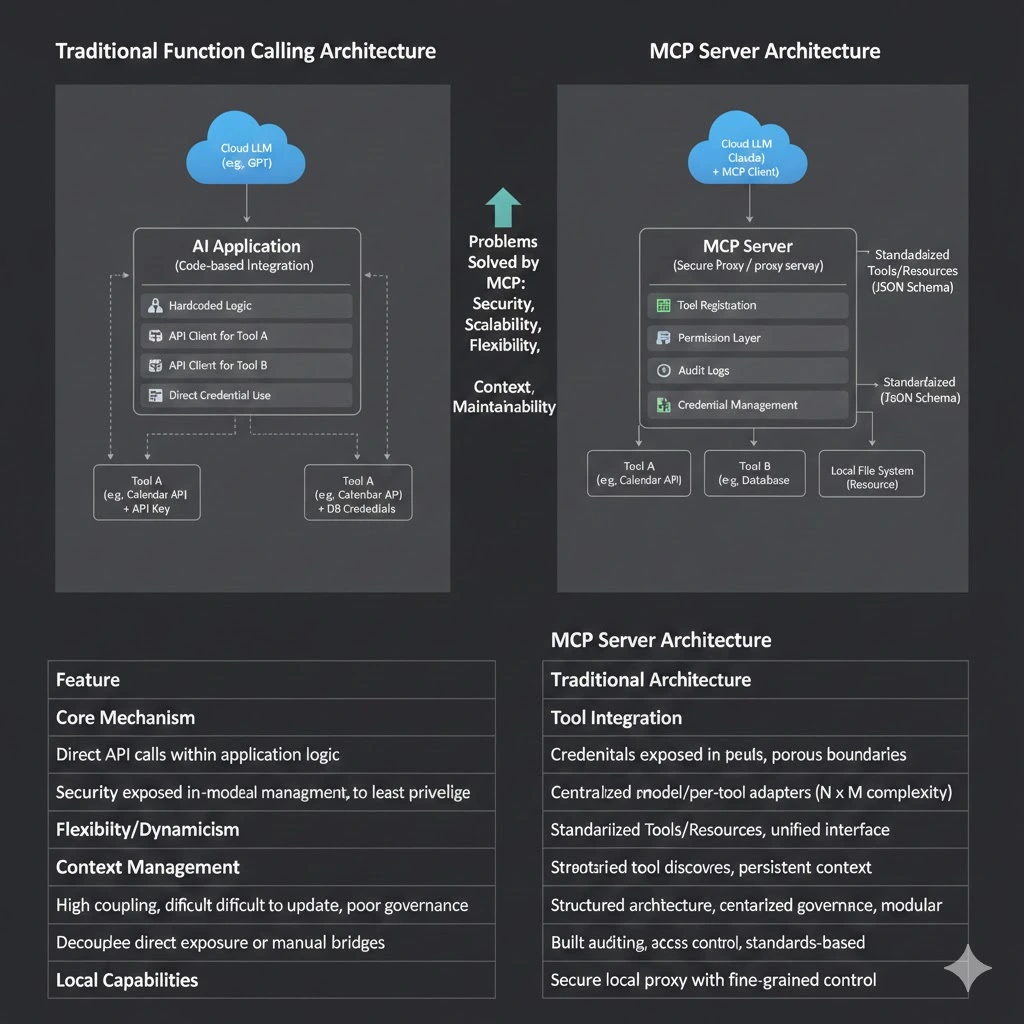

In the early days of AI applications, calling APIs via "Function Calling" was sufficient for simple automation needs. However, as AI evolves from a "conversation tool" into an Agent with autonomous planning and execution capabilities, we have begun to encounter a series of systemic engineering challenges: difficulty in unifying and securely connecting tools, and architectures that struggle to evolve flexibly alongside model iterations.

The root of these problems is the lack of a standardized capability layer designed specifically for how AI models interact with external systems. The Model Context Protocol (MCP), together with the MCP Servers that implement its server side, emerged in this context. MCP defines a standardized way to connect models with external data sources, tools, and services, thereby supporting scalable AI Agent systems.

This article examines the core value that MCP Servers bring and the key problems they solve.

Target Audience:

- Tech enthusiasts and entry-level learners

- Professionals and managers seeking efficiency improvements

- Enterprise decision-makers and business department heads

- General users interested in the future trends of AI

Table of Contents:

- 1. When LLMs Need "Action Capabilities": How to Securely Access the Real World?

- 2. When Tasks Become Open-Ended: Why Hardcoded Workflows Fail?

- 3. When Models Keep Changing: How to Avoid Tool-Model Coupling?

- 4. When Tasks Get Long: Why Does Context Become Fragmented?

- 5. When Capabilities are Local: How to Securely Connect to Cloud Models?

- 6. When Agent Scale Increases: How to Maintain the System?

- 7. When AI Enters the Enterprise: How to Securely Reuse Internal Systems?

- MCP Server is Not Just a Protocol, But a Key Infrastructure for the AI Agent Era

1. When LLMs Need "Action Capabilities": How to Securely Access the Real World?

Background

Large Language Models (LLMs) are essentially text generators, but they do not inherently possess the ability to directly access external systems or execute actions. To connect an LLM to the external world, one must provide access to APIs, databases, or file systems. This immediately introduces security and adaptation hurdles; without a unified secure execution layer, adapting each tool individually is both tedious and insecure.

Without MCP Server

Developers must write custom integration code for every tool and often expose sensitive credentials (such as API keys) or internal systems directly to the AI application. This blurs security boundaries and creates high risk. In summary:

- LLMs can only "generate text" and cannot take actions in the real world on their own.

- Every external system must have unique adaptation code written for every type of model.

- API keys or internal credentials must be exposed to the caller, creating security risks.

The MCP Server Solution

One of the core roles of an MCP Server is to act as a security proxy. It runs in a trusted environment and is responsible for holding and managing sensitive credentials. According to the official MCP documentation, a Server exposes standardized Tools, each with a name, a description of what it does, and a JSON Schema that defines its parameters. The AI application retrieves these descriptions through its MCP Client with a tools/list request, and the model decides when to call a tool based on them. Execution itself, including permission validation and audit logging, is controlled entirely by the MCP Server. This design makes it possible to enforce the principle of least privilege.
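
To make the tools/list exchange concrete, the sketch below shows the JSON-RPC 2.0 messages involved, written in plain Python rather than with an MCP SDK. The query_orders tool and its parameters are hypothetical; the field names name, description, and inputSchema follow the MCP tool format, while transport, the initialization handshake, and pagination are left out.

```python
import json

# Hypothetical tool. The field names (name, description, inputSchema) follow the
# MCP tool shape; the tool itself is invented for illustration.
TOOLS = [
    {
        "name": "query_orders",
        "description": "Look up recent orders for a customer ID.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "customer_id": {"type": "string"},
                "limit": {"type": "integer", "minimum": 1, "maximum": 50},
            },
            "required": ["customer_id"],
        },
    }
]


def handle_request(raw: str) -> str:
    """Answer a JSON-RPC 2.0 request; only tools/list is implemented here."""
    request = json.loads(raw)
    if request.get("method") == "tools/list":
        response = {"jsonrpc": "2.0", "id": request.get("id"), "result": {"tools": TOOLS}}
    else:
        # -32601 is the standard JSON-RPC "Method not found" error code.
        response = {
            "jsonrpc": "2.0",
            "id": request.get("id"),
            "error": {"code": -32601, "message": "Method not found"},
        }
    return json.dumps(response)


# Client side: list the tools; the model sees only names, descriptions, and schemas.
reply = json.loads(handle_request('{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}'))
for tool in reply["result"]["tools"]:
    print(tool["name"], "requires", tool["inputSchema"]["required"])

# If the model decides to use the tool, the client sends a tools/call request like this.
call_request = {
    "jsonrpc": "2.0",
    "id": 2,
    "method": "tools/call",
    "params": {"name": "query_orders", "arguments": {"customer_id": "c42", "limit": 3}},
}
```

Nothing in the listing reveals how query_orders is executed or which credentials it uses; that information stays on the server.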

An MCP Server is a server-side program that follows the MCP protocol. It encapsulates external capabilities in a standard form, as tool interfaces (Tools) or resource interfaces (Resources), and controls access to them through security mechanisms.

- Encapsulates various real-world capabilities (databases, APIs, file systems) into standardized tools.

- Models only need to understand the tool's capability description and parameter structure.

- Actual permission verification, execution, and security boundaries are handled by the MCP Server.
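
On the server side, the division of labor in the list above might look like the following sketch for a hypothetical query_orders tool. The environment variable ORDERS_API_KEY, the orders_backend stand-in, and the hand-rolled argument check are all assumptions made for illustration (a production server would more likely validate against the full JSON Schema with a schema library). The point is the boundary: the model supplies only arguments, and the key never leaves the server process. The content and isError fields mirror the shape of an MCP tool result.

```python
import os

# Hypothetical credential. It is read on the server side only and never appears
# in the tool schema, in the model's arguments, or in the tool result.
API_KEY = os.environ.get("ORDERS_API_KEY", "dev-placeholder-key")

# Mirrors the inputSchema the server would advertise for this hypothetical tool.
ARG_TYPES = {"customer_id": str, "limit": int}
REQUIRED = {"customer_id"}


def validate_arguments(arguments: dict) -> list[str]:
    """Return a list of problems with model-supplied arguments (empty if valid)."""
    problems = [f"unexpected argument: {k}" for k in arguments if k not in ARG_TYPES]
    problems += [f"missing argument: {k}" for k in sorted(REQUIRED - set(arguments))]
    for key, value in arguments.items():
        expected = ARG_TYPES.get(key)
        # bool is a subclass of int in Python, so reject it explicitly.
        if expected is not None and (not isinstance(value, expected) or isinstance(value, bool)):
            problems.append(f"wrong type for {key}")
    return problems


def orders_backend(customer_id: str, limit: int, api_key: str) -> list[dict]:
    """Stand-in for an internal API that requires a credential."""
    if api_key != API_KEY:
        raise PermissionError("backend rejected the credential")
    return [{"order_id": f"{customer_id}-{i}", "status": "shipped"} for i in range(limit)]


def call_query_orders(arguments: dict) -> dict:
    """Handle tools/call for query_orders: validate first, then execute with the server's key."""
    problems = validate_arguments(arguments)
    if problems:
        # Report the failure without executing anything; the key is never used.
        return {"content": [{"type": "text", "text": "; ".join(problems)}], "isError": True}
    limit = max(1, min(arguments.get("limit", 5), 50))  # enforce the schema's bounds
    orders = orders_backend(arguments["customer_id"], limit, API_KEY)
    text = "\n".join(f"{o['order_id']}: {o['status']}" for o in orders)
    return {"content": [{"type": "text", "text": text}], "isError": False}


print(call_query_orders({"customer_id": "c42", "limit": 2})["content"][0]["text"])
```

An argument such as "api_key" smuggled in by the model is rejected as unexpected rather than passed through to the backend.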

LLMs are thus able to interact securely with the real world, evolving from "just chatting" to task-executing AI Agents without the risk of directly exposing core systems. It is important to note that while the MCP protocol defines the standard for exposing and calling capabilities, security control and permission models are handled by specific MCP Server implementations.

2. When Tasks Become Open-Ended: Why Hardcoded Workflows Fail?

Background

The value of an AI Agent lies in handling open-domain, multi-step complex tasks where the execution path is often dynamic and non-deterministic. Traditional API integration models rely on developers pre-writing fixed calling logic, which cannot adapt to this flexibility.

Limitations of Traditional API / Function Calling

Every time a task workflow changes or a tool is added, the application code must be modified and redeployed. This creates a rigid system that struggles to adapt to rapidly changing needs. Key issues include:

- Calling logic is hardcoded into the application.

- Adding tools or changing processes requires code modification and redeployment.

- Unsuitable for open-ended, dynamic decision-making tasks.

The MCP Server Solution

The MCP protocol supports dynamic tool discovery. An MCP Client (integrated into the AI application) discovers which tools a connected Server provides when it connects, and can refresh that list when the Server reports a change. This means the toolset can be updated and expanded independently of the AI application. Agents can then reason about and plan which tools to call, and in what order, based on the capabilities discovered at runtime.

The MCP protocol defines standard Schemas for tools and resources, allowing them to be "dynamically discovered and called."

- Tools expose their functionality through standardized Schemas.

- Calling decisions and execution sequences are determined by model reasoning rather than hardcoding.

- MCP provides the necessary capability exposure and context foundation for autonomous Agent planning.
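
The difference from hardcoded calling logic can be sketched with two toy classes, assuming an in-process object stands in for a connected server (no real transport or MCP SDK is used). The client builds its catalog only from what the server advertises and refreshes it when told the list has changed, mirroring MCP's notifications/tools/list_changed notification. The tool names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class InProcessServer:
    """Toy stand-in for an MCP server: a registry of named tools (no real transport)."""

    tools: dict[str, tuple[dict, Callable[..., str]]] = field(default_factory=dict)
    listeners: list[Callable[[], None]] = field(default_factory=list)

    def register(self, name: str, description: str, schema: dict, fn: Callable[..., str]) -> None:
        self.tools[name] = ({"name": name, "description": description, "inputSchema": schema}, fn)
        # A real server that declares the listChanged capability would send
        # notifications/tools/list_changed here; the toy version calls listeners directly.
        for notify in self.listeners:
            notify()

    def list_tools(self) -> list[dict]:
        """Plays the role of tools/list."""
        return [meta for meta, _ in self.tools.values()]

    def call_tool(self, name: str, arguments: dict) -> str:
        """Plays the role of tools/call."""
        return self.tools[name][1](**arguments)


class Client:
    """Holds no hardcoded tool names; it mirrors whatever the server advertises."""

    def __init__(self, server: InProcessServer) -> None:
        self.server = server
        self.catalog: dict[str, dict] = {}
        server.listeners.append(self.refresh)
        self.refresh()

    def refresh(self) -> None:
        self.catalog = {t["name"]: t for t in self.server.list_tools()}

    def describe_for_model(self) -> str:
        # This text (plus the schemas) is what the model plans over.
        return "\n".join(f"{n}: {self.catalog[n]['description']}" for n in sorted(self.catalog))


def one_string_arg(key: str) -> dict:
    return {"type": "object", "properties": {key: {"type": "string"}}, "required": [key]}


server = InProcessServer()
server.register("search_docs", "Full-text search over internal docs.",
                one_string_arg("query"), lambda query: f"results for {query}")
client = Client(server)
print(client.describe_for_model())

# Later, the server gains a tool; the client code is untouched.
server.register("create_ticket", "Open a support ticket.",
                one_string_arg("title"), lambda title: f"ticket opened: {title}")
print(client.describe_for_model())
```

The Client class does not change when create_ticket is added; only the catalog handed to the model does, and choosing which tool to call remains the model's job.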

The Essential Change

The intelligence core of the system shifts from fixed code logic to real-time reasoning performed by the model based on its understanding of tool capabilities. It moves from "human-defined processes" to "model-planned processes based on semantics."

3. When Models Keep Changing: How to Avoid Tool-Model Coupling?

Background

Models in the AI field iterate rapidly. An organization might simultaneously use models from OpenAI, Anthropic, and Google as well as open-source models. If tool integration code is tightly bound to a specific model's SDK or calling conventions, switching or adding models incurs heavy costs in redundant development and maintenance.

Without MCP Server

Each model requires its own tool adaptation layer, so N models and M tools mean N x M separate integrations, and maintenance costs grow multiplicatively as either number increases.

- Each model has its own set of tool definitions.

- Developers must write compatibility logic for different models separately.

- System maintenance costs increase significantly.

The MCP Server Solution

As an open protocol, MCP acts as a common intermediate language. Any AI application that implements an MCP Client (such as Claude Desktop or Cursor) can communicate with any Server that follows the MCP protocol. Tool developers only need to implement an MCP Server once, according to the standard, to make its capabilities available to multiple AI applications with different model backends. This decouples tools from specific AI models: a single MCP Server can serve multiple different LLMs at the same time.
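
The cost argument can be stated as simple arithmetic. Assuming N AI applications each implement an MCP Client once and M tools are each wrapped by one MCP Server, with N = 3 and M = 10 as purely illustrative numbers:

```latex
\begin{aligned}
\text{point-to-point adapters:}\quad & N \times M = 3 \times 10 = 30 \\
\text{with a shared protocol:}\quad & N + M = 3 + 10 = 13
\end{aligned}
```

The gap widens as either number grows, since adding one more model costs one client implementation rather than M new adapters.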

The emergence of the MCP Server frees developers from the repetitive labor of "writing adapters" for specific models, allowing them to build a sustainable, reusable, model-agnostic AI capability infrastructure.

4. When Tasks Get Long: Why Does Context Become Fragmented?

Background

In complex, multi-turn Agent tasks, information is scattered across different tool return results, read file contents, and conversation history. In traditional methods, organizing this unstructured information effectively and providing it continuously to the model is a huge challenge, often leading to the model "forgetting" key information or making contradictory decisions.

Common Problems

- Tool call results are difficult to reuse.

- State is inconsistent across multi-stage tasks.

- Models lack holistic contextual awareness.

The MCP Server Solution

The MCP protocol defines Resources as a core primitive. Resources are read-only, structured data sources (such as files, API documentation, or database schemas). An MCP Server can expose Resources, and an MCP Client can read them as needed (resources/read) to provide their content to the model as context. This provides the Agent with continuous, structured external knowledge, effectively alleviating the problem of context fragmentation.
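
A minimal sketch of the Resources flow, assuming two invented resources (a README file and a database schema) and plain Python functions in place of a real transport. The result shapes follow MCP's resources/list and resources/read responses (uri, name, mimeType, and text contents); the "[resource: URI]" labelling used when assembling context is a hypothetical convention, not part of the protocol.

```python
# Hypothetical resources; the URIs and contents are invented for illustration.
RESOURCES = {
    "file:///project/README.md": ("text/markdown", "# Billing service\nHandles invoices."),
    "db://orders/schema": ("text/plain", "orders(id TEXT, customer_id TEXT, status TEXT)"),
}


def resources_list() -> dict:
    """Shape of a resources/list result: one entry per resource."""
    return {
        "resources": [
            {"uri": uri, "name": uri.rsplit("/", 1)[-1], "mimeType": mime}
            for uri, (mime, _) in RESOURCES.items()
        ]
    }


def resources_read(uri: str) -> dict:
    """Shape of a resources/read result: a list of text contents for the URI."""
    mime, text = RESOURCES[uri]
    return {"contents": [{"uri": uri, "mimeType": mime, "text": text}]}


def build_context(uris: list[str]) -> str:
    """Client side: read selected resources and label each with its URI, so the same
    structured context can be supplied again at every step of a long task."""
    blocks = []
    for uri in uris:
        for item in resources_read(uri)["contents"]:
            blocks.append(f"[resource: {item['uri']}]\n{item['text']}")
    return "\n\n".join(blocks)


available = [r["uri"] for r in resources_list()["resources"]]
print(build_context(available))
```

Because each block carries its URI, a later step can re-read the same resource instead of relying on whatever fragment survived earlier in the conversation.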

MCP Servers allow Agents to maintain continuous, structured context throughout multi-step tasks rather than "temporarily remembering information."

5. When Capabilities are Local: How to Securely Connect to Cloud Models?

Many core enterprise capabilities (such as accessing local file systems, Git repositories, or internal dev toolchains) reside on a user's local machine or within an enterprise intranet. Users may want to use powerful cloud-based LLMs to drive these capabilities, but granting local permissions directly to cloud models is highly insecure.

Without MCP Server

- File systems and version control systems cannot be accessed safely.

- Excessively high permissions must be granted to enable calls.

- High risk of credential leakage or abuse.

The MCP Server Solution

An MCP Server can run as a lightweight process in the local environment and expose only the capabilities it is configured to allow, so the model can reach the resources it needs without overstepping those bounds. For example, in Claude Desktop, users can configure local file system or Git MCP Servers. These Servers expose narrowly scoped operations (such as reading files in an approved directory or fetching a git log). The desktop application talks to the local Server over a local channel (typically MCP's stdio transport); when the cloud-hosted model requests a tool call, the application relays it, and the local Server validates and executes it. Because the scope of exposed capabilities is precisely controlled, the MCP architecture can balance powerful functionality with security in IDE-based coding assistants.

This design is a key reason for MCP's rapid adoption in IDEs and local development scenarios.
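
The directory scoping described above usually comes down to a check like the one below. This is a minimal sketch, not the code of any particular filesystem server: read_file_tool and is_allowed are hypothetical names, and a temporary directory stands in for the folder the user approved. It refuses any path that resolves outside the approved root, which catches "../" traversal, absolute paths, and symlinks that point elsewhere.

```python
import tempfile
from pathlib import Path


def is_allowed(requested: str, root: Path) -> bool:
    """True if the model-supplied path stays inside root after resolution."""
    # resolve() collapses ".." segments and follows symlinks; joining an absolute
    # path onto root yields that absolute path, so "/etc/passwd" is caught too.
    candidate = (root / requested).resolve()
    return candidate.is_relative_to(root.resolve())


def read_file_tool(arguments: dict, root: Path) -> str:
    """Read-only tool: return a file's text if, and only if, it lies under root."""
    requested = arguments["path"]
    if not is_allowed(requested, root):
        raise PermissionError(f"{requested!r} is outside the allowed directory")
    return (root / requested).resolve().read_text(encoding="utf-8")


# Demo with a throwaway directory standing in for the user-approved folder.
with tempfile.TemporaryDirectory() as tmp:
    workspace = Path(tmp)
    (workspace / "notes.txt").write_text("meeting at 10", encoding="utf-8")
    print(read_file_tool({"path": "notes.txt"}, workspace))  # meeting at 10
    print(is_allowed("../../etc/passwd", workspace))  # False
    print(is_allowed("/etc/passwd", workspace))  # False
```

The tool offers no write or execute path at all, so even a fully compromised prompt can only read files the user already placed in scope.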

6. When Agent Scale Increases: How to Maintain the System?

In large-scale systems, an Agent may need to access dozens of tools. Complex permission policies and scattered calling logic make maintenance difficult.

Pain Points of Traditional Architecture

- Tools are scattered everywhere with no unified governance.

- Agent logic becomes too complex to maintain.

- Permissions and auditing are fragmented.

The MCP Server Solution

Because every tool and data source sits behind an MCP Server with a uniform interface, MCP makes it practical to build a centralized management layer for tool and data access:

- Centralized registration and management of tools.

- Agents only need to know which capabilities are available to them.

- The MCP Server becomes the "capability abstraction layer" for the Agent.
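
MCP itself does not prescribe such a registry; it is an architectural pattern that the uniform server interface makes straightforward. The hypothetical sketch below registers tools from several servers under namespaced names, and each agent sees and can call only the tools it has been granted. The server names jira and github and the agent name are placeholders, and actually forwarding the call to the owning server is omitted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolRef:
    server: str  # which MCP server provides the tool
    name: str  # the tool's name on that server


class ToolRegistry:
    """Hypothetical central registry sitting in front of several MCP servers."""

    def __init__(self) -> None:
        self._tools: dict[str, ToolRef] = {}
        self._grants: dict[str, set[str]] = {}

    def add_server(self, server: str, tool_names: list[str]) -> None:
        # Namespacing ("server.tool") avoids clashes when two servers reuse a name.
        for name in tool_names:
            self._tools[f"{server}.{name}"] = ToolRef(server, name)

    def grant(self, agent: str, *qualified_names: str) -> None:
        unknown = [q for q in qualified_names if q not in self._tools]
        if unknown:
            raise KeyError(f"unknown tools: {unknown}")
        self._grants.setdefault(agent, set()).update(qualified_names)

    def tools_for(self, agent: str) -> list[str]:
        """The only tool list an agent ever sees: what it has been granted."""
        return sorted(self._grants.get(agent, set()))

    def route(self, agent: str, qualified_name: str) -> ToolRef:
        """Resolve a call to its owning server, enforcing the grant in one place."""
        if qualified_name not in self._grants.get(agent, set()):
            raise PermissionError(f"{agent} may not call {qualified_name}")
        return self._tools[qualified_name]


registry = ToolRegistry()
registry.add_server("jira", ["search_issues", "create_issue"])
registry.add_server("github", ["search_issues", "list_prs"])
registry.grant("triage-agent", "jira.search_issues", "github.search_issues")
print(registry.tools_for("triage-agent"))  # ['github.search_issues', 'jira.search_issues']
print(registry.route("triage-agent", "jira.search_issues"))  # ToolRef(server='jira', name='search_issues')
```

Permission checks live in route() rather than in each agent, so tightening a grant is a one-line change that every agent picks up.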

This layered governance is well suited to upgrading enterprise-level system architectures.

7. When AI Enters the Enterprise: How to Securely Reuse Internal Systems?

Enterprises want to leverage AI to improve operational efficiency, but internal systems (such as CRM, ERP, and databases) usually have non-standard interfaces, complex permission models, and strict compliance and auditing requirements. Directly exposing these systems to a model is unsafe.

The MCP Server Solution

In enterprise architecture, the MCP Server can act as an AI-specific "Capability Gateway." Every key internal system (like Salesforce, Jira, or internal databases) can be encapsulated by a dedicated MCP Server. This Server is responsible for:

- Adaptation and Abstraction: Wrapping complex internal APIs as standardized MCP Tools so that callers never have to deal with their internal details.

- Permission Centralization: Integrating enterprise identity authentication (like SSO) and implementing access control at the tool level.

- Audit Logging: Centrally recording detailed logs of all AI-initiated operations to meet compliance requirements.
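
The three responsibilities above can be combined in a single tool handler. In this sketch, the legacy CRM function, its field names, the group-to-tool permission table, and the user dictionary (assumed to come from an SSO token that has already been verified) are all hypothetical; a real gateway would write audit records to durable storage rather than an in-memory list.

```python
import json
from datetime import datetime, timezone

AUDIT_LOG: list[str] = []

# Hypothetical mapping from SSO group claims to the groups allowed to use each tool.
TOOL_PERMISSIONS = {"crm_lookup_account": {"sales", "support"}}


def legacy_crm_get(acct_no: str) -> dict:
    """Stand-in for a non-standard internal API with cryptic field names."""
    return {"ACCT_NO": acct_no, "NM_1": "Acme Corp", "STAT_CD": "A", "INT_NOTES": "credit hold review"}


def crm_lookup_account(user: dict, arguments: dict) -> dict:
    """MCP-facing tool: check permissions, write an audit entry, adapt the legacy record."""
    tool = "crm_lookup_account"
    allowed = bool(TOOL_PERMISSIONS[tool] & set(user["groups"]))
    # Every attempt is audited, including denied ones.
    AUDIT_LOG.append(json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user["sub"],
        "tool": tool,
        "arguments": arguments,
        "allowed": allowed,
    }))
    if not allowed:
        return {"content": [{"type": "text", "text": "Access denied"}], "isError": True}
    raw = legacy_crm_get(arguments["account_id"])
    # Adaptation: expose clean field names and drop internal-only fields.
    clean = {
        "account_id": raw["ACCT_NO"],
        "name": raw["NM_1"],
        "status": {"A": "active", "I": "inactive"}.get(raw["STAT_CD"], "unknown"),
    }
    return {"content": [{"type": "text", "text": json.dumps(clean)}], "isError": False}


alice = {"sub": "alice@example.com", "groups": ["sales"]}
intern = {"sub": "bob@example.com", "groups": ["interns"]}
ok = crm_lookup_account(alice, {"account_id": "1001"})
denied = crm_lookup_account(intern, {"account_id": "1001"})
print(ok["content"][0]["text"])  # {"account_id": "1001", "name": "Acme Corp", "status": "active"}
print(denied["isError"], len(AUDIT_LOG))  # True 2
```

The model only ever sees the cleaned fields; INT_NOTES and the legacy status codes stay behind the gateway.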

In this way, an MCP Server can be viewed as the "firewall and adapter" of the AI capability layer within an enterprise architecture. It provides a consistent and secure access method for different Agents and models, enabling AI to support business operations under safe and controllable conditions.

MCP Server is Not Just a Protocol, But a Key Infrastructure for the AI Agent Era

In summary, the MCP Server does not solve a single technical point; rather, it addresses a series of structural challenges faced by AI Agents as they move toward large-scale, engineered applications:

- Security Challenges: Implementing least-privilege access via a proxy model.

- Integration Challenges: Decoupling tools and models through a standardized protocol to reduce N x M integration complexity.

- Flexibility Challenges: Supporting autonomous Agent planning through dynamic discovery to adapt to open-domain tasks.

- Context Challenges: Providing structured external memory through primitives like Resources.

- Enterprise Challenges: Satisfying permission, auditing, and compliance requirements via a gateway model.

MCP (Model Context Protocol) not only defines a standardized way for models to communicate with external systems but also provides the infrastructure for building scalable, secure AI Agent platforms.

It solves a whole class of systemic problems ranging from "capability exposure" and "tool governance" to "model decoupling," "context management," and "secure access," making AI Agent development in multi-model, multi-tool, and complex task scenarios sustainable, maintainable, and secure.

As Anthropic stated when announcing the donation of MCP to the Linux Foundation to establish the Agentic AI Foundation, this move aims to promote the open development of AI Agent interoperability standards. The MCP Server is a key component of this vision, providing a solid and reliable infrastructure layer for a future where different AIs and tools collaborate safely and efficiently. As AI moves from demos to production and from single-point applications to complex systems, the MCP Server provides a well-thought-out, scalable engineering answer.

MCP Article Series:

- Comprehensive Analysis of MCP Server: The Context and Tool Communication Hub in the AI Agent Era

- What Key Problems Does MCP Server Solve? Why AI Agents Need It

- MCP Server Architecture and Working Principles: From Protocol to Execution Flow

- MCP Server Practical Guide: Building, Testing, and Deploying from 0 to 1

- MCP Server Application Scenarios Evaluation and Technical Selection Guide

About the Author

This content is compiled and published by the NavGood Content Editorial Team. NavGood is a platform focused on AI tools and the AI application ecosystem, providing long-term tracking of AI Agents, automated workflows, and the development and practical implementation of Generative AI technology.

Disclaimer: This article represents the author's personal understanding and practical experience. It does not represent the official position of any framework, organization, or company, nor does it constitute commercial, financial, or investment advice. All information is based on public sources and the author's independent research.

References:

[1]: https://modelcontextprotocol.io/docs/learn/server-concepts "Understanding MCP servers - Model Context Protocol"

[2]: https://modelcontextprotocol.io/docs/learn/architecture "Architecture overview - Model Context Protocol"

[3]: https://cloud.google.com/blog/products/ai-machine-learning/announcing-official-mcp-support-for-google-services "Announcing Model Context Protocol (MCP) support for Google services"

[4]: https://developer.pingidentity.com/identity-for-ai/agents/idai-what-is-mcp.html "What is Model Context Protocol (MCP)?"