MCP Server Architecture and Working Principles Explained: From Protocol to Execution Flow

As AI systems evolve, they are becoming increasingly complex. When building sophisticated AI Agent systems, traditional API calling paradigms (such as independent HTTP requests and provider-specific SDKs) suffer from low development efficiency, high security risks, and poor maintainability. For instance, independently defined APIs follow no unified standard, each model vendor specifies function calling differently, and there are no efficient mechanisms for capability discovery or dynamic integration.

The Model Context Protocol (MCP) emerged to solve these problems. Through standardization at the protocol level, it introduces an extensible, cross-service tool and resource calling mechanism, enabling AI agents to collaborate robustly with external systems. The core design philosophy of MCP lies in protocol abstraction and separation of concerns. It does not dictate specific implementations but defines a universal language for interaction between AI applications and external capabilities.

This article explains the MCP Server architecture, protocol principles, and execution flow. It highlights why a protocol layer is preferred over an SDK and how MCP supports the scalable tool calling required by modern intelligent systems.

Target Audience:

- Tech enthusiasts and entry-level learners

- Professionals and managers seeking efficiency improvements

- Enterprise decision-makers and business department heads

- General users interested in the future trends of AI

Contents:

- 1. Core Design Philosophy of the MCP Protocol

- 2. Core Components of MCP

- 3. MCP vs. Traditional API Integration: Architectural Paradigms

- 4. How MCP Servers Collaborate with AI Agents

- 5. The Three Core Capability Primitives of MCP

- 6. Communication Relationship: MCP Client, Server, and LLM

- 7. Complete MCP Server Calling Process

- 8. MCP vs. Traditional API / Function Calling: Model Interface Layer Differences

- 9. Limitations and Challenges of MCP

- Conclusion

- MCP Frequently Asked Questions (FAQ)

1. Core Design Philosophy of the MCP Protocol

Rather than making a single SDK the integration contract, MCP chooses protocol-layer abstraction. SDKs exist, but the specification is what guarantees compatibility. This choice brings core advantages such as standardization, language independence, and broad applicability. Any program that implements the MCP specification can communicate with any other compliant program, regardless of the programming language or framework used. This design maximizes ecosystem openness and interoperability. Communication is based on JSON-RPC 2.0, a lightweight remote procedure call protocol whose structured request and response formats are well suited to automated machine-to-machine interaction.

Communication Message Format

MCP utilizes JSON-RPC 2.0 as its foundational message format and communication standard.

JSON-RPC is a stateless, light-weight remote procedure call (RPC) protocol. It uses JSON as its data format and can be carried over different transport layers such as STDIO or HTTP.

In MCP, JSON-RPC is responsible for:

- Defining request/response message structures;

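- Standardizing method calls (e.g., `initialize`, `tools/list`, `tools/call`);

- Providing a structured error mechanism. JSON-RPC 2.0 also defines batch requests, although MCP specification revisions differ on whether batching is supported.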
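The following minimal Python sketch makes these responsibilities concrete. It builds JSON-RPC 2.0 requests, notifications, and error responses with the standard library only, and it dispatches a raw message to a handler table. The helper names (`make_request`, `make_notification`, `make_error`, `handle`) are hypothetical. The field names and error codes come from the JSON-RPC 2.0 specification, and `ping` is MCP's utility method that returns an empty result.

```python
import json
from typing import Any, Callable, Optional

# Standard error codes defined by the JSON-RPC 2.0 specification.
PARSE_ERROR = -32700
INVALID_REQUEST = -32600
METHOD_NOT_FOUND = -32601


def make_request(req_id: int, method: str, params: Optional[dict] = None) -> dict:
    """A request carries an id, so the receiver must send back a response."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "id": req_id, "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def make_notification(method: str, params: Optional[dict] = None) -> dict:
    """A notification has no id, so no response is expected."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def make_error(req_id: Optional[int], code: int, message: str) -> dict:
    """Error responses carry an "error" object instead of a "result"."""
    return {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}


def handle(raw: str, methods: dict[str, Callable[[dict], dict]]) -> Optional[dict]:
    """Parse one raw message and dispatch it to a handler table."""
    try:
        msg = json.loads(raw)
    except json.JSONDecodeError:
        return make_error(None, PARSE_ERROR, "Parse error")
    if not isinstance(msg, dict) or msg.get("jsonrpc") != "2.0" or "method" not in msg:
        return make_error(None, INVALID_REQUEST, "Invalid Request")
    handler = methods.get(msg["method"])
    if "id" not in msg:
        # Notifications never receive a reply, even when the method is unknown.
        if handler is not None:
            handler(msg.get("params", {}))
        return None
    if handler is None:
        return make_error(msg["id"], METHOD_NOT_FOUND, "Method not found")
    return {"jsonrpc": "2.0", "id": msg["id"], "result": handler(msg.get("params", {}))}


methods = {"ping": lambda params: {}}
print(handle(json.dumps(make_request(1, "ping")), methods))
# {'jsonrpc': '2.0', 'id': 1, 'result': {}}
print(handle(json.dumps(make_request(2, "does/not/exist")), methods))
# {'jsonrpc': '2.0', 'id': 2, 'error': {'code': -32601, 'message': 'Method not found'}}
```

The request/notification split matters in practice. Only messages with an `id` get a reply, which is why the dispatcher returns `None` for notifications.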

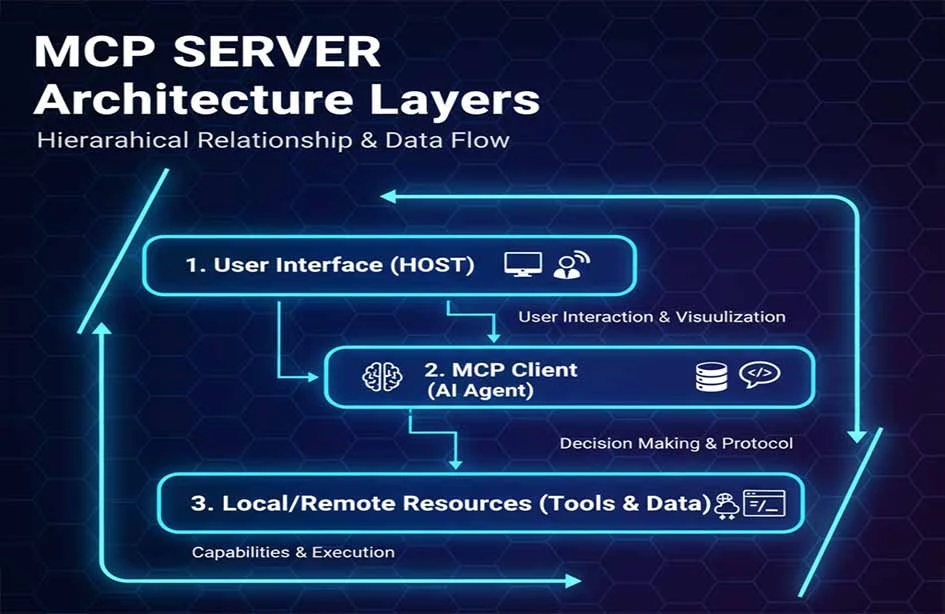

Three-Layer Architecture Model

MCP adopts a three-layer architecture model: MCP Host, MCP Client, and MCP Server. Each server in turn fronts the underlying tools and resources.

| Layer (top to bottom) |
|---|
| MCP Hosts |
| MCP Clients |
| MCP Servers |

- MCP Hosts: The LLM application initiating the request (e.g., Claude Desktop, an IDE, or an AI tool).

- MCP Clients: Components inside the host program, each maintaining a dedicated 1:1 connection with one MCP server.

- MCP Servers: Providing context, tools, and prompt information to the MCP client.

This layered design significantly reduces coupling between modules and enhances the scalability of the MCP Server.

2. Core Components of MCP

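Transport Layer

MCP currently defines two standard transport mechanisms for client-server communication: STDIO and an HTTP-based transport. Earlier revisions of the specification paired HTTP POST requests with Server-Sent Events (SSE) for streaming messages from server to client. Newer revisions replace this with "Streamable HTTP", which can still use SSE for server-to-client streaming.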

- STDIO: Security and Performance First. The client launches the MCP Server as a child process that runs locally alongside the AI application. The two exchange JSON-RPC messages over the process's standard input and output streams. This local inter-process communication (IPC) offers low latency and simple deployment. Because all communication stays on the machine, the transport itself does not expose credentials or data to the network. This is the preferred method for integrating local capabilities into personal productivity tools like Claude Desktop.

- HTTP with Server-Sent Events (SSE): Accessibility and Scalability First. The MCP Server is deployed as a network service accessible to multiple remote clients. Clients send JSON-RPC messages in HTTP POST requests, and the server can stream responses and notifications back over SSE. This is suitable for shared capability hubs within enterprises or for SaaS services. HTTPS and standard HTTP authentication provide the security foundation.

This mechanism allows MCP to support both simple tool calls and large-scale distributed servers. The choice depends on the scenario: STDIO is more efficient for local process communication, while HTTP offers better scalability for remote cluster deployments.
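Over STDIO, the MCP specification frames each JSON-RPC message as one line of JSON terminated by a newline, with no embedded newlines. The Python sketch below shows that framing. `encode_frame` and `decode_frames` are hypothetical helper names. A real client would read these lines from the server subprocess's stdout (for example, a `subprocess.Popen` pipe) rather than from the in-memory buffer used here for illustration.

```python
import io
import json
from typing import Iterator


def encode_frame(message: dict) -> str:
    """Serialize one message as a single newline-terminated line.

    json.dumps escapes newlines inside strings as the two characters \\n,
    so the only raw newline in the output is the terminator.
    """
    return json.dumps(message, separators=(",", ":")) + "\n"


def decode_frames(stream: io.TextIOBase) -> Iterator[dict]:
    """Yield one parsed message per non-empty line of the stream."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


# Simulate the server's stdout with an in-memory text buffer.
buffer = io.StringIO()
buffer.write(encode_frame({"jsonrpc": "2.0", "id": 1, "method": "tools/list"}))
buffer.write(encode_frame({"jsonrpc": "2.0", "method": "notifications/message",
                           "params": {"level": "info", "data": "line one\nline two"}}))
buffer.seek(0)

for msg in decode_frames(buffer):
    print(msg["method"])  # prints "tools/list", then "notifications/message"
```

Line-based framing keeps STDIO servers trivially simple to write. The flip side is that a server must never print stray output (such as debug text) to stdout, because the client would try to parse it as a message.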

Protocol Layer

At the protocol layer, MCP uses the JSON-RPC 2.0 specification to define standard methods, such as:

- Initializing connections (`initialize`);

- Capability discovery (`tools/list`);

- Tool execution (`tools/call`).

This design keeps the core message structure of JSON-RPC while layering MCP-specific method names and communication semantics on top of it.
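The three methods can be illustrated as raw JSON-RPC messages. This Python sketch builds the client side of the exchange. The field names (`protocolVersion`, `capabilities`, `clientInfo`, `name`, `arguments`) follow the published MCP specification. The protocol version string, the client name, and the `get_weather` tool are placeholder assumptions. After a successful `initialize` response, the client also sends a `notifications/initialized` notification before normal requests begin.

```python
import itertools
import json
from typing import Optional

# Placeholder: use a protocol revision that both your client and server support.
PROTOCOL_VERSION = "2025-06-18"
_next_id = itertools.count(1)


def request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC request with a fresh, increasing id."""
    msg = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# 1. Handshake: announce the protocol revision, capabilities, and client identity.
init = request("initialize", {
    "protocolVersion": PROTOCOL_VERSION,
    "capabilities": {},  # e.g. {"sampling": {}} if the client can serve LLM sampling
    "clientInfo": {"name": "example-client", "version": "0.1.0"},
})
# Sent after the server's initialize response arrives; no id, so it is a notification.
initialized = {"jsonrpc": "2.0", "method": "notifications/initialized"}

# 2. Capability discovery.
list_tools = request("tools/list")

# 3. Tool execution; "get_weather" and its arguments are hypothetical.
call = request("tools/call", {"name": "get_weather", "arguments": {"city": "Berlin"}})

for msg in (init, initialized, list_tools, call):
    print(json.dumps(msg))
```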

Security and Authentication

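MCP does not provide a complete security mechanism of its own. Recent specification revisions define an optional OAuth-based authorization flow for HTTP transports. Beyond that, the security design relies on the specific MCP Server implementation and its deployment environment, which use established solutions such as: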

- HTTPS/TLS encrypted transmission;

- Authentication via OAuth2, API Keys, or mTLS;

- Least-privilege authorization control.

This means the MCP protocol is not itself a complete security solution. Server implementers are responsible for designing and integrating third-party security systems. For example, enterprise platforms may integrate MCP with identity management systems for secure access control, governance, and auditing.
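To illustrate "least-privilege authorization control" at the server boundary, here is a minimal Python sketch in which each API key may call only an explicit allowlist of tools. Everything here is hypothetical: the key strings, tool names, `ApiKeyPolicy`, and `guarded_call`. In a real HTTP deployment the credential would typically arrive in an `Authorization` header and be validated against an external identity provider or IAM system, not hard-coded.

```python
import hmac
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ApiKeyPolicy:
    """Hypothetical least-privilege policy: each API key may call only its listed tools."""
    allowed_tools: dict[str, set[str]] = field(default_factory=dict)

    def authorize(self, presented_key: str, tool_name: str) -> bool:
        for key, tools in self.allowed_tools.items():
            # compare_digest is designed to resist timing attacks on the key comparison.
            if hmac.compare_digest(presented_key.encode(), key.encode()):
                return tool_name in tools
        return False


def guarded_call(policy: ApiKeyPolicy, api_key: str, tool_name: str,
                 arguments: dict, execute: Callable[[str, dict], Any]) -> Any:
    """Reject the call before any tool code runs if the key lacks permission."""
    if not policy.authorize(api_key, tool_name):
        raise PermissionError(f"key is not permitted to call {tool_name!r}")
    return execute(tool_name, arguments)


def run_tool(name: str, arguments: dict) -> str:
    """Stand-in for the server's real tool dispatch."""
    return f"ran {name}"


policy = ApiKeyPolicy({
    "reader-key": {"search_docs"},
    "admin-key": {"search_docs", "delete_doc"},
})
print(guarded_call(policy, "reader-key", "search_docs", {}, run_tool))  # ran search_docs
try:
    guarded_call(policy, "reader-key", "delete_doc", {}, run_tool)
except PermissionError as exc:
    print(exc)  # key is not permitted to call 'delete_doc'
```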

Extensibility and Plugin Architecture

The extensibility of MCP stems naturally from its protocol design. Developing a "custom tool" means creating a server program that implements the MCP protocol. When this server starts and the AI application's MCP Client connects to it, the client discovers its tools through the standard `tools/list` method. The AI application can then use these new capabilities without code changes or recompilation, achieving plug-and-play functionality.

MCP Servers typically include mechanisms for:

- Custom tool development;

- Dynamic registration/discovery of tools and capabilities;

- Plugin support to extend behavior without modifying the protocol core.

Tool Lifecycle and Session Management

The lifecycle of an MCP connection begins with the initialize handshake and ends when the connection closes. During this period, all tool calls and resource reads share a logical session. For MCP Servers that need to serve multiple independent users or sessions (especially in HTTP mode), the implementation must manage state isolation between different clients or requests.

The MCP Server manages:

- Session context: Saving state across multiple calls;

- Isolation mechanisms: Ensuring sessions between different clients are isolated to prevent data leaks or state pollution.

This management supports advanced use cases like long sessions and multi-step operations.
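The isolation requirement can be sketched as a per-session state store keyed by an unguessable ID. With the Streamable HTTP transport, a server may assign such an ID and return it in the `Mcp-Session-Id` header. The store below, its method names, and the `draft_booking` key are hypothetical illustrations, not part of the protocol.

```python
import secrets
import threading


class SessionStore:
    """Isolated per-session state keyed by an opaque session ID (hypothetical design).

    The lock guards the session table itself; each session's dict is expected
    to be used by one client at a time.
    """

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._sessions: dict[str, dict] = {}

    def create(self) -> str:
        # An unguessable ID stops one client from addressing another client's session.
        session_id = secrets.token_urlsafe(16)
        with self._lock:
            self._sessions[session_id] = {}
        return session_id

    def get(self, session_id: str) -> dict:
        with self._lock:
            if session_id not in self._sessions:
                raise KeyError("unknown or expired session")
            return self._sessions[session_id]

    def close(self, session_id: str) -> None:
        # Discard all state when the connection ends so nothing leaks into later sessions.
        with self._lock:
            self._sessions.pop(session_id, None)


store = SessionStore()
alice, bob = store.create(), store.create()
store.get(alice)["draft_booking"] = {"room": "A1"}
print(store.get(bob))  # {}  (Bob never sees Alice's state)
store.close(alice)
```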

System Performance and Reliability Design

As a lightweight protocol, MCP's performance depends heavily on server implementation efficiency and network latency. For high-availability requirements, multiple MCP Server instances can be deployed behind a load balancer. The protocol also defines a logging interface that lets servers send execution logs to the client, which can serve as one building block for auditing.

MCP Server designs often include:

- Load balancing for multi-replica deployment;

- Fault recovery to ensure high availability;

- Logging and auditing for tracking call history and diagnostics.

These mechanisms enhance stability for enterprise-grade use cases.
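The logging interface mentioned above uses RFC 5424 severity levels. A client can set a minimum level with a `logging/setLevel` request, and the server emits `notifications/message` notifications carrying `level`, an optional `logger` name, and `data`. The Python helper below is a hypothetical sketch of the server side, including the assumed default threshold of `"info"`. Durable audit trails would still require the server to persist logs in its own storage.

```python
# RFC 5424 severities, lowest to highest, as used by MCP's logging utility.
LEVELS = ["debug", "info", "notice", "warning", "error", "critical", "alert", "emergency"]


class McpLogger:
    """Hypothetical server-side helper that emits MCP log notifications."""

    def __init__(self, send, minimum: str = "info") -> None:
        self._send = send  # callable that transmits one JSON-RPC message to the client
        self._minimum = LEVELS.index(minimum)

    def set_level(self, params: dict) -> dict:
        """Handler for the client's logging/setLevel request."""
        self._minimum = LEVELS.index(params["level"])
        return {}

    def log(self, level: str, logger: str, data) -> None:
        if LEVELS.index(level) < self._minimum:
            return  # below the threshold the client asked for
        self._send({
            "jsonrpc": "2.0",
            "method": "notifications/message",  # a notification: no id, no reply
            "params": {"level": level, "logger": logger, "data": data},
        })


sent: list[dict] = []
log = McpLogger(sent.append)
log.log("debug", "db", "connection pool warmed")  # dropped: below "info"
log.log("error", "db", {"error": "timeout", "ms": 5000})
log.set_level({"level": "debug"})
log.log("debug", "db", "retrying")
print(len(sent))  # 2
```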

Integration with Modern Ecosystems

In cloud-native environments, MCP Servers (typically those using the HTTP transport) can be containerized and deployed on platforms such as Kubernetes behind cloud load balancers. This provides auto-scaling, health checks, and centralized management within modern enterprise infrastructure stacks.

3. MCP vs. Traditional API Integration: Architectural Paradigms

Compared to independent API integration, MCP utilizes protocol abstraction to improve security, maintainability, and cross-platform capabilities.

| Feature | MCP Server | Traditional API Integration | Plugin Systems | SDK Mode |
|---|---|---|---|---|
| Standardization | Industry Standard | Varies per API | Platform-specific | Provider-specific |
| Development Cost | Low (Implement once) | High | Medium | High |
| Maintenance Complexity | Low | Very High | Medium | High |
| Cross-platform Capability | Excellent | Poor | Poor | Poor |
| Security Model | Unified Control | Fragmented | Platform-controlled | Fragmented |
| Real-time Support | Native Support | Polling/Webhooks | Limited | Custom |
| Community Ecosystem | Growing Rapidly | Fragmented | Closed | Vendor Lock-in |

4. How MCP Servers Collaborate with AI Agents

In a typical planning-based AI Agent architecture, components include a Planner, Executor, and Memory. The MCP Server acts as the standardized execution backend in this architecture.

- Planner: (Usually the LLM) formulates strategies based on input. It receives the current list of available Tools and their descriptions from the MCP Client, then outlines a sequence of steps (e.g., "Use tool A to query data, then tool B to process results").

- Executor: (Usually the MCP Client) issues `tools/call` requests to the corresponding MCP Server based on the plan.

- Memory: Stores state and context. Execution results are returned to the Agent, stored in Memory, and used for subsequent planning. Additionally, Resources can supply background knowledge relevant to the task.

The MCP Server serves as the capability provider for the Executor, allowing the Planner to access tools and resources via a standard protocol.
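The division of labor above can be sketched as a small loop. A planner chooses steps from the discovered tool list, an executor issues one `tools/call` per step, and memory accumulates results for later rounds. This is a hypothetical Python illustration. The scripted planner stands in for the LLM, `fake_tools_call` stands in for a real MCP client, and the tool names `query_sales` and `summarize` are invented.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Step:
    tool: str
    arguments: dict


@dataclass
class Agent:
    """Hypothetical Planner / Executor / Memory loop around an MCP client."""
    plan: Callable[[list, list], list]      # Planner: stands in for the LLM
    call_tool: Callable[[str, dict], dict]  # Executor: stands in for tools/call
    memory: list = field(default_factory=list)

    def run(self, tools: list) -> list:
        for step in self.plan(tools, self.memory):
            result = self.call_tool(step.tool, step.arguments)
            # Memory: results are kept so later planning rounds can use them.
            self.memory.append({"tool": step.tool, "result": result})
        return self.memory


def scripted_planner(tools: list, memory: list) -> list:
    """Fixed two-step plan; a real planner would ask the LLM, given the tool list."""
    available = {t["name"] for t in tools}
    steps = [Step("query_sales", {"month": "2024-05"}), Step("summarize", {})]
    return [s for s in steps if s.tool in available]


def fake_tools_call(name: str, arguments: dict) -> dict:
    """Returns an MCP-shaped tool result without contacting a server."""
    return {"content": [{"type": "text", "text": f"{name} done"}], "isError": False}


agent = Agent(scripted_planner, fake_tools_call)
history = agent.run([{"name": "query_sales"}, {"name": "summarize"}])
print([entry["tool"] for entry in history])  # ['query_sales', 'summarize']
```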

The position of MCP in an AI Agent architecture is similar to an operating system's interface for external services, unifying and standardizing capability execution.

MCP is complementary to frameworks like LangChain and AutoGen. While these frameworks provide high-level abstraction and orchestration for AI Agent workflows, MCP serves as the standardized tool execution layer. It solves the "glue code" and fragmentation issues these frameworks face when integrating specific tools.

5. The Three Core Capability Primitives of MCP

These primitives define the MCP protocol data model and how AI applications interact with the server.

- Tools: Callable functions like search or database operations. Each tool has a clear name, a description, and a JSON Schema for its input parameters. The AI model triggers these when necessary (e.g., a `send_email` tool).

- Resources: Accessible data sources such as file systems or object storage. Each resource has a URI and a MIME type. AI applications read their contents to inject context into prompts (e.g., a `file:///reports/weekly.md` resource).

- Prompts: Pre-defined templates or contexts used to assist LLM decision-making. They provide a structured way for users to launch specific tasks (e.g., a "Plan Weekend Trip" prompt that guides the model to use relevant tools).

This three-primitive design allows the MCP Server to clearly express what it can do while supporting dynamic discovery. Additionally, the protocol allows servers to request model generation (LLM sampling) from the client. This lets the server use the client's LLM for reasoning during its own execution logic. To support this, clients must declare the sampling capability during initialization.
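The following Python sketch shows how the three primitives might be declared. It uses the example names from the list above and field names from the MCP specification (`inputSchema`, `uri`, `mimeType`, `arguments`). It also shows the shape of a server-to-client `sampling/createMessage` request. The concrete values are hypothetical. `missing_arguments` checks only the `required` list, a tiny subset of JSON Schema validation; a production server would use a full validator.

```python
# Hypothetical definitions shaped like MCP's three primitives.
SEND_EMAIL_TOOL = {
    "name": "send_email",
    "description": "Send an email to one recipient.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "to": {"type": "string"},
            "subject": {"type": "string"},
            "body": {"type": "string"},
        },
        "required": ["to", "subject"],
    },
}

WEEKLY_REPORT_RESOURCE = {
    "uri": "file:///reports/weekly.md",
    "name": "Weekly report",
    "mimeType": "text/markdown",
}

PLAN_TRIP_PROMPT = {
    "name": "plan_weekend_trip",
    "description": "Guide the model through planning a weekend trip.",
    "arguments": [{"name": "destination", "description": "City to visit", "required": True}],
}


def missing_arguments(tool: dict, arguments: dict) -> list[str]:
    """Report required fields absent from a call (only the 'required' keyword is checked)."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


# Server-to-client sampling request; only valid if the client declared "sampling".
SAMPLING_REQUEST = {
    "jsonrpc": "2.0",
    "id": 42,
    "method": "sampling/createMessage",
    "params": {
        "messages": [{"role": "user",
                      "content": {"type": "text", "text": "Summarize the weekly report."}}],
        "maxTokens": 200,
    },
}

print(missing_arguments(SEND_EMAIL_TOOL, {"to": "ops@example.com"}))  # ['subject']
```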

6. Communication Relationship: MCP Client, Server, and LLM

Understanding the relationship between these three is key to understanding the MCP workflow:

- Who initiates the connection? The MCP Client (integrated into the AI app) actively initiates the connection to the MCP Server.

- Who decides to call a tool? The LLM (within the AI app) makes decisions based on internal logic. The MCP Client provides the list of available tools as context, and the LLM decides whether to call a tool, which one, and with what parameters.

- Who executes the operation? The MCP Server calls the actual service or resource. It receives the standardized `tools/call` request from the client, translates it into actions for the underlying system (database, API, file), and returns the standardized result.
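The server's translation step can be sketched as a dispatcher that maps a `tools/call` request onto a local function. Following the MCP specification's distinction, an unknown tool is reported as a JSON-RPC protocol error. A failure inside the tool is returned as a normal result with `isError: true`, so the LLM can see what went wrong. The `lookup_user` tool and its dictionary "database" are hypothetical.

```python
from typing import Any, Callable

INVALID_PARAMS = -32602  # JSON-RPC 2.0 "Invalid params"

USERS = {"u1": "Alice"}  # hypothetical stand-in for a real database


def lookup_user(user_id: str) -> str:
    """Hypothetical tool backed by the 'database' above."""
    return USERS[user_id]  # raises KeyError for unknown users


def handle_tools_call(req: dict, handlers: dict[str, Callable[..., Any]]) -> dict:
    """Translate a tools/call request into a local function call."""
    params = req.get("params", {})
    name = params.get("name")
    handler = handlers.get(name)
    if handler is None:
        # Unknown tool: a protocol-level problem, reported as a JSON-RPC error.
        return {"jsonrpc": "2.0", "id": req["id"],
                "error": {"code": INVALID_PARAMS, "message": f"Unknown tool: {name}"}}
    try:
        output = handler(**params.get("arguments", {}))
        content, is_error = [{"type": "text", "text": str(output)}], False
    except Exception as exc:
        # The underlying system failed: report it inside a normal result with
        # isError set, so the LLM can read the failure and adjust its plan.
        # (A stricter server would first validate arguments against inputSchema
        # and answer malformed ones with an Invalid params error instead.)
        content, is_error = [{"type": "text", "text": f"Tool failed: {exc!r}"}], True
    return {"jsonrpc": "2.0", "id": req["id"],
            "result": {"content": content, "isError": is_error}}


handlers = {"lookup_user": lookup_user}
ok = handle_tools_call(
    {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
     "params": {"name": "lookup_user", "arguments": {"user_id": "u1"}}}, handlers)
print(ok["result"])  # {'content': [{'type': 'text', 'text': 'Alice'}], 'isError': False}
```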

7. Complete MCP Server Calling Process

A typical workflow includes:

- Initialization: Client and Server establish a connection and exchange protocol versions and capabilities.

- Capability Discovery: The Client retrieves the list of Tools from the Server via `tools/list`. Where the server offers them, it retrieves Resources via `resources/list`.

- Tool Calling: After the LLM makes a decision, the Client issues a `tools/call` request, and the Server executes the actual operation.

- Result Injection: The Server returns structured results, which the Client provides back to the LLM for final response generation or further planning.

Typical timing diagram for a call protocol:

Below is a simplified timing diagram for a conference room booking case, showing core protocol interaction from initialization to execution:
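The following Python sketch walks through the conference room booking sequence as plain messages. It covers initialization, discovery, the tool call, and result injection. An in-memory "server" answers each request and the function returns a transcript. The `book_meeting_room` tool, room name, time, and version string are hypothetical, and the LLM's decision is hard-coded. A real host would use an MCP SDK and a real transport.

```python
import json
from typing import Optional


class InMemoryServer:
    """Toy MCP-style server exposing one hypothetical booking tool."""

    def __init__(self) -> None:
        self.bookings: list[dict] = []

    def handle(self, msg: dict) -> Optional[dict]:
        if "id" not in msg:
            return None  # notifications such as notifications/initialized
        method, params = msg["method"], msg.get("params", {})
        if method == "initialize":
            result = {"protocolVersion": params["protocolVersion"],
                      "capabilities": {"tools": {}},
                      "serverInfo": {"name": "room-booking", "version": "0.1.0"}}
        elif method == "tools/list":
            result = {"tools": [{
                "name": "book_meeting_room",
                "description": "Book a meeting room for a time slot.",
                "inputSchema": {"type": "object",
                                "properties": {"room": {"type": "string"},
                                               "start": {"type": "string"}},
                                "required": ["room", "start"]},
            }]}
        elif method == "tools/call":
            args = params["arguments"]
            self.bookings.append(args)  # the "actual operation"
            result = {"content": [{"type": "text",
                                   "text": f"Booked {args['room']} at {args['start']}"}],
                      "isError": False}
        else:
            return {"jsonrpc": "2.0", "id": msg["id"],
                    "error": {"code": -32601, "message": "Method not found"}}
        return {"jsonrpc": "2.0", "id": msg["id"], "result": result}


def run_session(server: InMemoryServer) -> list[str]:
    """Drive one booking through the four phases and return a transcript."""
    transcript: list[str] = []

    def send(msg: dict) -> Optional[dict]:
        transcript.append("C->S " + msg["method"])
        reply = server.handle(json.loads(json.dumps(msg)))  # round-trip through JSON text
        if reply is not None:
            transcript.append("S->C " + ("result" if "result" in reply else "error"))
        return reply

    # 1. Initialization: agree on the protocol revision and capabilities.
    send({"jsonrpc": "2.0", "id": 1, "method": "initialize",
          "params": {"protocolVersion": "2025-06-18", "capabilities": {},
                     "clientInfo": {"name": "demo-host", "version": "0.1.0"}}})
    send({"jsonrpc": "2.0", "method": "notifications/initialized"})
    # 2. Capability discovery.
    tools = send({"jsonrpc": "2.0", "id": 2, "method": "tools/list"})["result"]["tools"]
    # 3. Tool calling: an LLM would pick the tool and arguments; here they are fixed.
    reply = send({"jsonrpc": "2.0", "id": 3, "method": "tools/call",
                  "params": {"name": tools[0]["name"],
                             "arguments": {"room": "Room 3A", "start": "2025-01-15T10:00"}}})
    # 4. Result injection: this text would be appended to the LLM's context.
    transcript.append("to LLM: " + reply["result"]["content"][0]["text"])
    return transcript


for line in run_session(InMemoryServer()):
    print(line)
```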

8. MCP vs. Traditional API / Function Calling: Model Interface Layer Differences

Traditional API integration depends on vendor-defined specs and SDKs. MCP abstracts calling behavior into JSON-RPC methods, maintaining a standard interface that is independent of specific SDKs.

- Traditional Function Calling: This is a capability provided by specific model vendors. Developers define functions in the vendor's format, and the model learns to output requests in that format. It is locked to the vendor's proprietary interface.

- MCP Architecture: MCP is a model-agnostic open protocol. It defines universal, standardized formats for capability description and calling. Any AI app supporting MCP (regardless of the underlying model) can interact with any MCP Server. It liberates tool integration from model vendor lock-in.

MCP is better suited for long-term maintenance and ecosystem building in large-scale, multi-model environments.

Use Case Boundaries: If you use a single model with simple, fixed tool requirements, Function Calling may suffice. If you are building a complex Agent system involving multiple models and tools that require long-term scalability, the standardization and decoupling provided by MCP are essential.
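The two layers can coexist because the host, not the MCP Server, adapts one MCP tool definition into whichever vendor function-calling format its model expects. This Python sketch maps a hypothetical `get_time` tool onto the tool shapes documented for OpenAI's Chat Completions API (`type`/`function`/`parameters`) and Anthropic's Messages API (`input_schema`). Vendor formats can change, so treat these field layouts as assumptions to check against current documentation.

```python
def to_openai_tool(mcp_tool: dict) -> dict:
    """Wrap an MCP tool definition in a Chat Completions-style "function" tool."""
    return {"type": "function",
            "function": {"name": mcp_tool["name"],
                         "description": mcp_tool.get("description", ""),
                         "parameters": mcp_tool["inputSchema"]}}


def to_anthropic_tool(mcp_tool: dict) -> dict:
    """Map an MCP tool definition onto a Messages API-style tool."""
    return {"name": mcp_tool["name"],
            "description": mcp_tool.get("description", ""),
            "input_schema": mcp_tool["inputSchema"]}


# One definition, as returned by tools/list (hypothetical tool).
get_time = {
    "name": "get_time",
    "description": "Return the current time in a given IANA time zone.",
    "inputSchema": {"type": "object",
                    "properties": {"tz": {"type": "string"}},
                    "required": ["tz"]},
}

openai_tools = [to_openai_tool(get_time)]
anthropic_tools = [to_anthropic_tool(get_time)]
print(openai_tools[0]["function"]["parameters"] == anthropic_tools[0]["input_schema"])  # True
```

The JSON Schema travels unchanged. Only the thin wrapper differs per vendor, which is the decoupling this section describes.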

9. Limitations and Challenges of MCP

While the MCP Server provides a standardized way for AI Agents to interact with tools, it is not a "magic bullet." Understanding what MCP does not solve and the challenges in real engineering environments is crucial.

1. Capability Conflict and Governance with Multiple MCP Servers

In complex systems, multiple MCP Servers are often connected. Different servers may expose tools with similar semantics or overlapping functions. The MCP protocol handles declaration and calling specifications but does not include:

- Tool priority ranking

- Conflict detection or resolution

- Strategy for optimal tool selection based on context

This means decision-making responsibility falls entirely on the MCP Client or AI Agent layer: the Planner or strategy module must implement its own tool selection logic. MCP is therefore a "capability exposure and communication layer," not an "intelligent scheduling system."
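Because the protocol leaves this to the client, one simple client-side policy is sketched below in Python. Every tool is exposed under a server-prefixed name so nothing is silently shadowed, and bare names offered by several servers are reported as conflicts in a caller-chosen priority order. The `__` separator, the server names, and the priority rule are hypothetical choices, not MCP conventions.

```python
from collections import defaultdict


def merge_tool_lists(servers: dict[str, list[dict]],
                     priority: list[str]) -> tuple[dict[str, dict], dict[str, list[str]]]:
    """Combine tools/list results from several servers (hypothetical client policy).

    Each tool is exposed under a namespaced "<server>__<tool>" name, and bare
    names offered by more than one server are reported as conflicts, listed in
    the caller's priority order. Servers missing from `priority` are ignored.
    """
    catalog: dict[str, dict] = {}
    owners: dict[str, list[str]] = defaultdict(list)
    for server in priority:
        for tool in servers.get(server, []):
            catalog[f"{server}__{tool['name']}"] = tool
            owners[tool["name"]].append(server)
    conflicts = {name: srvs for name, srvs in owners.items() if len(srvs) > 1}
    return catalog, conflicts


servers = {
    "crm": [{"name": "search"}, {"name": "create_lead"}],
    "wiki": [{"name": "search"}],
}
catalog, conflicts = merge_tool_lists(servers, priority=["wiki", "crm"])
print(sorted(catalog))  # ['crm__create_lead', 'crm__search', 'wiki__search']
print(conflicts)        # {'search': ['wiki', 'crm']}
```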

2. Context Pressure from Long-term and Multi-round Calls

In Agent scenarios, tasks involve multiple steps:

- Planning

- Multiple tool calls (`tools/call`)

- Result injection

- Re-reasoning and decision-making

This process continuously appends information to the LLM's context window. It is important to note:

- MCP is not responsible for context pruning, compression, or summarization.

- It does not have built-in memory management or context optimization.

In long tasks, this can lead to:

- Rapidly rising token costs

- Approaching model context limits

- Key information being "drowned out"

Context management remains a challenge for the Agent architecture layer to solve, not the MCP protocol.
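As an example of the agent-layer work this implies, the Python sketch below replaces the oldest tool results with a short stub until an estimated token count fits a budget. It always keeps the first message (assumed to be the system prompt) and the most recent turns. The four-characters-per-token estimate is a rough heuristic, and the whole policy is a hypothetical illustration. Real agents typically use the model's tokenizer and often summarize rather than drop.

```python
def approx_tokens(text: str) -> int:
    """Rough heuristic of about 4 characters per token; use the model's tokenizer in practice."""
    return max(1, len(text) // 4)


def fit_context(messages: list[dict], budget: int, keep_last: int = 2) -> list[dict]:
    """Replace the oldest tool results with a stub until the estimate fits the budget.

    Message 0 (assumed to be the system prompt) and the last `keep_last` messages
    are never touched. The input list is not modified.
    """
    trimmed = list(messages)

    def total() -> int:
        return sum(approx_tokens(m["content"]) for m in trimmed)

    i = 1
    while total() > budget and i < len(trimmed) - keep_last:
        if trimmed[i]["role"] == "tool":
            trimmed[i] = {"role": "tool", "content": "[older tool result omitted]"}
        i += 1
    return trimmed


history = [
    {"role": "system", "content": "You are an agent."},
    {"role": "tool", "content": "x" * 400},       # large, older tool output
    {"role": "assistant", "content": "Next step."},
    {"role": "tool", "content": "y" * 400},       # recent tool output, kept
    {"role": "user", "content": "Continue."},
]
compact = fit_context(history, budget=150)
print(sum(approx_tokens(m["content"]) for m in compact))  # 114
```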

3. Differences in Maturity Across Language Implementations

While the protocol is language-agnostic, maturity varies in practice:

- The JavaScript/Node.js and Python ecosystems are the most mature.

- Implementations for Rust, Go, and Java are still evolving.

- Support for the latest specs may vary between implementations.

Engineering teams must:

- Ensure consistency between implementations and specifications.

- Evaluate the stability and maintenance of MCP Server implementations for production environments.

This is common for any emerging protocol in its early stages.

4. Security and Permissions Rely on External Systems

MCP is designed to be "protocol neutral" and does not include a built-in security system. Specifically:

- MCP defines capability boundaries (Tools / Resources).

- It does not mandate specific authentication, authorization, or auditing mechanisms.

In real-world deployment, security must be implemented via external systems such as:

- OAuth / OIDC

- API Gateways

- Zero Trust architectures

- Enterprise IAM systems

An MCP Server should be viewed as:

A capability service wrapped in a security system, rather than the security control center itself.

This is a key reason why MCP can flexibly adapt to different enterprises and cloud environments, but it also increases the complexity of the system design.

5. Rapid Evolution of the Protocol and Ecosystem

As a new protocol for AI Agents, MCP is evolving quickly:

- Protocol details and primitives are being refined.

- There is a time lag for different implementations to support new features.

- Best practices for advanced use cases are still being established.

MCP should be viewed as a long-term infrastructure protocol rather than a finalized, static standard.
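One concrete place where this evolution shows up is version negotiation during `initialize`. Per the MCP lifecycle rules, the client proposes a protocol revision (a date string). The server echoes it if supported or otherwise offers a revision it does support, and the client should disconnect if it cannot speak the offered revision. The Python sketch below models that rule. The revision lists are examples only, and both lists are assumed to be ordered newest first.

```python
# Example revision lists, newest first; MCP protocol revisions are date strings.
CLIENT_VERSIONS = ["2025-06-18", "2025-03-26", "2024-11-05"]


def server_choose_version(requested: str, server_versions: list[str]) -> str:
    """Echo the client's revision if supported; otherwise offer the server's newest."""
    return requested if requested in server_versions else server_versions[0]


def client_accepts(offered: str, client_versions: list[str]) -> bool:
    """The client continues only if it also speaks the revision the server chose."""
    return offered in client_versions


# A newer client talking to an older server that only knows one revision.
offered = server_choose_version(CLIENT_VERSIONS[0], ["2024-11-05"])
print(offered, client_accepts(offered, CLIENT_VERSIONS))  # 2024-11-05 True
```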

MCP Servers focus on providing a standardized way to discover, call, and combine tools. Understanding these limitations helps developers:

- Define clear responsibility boundaries.

- Avoid over-reliance on the protocol itself.

- Resolve complexity at the appropriate architectural layer.

Conclusion

In practical engineering, MCP acts as a "capability protocol foundation" whose value becomes more apparent as system complexity grows. The MCP Server does not replace traditional APIs or Function Calling. Instead, it provides AI Agents with a standardized, extensible protocol layer that the surrounding infrastructure can secure. This layer addresses the core engineering challenges of scaling AI Agents:

- It reduces integration complexity from N×M to N+M.

- It provides a framework for dynamic discovery and controlled execution.

- It decouples the tool ecosystem from AI models.

This allows intelligent agents to:

- Access capabilities systematically;

- Dynamically discover and call tools/resources;

- Interoperate across models and platforms.
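To make the N×M versus N+M claim concrete, take N AI applications that each need M tools. Suppose, purely as an illustration, N = 4 and M = 10. Bespoke integration needs glue code for every application-tool pair. With MCP, each application implements one client and each tool is wrapped once as a server:

$$
\underbrace{N \times M}_{\text{bespoke}} = 4 \times 10 = 40
\qquad\text{vs.}\qquad
\underbrace{N + M}_{\text{MCP}} = 4 + 10 = 14
$$

The gap widens as either side grows, which is why the protocol layer pays off more in larger systems.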

MCP lays the groundwork for a rich, composable AI tool ecosystem. It marks a shift from "manual integration" to "standardized infrastructure," providing a foundation for building interoperable, scalable, and secure enterprise AI systems.

MCP Frequently Asked Questions (FAQ)

1. Will MCP replace traditional APIs or Function Calling?

No. MCP is not a replacement. It acts at a higher level, allowing the MCP Client to dynamically discover capabilities from an MCP Server through list methods such as `tools/list`.

- For simple, fixed, single-model scenarios, Function Calling is efficient.

- For multi-model, multi-tool, evolving Agent systems, MCP offers better decoupling and maintainability.

In real-world systems, the two often have a complementary relationship.

2. Does MCP require a specific LLM?

No. MCP is model-agnostic. It regulates communication between the Client and Server and doesn't care whether the underlying model comes from OpenAI, Anthropic, Google, or a local open-source model. As long as the AI application can expose the tool context and initiate calls according to the MCP protocol, it can use the MCP Server.

3. Does MCP have built-in security?

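Not as a complete solution. MCP is protocol-neutral. Recent specification revisions define an optional authorization framework for HTTP transports. Otherwise, security (HTTPS, OAuth2, IAM) must be provided by the deployment environment and external systems.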

4. How are tool conflicts handled with multiple MCP Servers?

MCP does not resolve conflicts. If multiple servers offer similar tools, the responsibility for tool selection and priority lies with the Client or the Agent's Planner layer.

5. Does MCP cause context window bloat?

It can, but this is an inherent challenge of LLM Agents. MCP does not manage context; summarization and memory management are the responsibility of the Agent architecture.

6. Is MCP suitable for high-concurrency enterprise use?

Yes, provided the implementation is robust. High concurrency depends on server quality, multi-instance deployment, and cloud-native architectures like Kubernetes.

7. Is the cost of adopting MCP high?

The coding is relatively simple, but it requires an architectural understanding of Agent systems and tool governance. It is best suited for teams building mid-to-large scale AI applications.

8. Is MCP "stable"?

The core design is stable, but the ecosystem is still evolving. It is a forward-looking infrastructure protocol rather than a "one-and-done" solution.

9. Is MCP suitable for individual developers?

Yes, if there is a need for scalability. For very simple projects, it might be "overkill," but it prevents high refactoring costs as a system grows.

10. What problem does MCP solve best?

It excels at standardizing access to multiple tools/services, decoupling capability discovery from execution, and enabling capability reuse across different models and teams.

MCP Article Series:

- Comprehensive Analysis of MCP Server: The Context and Tool Communication Hub in the AI Agent Era

- What Key Problems Does MCP Server Solve? Why AI Agents Need It

- MCP Server Architecture and Working Principles: From Protocol to Execution Flow

- MCP Server Practical Guide: Building, Testing, and Deploying from 0 to 1

- MCP Server Application Scenarios Evaluation and Technical Selection Guide

About the Author

This content is compiled and published by the NavGood Content Editorial Team. NavGood is a platform focused on AI tools and application ecosystems, tracking the development and implementation of AI Agents, automated workflows, and Generative AI.

Disclaimer: This article represents the author's personal understanding and experience. It does not represent the official position of any framework or company and does not constitute commercial or investment advice.
