fal.ai

Overview of fal.ai

What is fal.ai?

fal.ai is a generative media platform for developers that offers a wide range of AI models for image, video, and audio generation. It positions itself as an easy, cost-effective way to integrate generative AI into applications.

Key Features:

- Extensive Model Gallery: Access over 600 production-ready image, video, audio, and 3D models.

- Serverless GPUs: Run inference at lightning speed with fal's globally distributed serverless engine. No GPU configuration or autoscaling setup required.

- Unified API and SDKs: Use a simple API and SDKs to call hundreds of open models or your own LoRAs in minutes.

- Dedicated Clusters: Spin up dedicated compute to fine-tune, train, or run custom models with guaranteed performance.

- Fastest Inference Engine: fal describes its fal Inference Engine™ as up to 10x faster for diffusion model inference.

How to use fal.ai?

- Explore Models: Choose from a rich library of models for image, video, voice, and code generation.

- Call API: Access the models using a simple API. No fine-tuning or setup needed.

- Deploy Models: Deploy private or fine-tuned models with one click.

- Utilize Serverless GPUs: Accelerate your workloads with fal Inference Engine.
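The "Explore Models" and "Call API" steps can be sketched as choosing an endpoint id and pairing it with an input payload. The sketch below is illustrative only: `ModelRequest` and `buildRequest` are hypothetical names, the payload shape is modeled on the `fal.subscribe` call shown in this document, and the "owner/model" id format is an assumption based on the id `fal-ai/fast-sdxl`.

```typescript
// Arguments for a subscribe-style call, modeled on the fal.subscribe example
// in this document. The type and helper names are hypothetical.
interface ModelRequest {
  endpoint: string;
  options: { input: { prompt: string }; logs: boolean };
}

// Pair a model endpoint id with a prompt.
// Assumption: endpoint ids look like "owner/model", e.g. "fal-ai/fast-sdxl".
function buildRequest(endpoint: string, prompt: string): ModelRequest {
  if (!/^[\w.-]+\/[\w./-]+$/.test(endpoint)) {
    throw new Error(`Unexpected endpoint id: ${endpoint}`);
  }
  const trimmed = prompt.trim();
  if (trimmed === "") {
    throw new Error("Prompt must not be empty");
  }
  return { endpoint, options: { input: { prompt: trimmed }, logs: true } };
}

const request = buildRequest("fal-ai/fast-sdxl", "photo of a cat wearing a kimono");
console.log(JSON.stringify(request));
```

With the real client, these values would be passed along as `fal.subscribe(request.endpoint, request.options)`; switching models then only means changing the endpoint string and, where a model expects different inputs, the payload.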

Why choose fal.ai?

- Speed: Fastest inference engine for diffusion models.

- Scalability: Scale from prototype to 100M+ daily inference calls.

- Ease of Use: Unified API and SDKs for easy integration.

- Flexibility: Deploy private or fine-tuned models with one click.

- Enterprise-Grade: SOC 2 compliant and ready for enterprise procurement processes.
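To put the "100M+ daily inference calls" figure from the Scalability point in perspective, it helps to convert it to an average per-second rate. This is plain arithmetic rather than a statement about how fal provisions capacity; the function name is illustrative.

```typescript
const SECONDS_PER_DAY = 24 * 60 * 60; // 86,400 seconds

// Average requests per second implied by a daily call volume.
function averageRequestsPerSecond(callsPerDay: number): number {
  return callsPerDay / SECONDS_PER_DAY;
}

const avgRps = averageRequestsPerSecond(100_000_000);
console.log(avgRps.toFixed(0)); // prints "1157"
```

This is an average over the whole day; real traffic is rarely uniform, so peak rates would typically sit above it.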

Where can I use fal.ai?

fal.ai is used by developers and leading companies to power AI features in various applications, including:

- Image and Video Search: Used by Perplexity to scale generative media efforts.

- Text-to-Speech Infrastructure: Used by PlayAI to provide near-instant voice responses.

- Image and Video Generation Bots: Used by Quora to power Poe's official bots.

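The snippet below calls the `fal-ai/fast-sdxl` model through the JavaScript client:

```js
import { fal } from "@fal-ai/client";

// Submit the request and wait for the result, streaming logs while it runs.
const result = await fal.subscribe("fal-ai/fast-sdxl", {
  input: {
    prompt: "photo of a cat wearing a kimono"
  },
  logs: true,
  onQueueUpdate: (update) => {
    if (update.status === "IN_PROGRESS") {
      // Log only the message; passing console.log directly to forEach
      // would also print the index and the whole array.
      update.logs.map((log) => log.message).forEach((message) => console.log(message));
    }
  },
});
```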
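The log handling inside `onQueueUpdate` can also be pulled out into a small pure function, which makes it easier to test without calling the service. This is a minimal sketch: the `LogEntry` and `QueueUpdate` shapes are assumptions modeled on the fields used in the snippet above, not the client's exported types, and `progressMessages` is a hypothetical helper.

```typescript
// Minimal shapes modeled on the fields used by the onQueueUpdate callback.
// Assumptions for illustration, not the client's exported types.
interface LogEntry {
  message: string;
}
interface QueueUpdate {
  status: string;
  logs?: LogEntry[];
}

// Return the log messages of an in-progress update, or an empty list
// for any other status or when no logs are attached.
function progressMessages(update: QueueUpdate): string[] {
  if (update.status !== "IN_PROGRESS") return [];
  return (update.logs ?? []).map((log) => log.message);
}

const sample: QueueUpdate = {
  status: "IN_PROGRESS",
  logs: [{ message: "step 1/4" }, { message: "step 2/4" }],
};
for (const message of progressMessages(sample)) {
  console.log(message);
}
```

In the callback, this could be used as `onQueueUpdate: (update) => progressMessages(update).forEach((m) => console.log(m))`.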

Best Alternative Tools to "fal.ai"

Cloudflare Workers AI allows you to run serverless AI inference tasks on pre-trained machine learning models across Cloudflare's global network, offering a variety of models and seamless integration with other Cloudflare services.

NVIDIA NIM APIs provide optimized inference and deployment of leading AI models. Build enterprise generative AI applications with serverless APIs or self-host on your own GPU infrastructure.

Cerebrium is a serverless AI infrastructure platform simplifying the deployment of real-time AI applications with low latency, zero DevOps, and per-second billing. Deploy LLMs and vision models globally.

Friendli Inference is an LLM inference engine optimized for speed and cost-effectiveness. It claims to cut GPU costs by 50-90% while delivering high throughput and low latency.

SiliconFlow is an AI platform for developers. Deploy, fine-tune, and run 200+ optimized LLMs and multimodal models with simple APIs.

Modal: Serverless platform for AI and data teams. Run CPU, GPU, and data-intensive compute at scale with your own code.

UltiHash: Lightning-fast, S3-compatible object storage built for AI, reducing storage costs without compromising speed for inference, training, and RAG.

The AI Engineer Pack by ElevenLabs is a starter pack for developers. It offers exclusive access to premium AI tools and services like ElevenLabs, Mistral, and Perplexity.

Bria.ai offers Gen AI Developer Toolkits for enterprise solutions. Access fully-licensed datasets, source-available models, and APIs to create tailored generative AI solutions for image generation and editing.

GPUX is a serverless GPU inference platform that enables 1-second cold starts for AI models like StableDiffusionXL, ESRGAN, and AlpacaLLM with optimized performance and P2P capabilities.

Synexa is a serverless AI API platform that simplifies AI deployment. It runs AI models with a single line of code and aims to be fast, stable, and developer-friendly.

Runpod is an all-in-one AI cloud platform that simplifies building and deploying AI models. Train, fine-tune, and deploy AI effortlessly with powerful compute and autoscaling.

Scade.pro is a comprehensive no-code AI platform that enables users to build AI features, automate workflows, and integrate 1500+ AI models without technical skills.

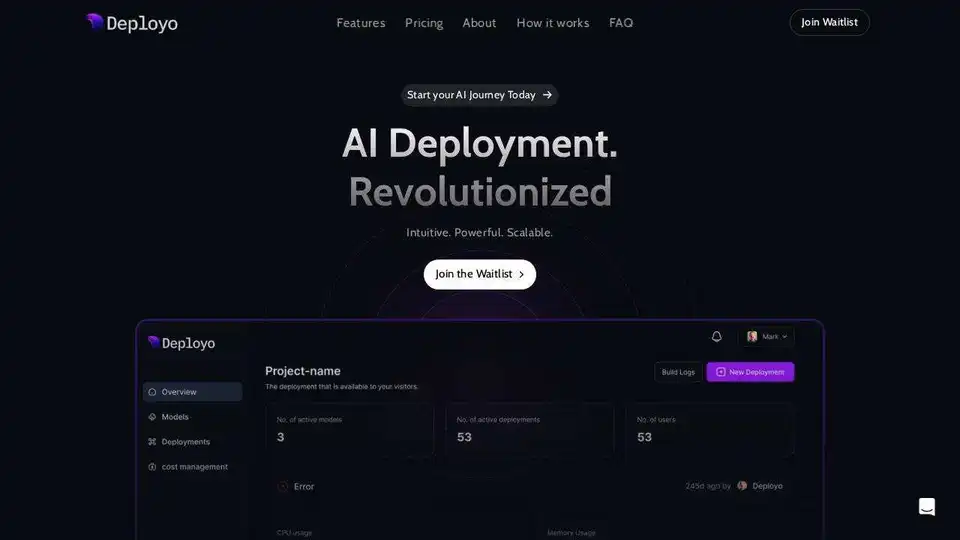

Deployo simplifies AI model deployment, turning models into production-ready applications in minutes. It offers cloud-agnostic, secure, and scalable AI infrastructure for machine learning workflows.