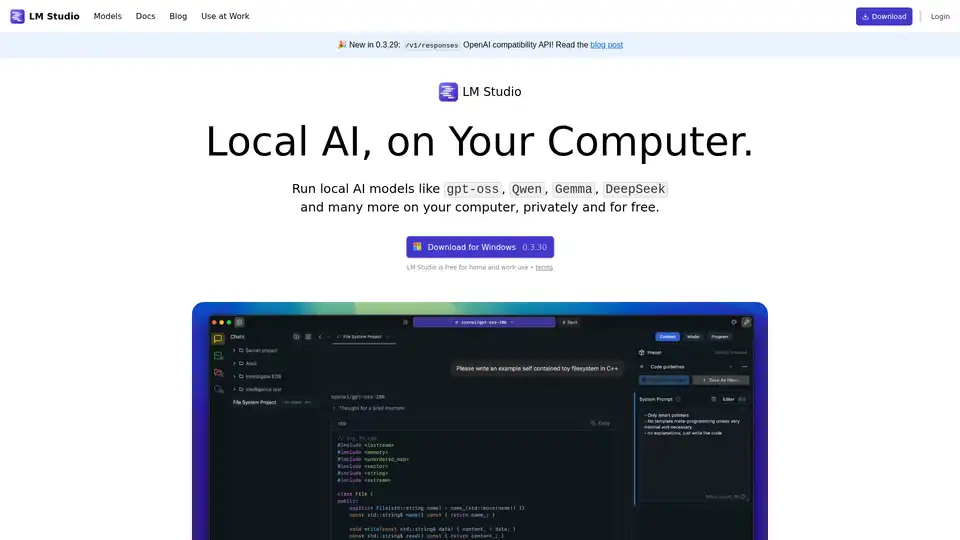

LM Studio

Overview of LM Studio

LM Studio: Your Local AI Powerhouse

What is LM Studio?

LM Studio is a desktop application that lets you run AI models, such as large language models (LLMs), locally on your computer, privately and for free. It supports a wide range of models, including gpt-oss, Qwen, Gemma, and DeepSeek, so you can experiment with different AI capabilities without relying on cloud services.

How does LM Studio work?

LM Studio provides a user-friendly interface for downloading, configuring, and running AI models on your local machine. It handles the complexities of setting up the necessary software and dependencies, allowing you to focus on exploring the capabilities of the models themselves.

- Download and Installation: Download the LM Studio installer for your operating system (macOS, Windows, or Linux) and run it. Installation is straightforward.

- Model Selection: LM Studio integrates with the Hugging Face Hub, allowing you to download various models. Simply search for the model you need, and LM Studio will handle the download and setup.

- Inference: Once the model is downloaded, you can use LM Studio's interface to interact with it. This typically involves providing input text and receiving output generated by the model.

Key Features of LM Studio

- Local Execution: Run AI models directly on your computer, keeping your data private. Once a model is downloaded, no internet connection is needed to use it.

- Wide Model Support: Compatible with various popular AI models, including gpt-oss, Llama, Gemma, Qwen, and DeepSeek.

- Free to Use: LM Studio is free for both home and work use, making it accessible to a broad audience.

- Developer Resources: Offers JS and Python SDKs for seamless integration into your projects.

- OpenAI Compatibility API: LM Studio's OpenAI-compatible endpoints, such as /v1/responses, let you use local models with tools that expect the OpenAI API.
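
As a rough illustration of the OpenAI compatibility API, the sketch below builds a request to the /v1/responses endpoint using only the Python standard library and extracts the generated text from the reply. It assumes that LM Studio's local server is running at its default address (http://localhost:1234) and that replies follow the OpenAI Responses format: an `output` list of message items whose `content` holds `output_text` parts. The helper names are hypothetical, and the model identifier you pass must be one available in your LM Studio installation.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default address and port.
BASE_URL = "http://localhost:1234/v1"


def build_responses_request(prompt: str, model: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a POST request for the /v1/responses endpoint."""
    body = json.dumps({"model": model, "input": prompt}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/responses",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_output_text(reply: dict) -> str:
    """Concatenate the output_text parts of a Responses-style reply."""
    parts = []
    for item in reply.get("output", []):
        if item.get("type") != "message":
            continue  # skip non-message items such as reasoning or tool calls
        for content in item.get("content", []):
            if content.get("type") == "output_text":
                parts.append(content.get("text", ""))
    return "".join(parts)


def ask(prompt: str, model: str) -> str:
    """Send the request to the local server and return the generated text."""
    request = build_responses_request(prompt, model)
    with urllib.request.urlopen(request, timeout=120) as response:
        return extract_output_text(json.load(response))
```

With the server running and a model available, `ask("Explain what a context window is in one sentence.", "<your-model-id>")` should return the model's answer as a plain string. Keeping request building and reply parsing separate from the network call makes both easy to check without a running server.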

Why choose LM Studio?

- Privacy: Keep your data and AI processing within your local environment.

- Cost-Effective: Avoid the costs associated with cloud-based AI services.

- Flexibility: Experiment with different models and configurations without limitations.

- Offline Access: Use AI models even without an internet connection.

Who is LM Studio for?

- Developers: Integrate AI capabilities into your applications using the JS and Python SDKs.

- Researchers: Explore and experiment with different AI models locally.

- AI Enthusiasts: Learn about and use AI technology without relying on cloud services.

Developer Resources

LM Studio provides developer resources to help you integrate AI capabilities into your projects:

- JS SDK: The JS SDK allows you to use LM Studio models within JavaScript applications. Documentation is available for lmstudio-js.

- Python SDK: The Python SDK enables you to use LM Studio models within Python applications. Documentation is available for lmstudio-python.

- LM Studio CLI (lms): A command-line interface for interacting with LM Studio models.

- OpenAI compatibility API: Use tools designed for the OpenAI API with LM Studio.
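
When integrating through the OpenAI compatibility API, a common first step is to discover which model identifiers the server will accept. The sketch below assumes the default local address (http://localhost:1234) and an OpenAI-style /v1/models reply of the form {"object": "list", "data": [{"id": ...}, ...]}. It uses only the standard library, not the JS or Python SDKs, and the helper names fetch_model_ids and parse_model_ids are hypothetical.

```python
import json
import urllib.request

# Assumption: default LM Studio server address; change it if you use another port.
BASE_URL = "http://localhost:1234/v1"


def parse_model_ids(payload: dict) -> list[str]:
    """Return the model identifiers from an OpenAI-style /v1/models reply."""
    return [entry["id"] for entry in payload.get("data", []) if "id" in entry]


def fetch_model_ids(base_url: str = BASE_URL) -> list[str]:
    """Query the local server's /v1/models endpoint (the server must be running)."""
    with urllib.request.urlopen(f"{base_url}/models", timeout=10) as response:
        return parse_model_ids(json.load(response))
```

Calling `fetch_model_ids()` while the server is running returns the identifiers as a list of strings, and any of them can be used as the `model` field in other OpenAI-style requests.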

Practical Applications of LM Studio

LM Studio can be used for a variety of applications, including:

- Natural Language Processing (NLP): Text generation, sentiment analysis, and language translation.

- Code Generation: Automate code creation and completion.

- Data Analysis: Extract insights from data using AI models.

- Education and Research: Explore and experiment with AI technologies.
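
As one concrete example of the NLP use case, sentiment analysis can be framed as a prompt to a locally loaded chat model. The sketch below is an illustration built on assumptions and is not a built-in LM Studio feature. It builds an OpenAI-style /v1/chat/completions body that asks for a one-word label, then normalizes the reply (read from choices[0].message.content) to positive, negative, or neutral, and falls back to neutral when the reply does not match. The default server address, label set, prompt wording, and helper names are all hypothetical.

```python
import json
import urllib.request

BASE_URL = "http://localhost:1234/v1"  # assumed default LM Studio server address
LABELS = ("positive", "negative", "neutral")  # hypothetical label set


def build_sentiment_body(text: str, model: str) -> dict:
    """Build an OpenAI-style chat completions body asking for a one-word label."""
    return {
        "model": model,
        "messages": [
            {
                "role": "system",
                "content": "Classify the sentiment of the user's text. "
                "Answer with exactly one word: positive, negative, or neutral.",
            },
            {"role": "user", "content": text},
        ],
        "temperature": 0,  # low temperature for more consistent labels
    }


def parse_sentiment(reply: dict) -> str:
    """Map the first choice's message content onto one of LABELS."""
    try:
        content = reply["choices"][0]["message"]["content"]
    except (KeyError, IndexError, TypeError):
        return "neutral"
    if not isinstance(content, str):
        return "neutral"
    word = content.strip().lower().strip(".!\"'")
    return word if word in LABELS else "neutral"


def classify(text: str, model: str) -> str:
    """Send the request to the local server and return the normalized label."""
    request = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(build_sentiment_body(text, model)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        return parse_sentiment(json.load(response))
```

Normalizing the reply defensively matters because local models do not always follow the one-word instruction exactly. Some add punctuation or extra text, and this sketch maps those replies to a fixed set of labels.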

Conclusion

LM Studio empowers you to harness the power of AI locally on your computer. With its support for a wide range of models, developer resources, and user-friendly interface, LM Studio is an excellent choice for developers, researchers, and anyone interested in exploring the world of AI.

What is the best way to run AI models locally? LM Studio provides a straightforward and efficient solution.

Best Alternative Tools to "LM Studio"

Inworld TTS offers state-of-the-art AI text-to-speech for consumer applications with lower latency, more control, and flexible deployment options. Explore diverse AI voices and clone your own.

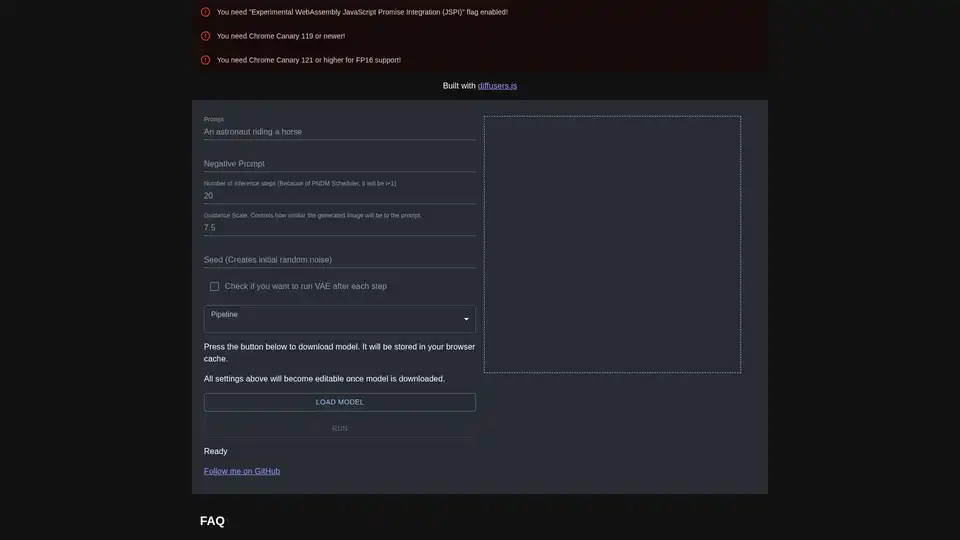

diffusers.js is a JavaScript library enabling Stable Diffusion AI image generation in the browser via WebGPU. Download models, input prompts, and create stunning visuals directly in Chrome Canary with customizable settings like guidance scale and inference steps.

Cerebrium is a serverless AI infrastructure platform simplifying the deployment of real-time AI applications with low latency, zero DevOps, and per-second billing. Deploy LLMs and vision models globally.

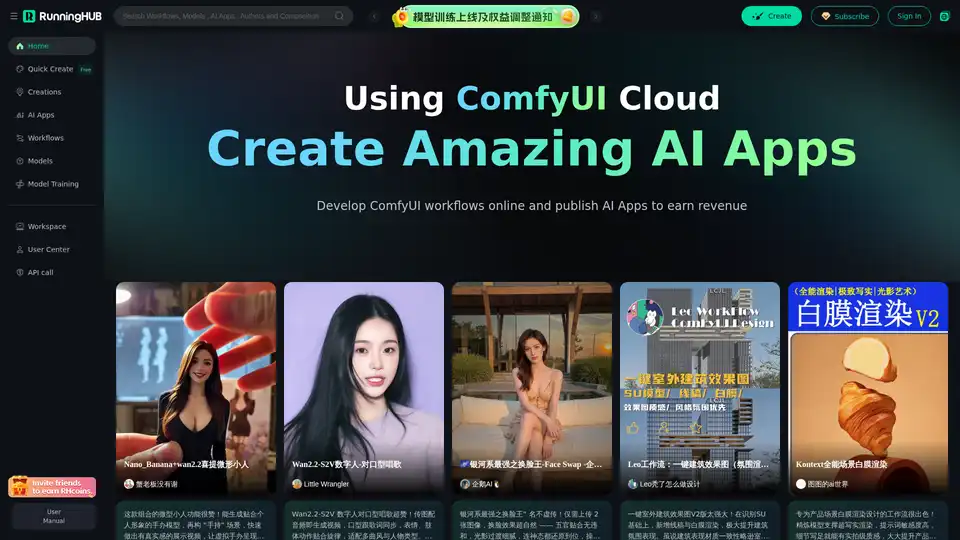

A highly reliable cloud-based ComfyUI service: edit and run ComfyUI workflows online and publish them as AI apps to earn revenue, with hundreds of new AI apps added daily.

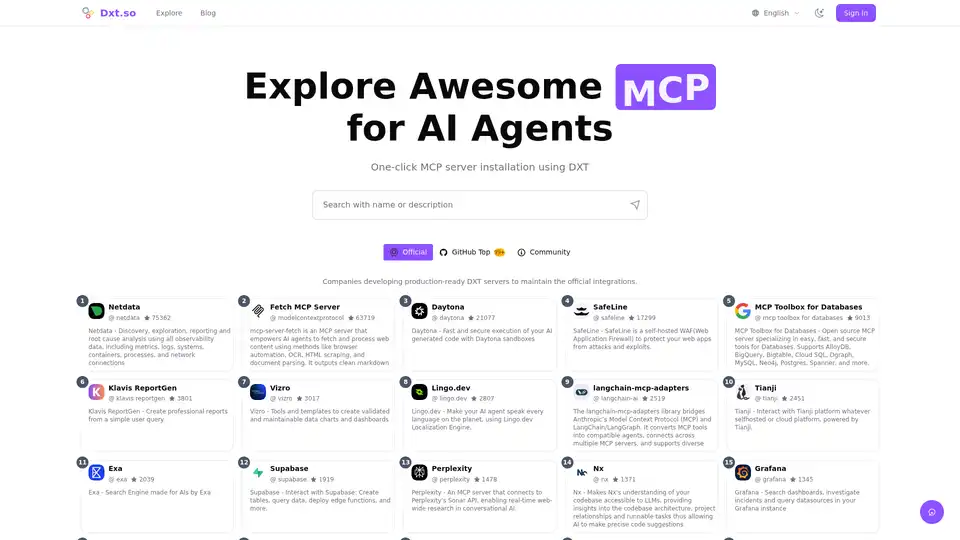

DXT Explorer is the leading platform to find and install DXT/MCP extensions for AI agents. Explore a curated collection of tools to extend your AI's capabilities.

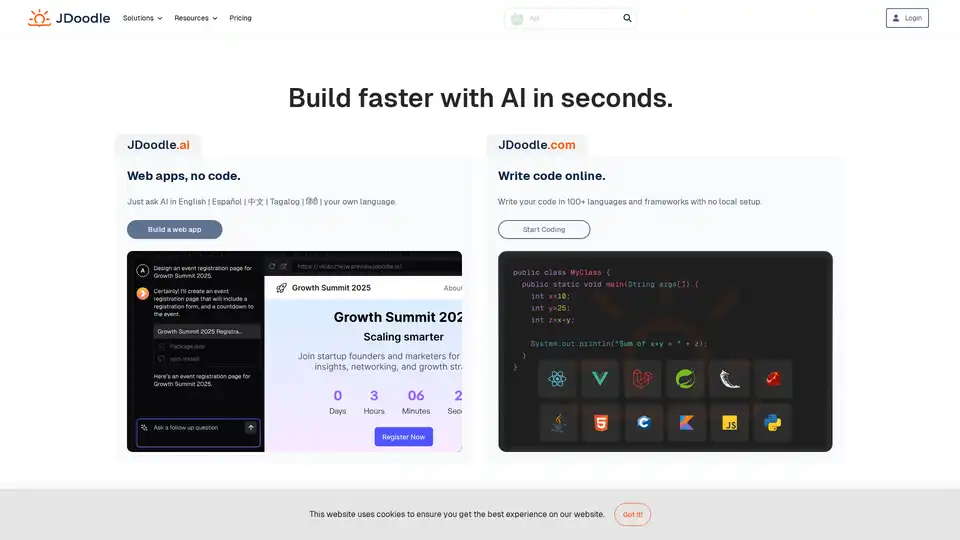

JDoodle is an AI-powered cloud-based online coding platform for learning, teaching, and compiling code in 96+ programming languages like Java, Python, PHP, C, and C++. Ideal for educators, developers, and students seeking seamless code execution without setup.

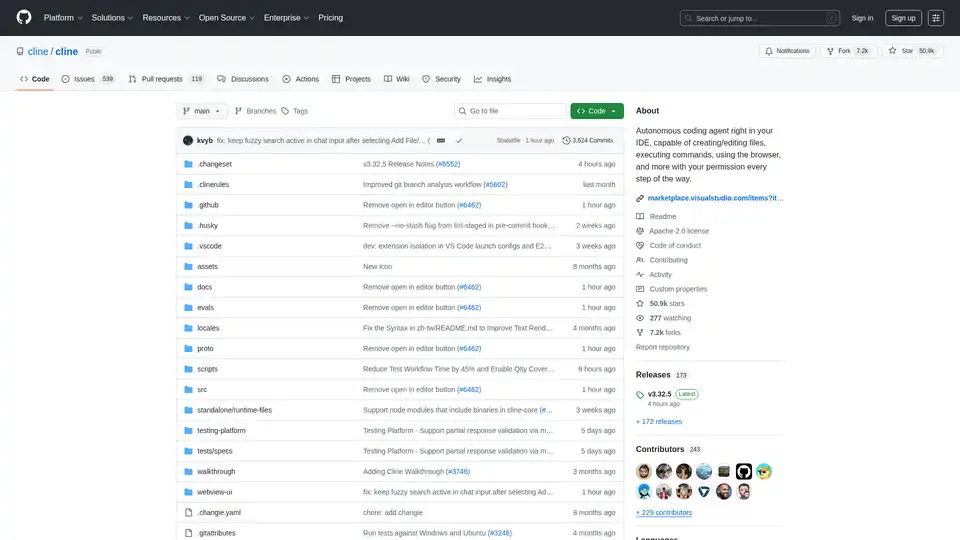

Cline is an autonomous AI coding agent for VS Code that creates/edits files, executes commands, uses the browser, and more with your permission.

Gru.ai is an advanced AI developer tool for coding, testing, and debugging. It offers features like unit test generation, Android environments for agents, and an open-source sandbox called gbox to boost software development efficiency.

Float16.Cloud provides serverless GPUs for fast AI development. Run, train, and scale AI models instantly with no setup. Features H100 GPUs, per-second billing, and Python execution.

Essential is an open-source macOS app that acts as an AI co-pilot for your screen, helping developers fix errors instantly and remember key workflows with summaries and screenshots. No data leaves your device.

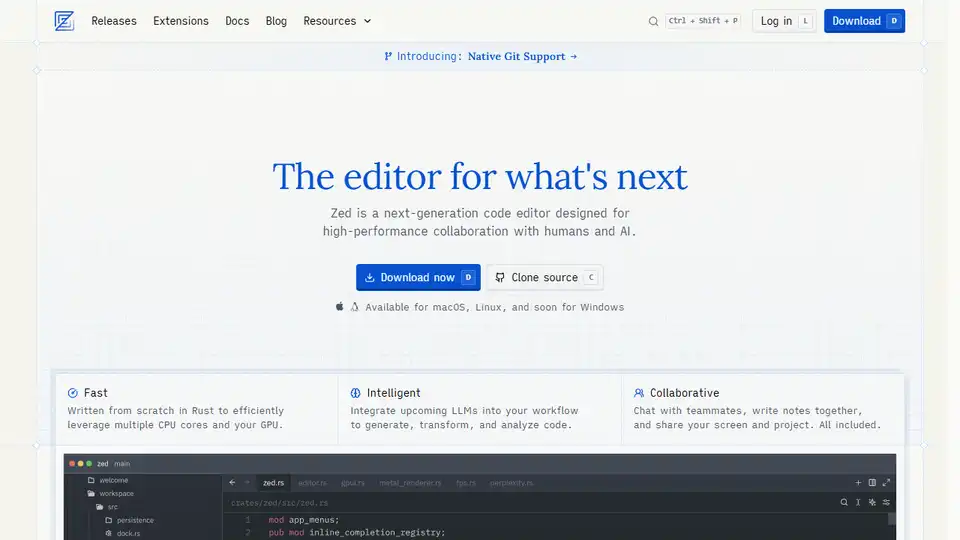

Zed is a high-performance code editor built in Rust, designed for collaboration with humans and AI. Features include AI-powered agentic editing, native Git support, and remote development.

Alter is a macOS AI assistant that integrates with your apps and automates tasks using voice commands and AI. It understands your workflow and prioritizes privacy with encrypted, local data processing.

Experiment with AI models locally with zero technical setup using local.ai, a free and open-source native app designed for offline AI inferencing. No GPU required!

Enclave AI is a privacy-focused AI assistant for iOS and macOS that runs completely offline. It offers local LLM processing, secure conversations, voice chat, and document interaction without needing an internet connection.