Local AI

Overview of Local AI

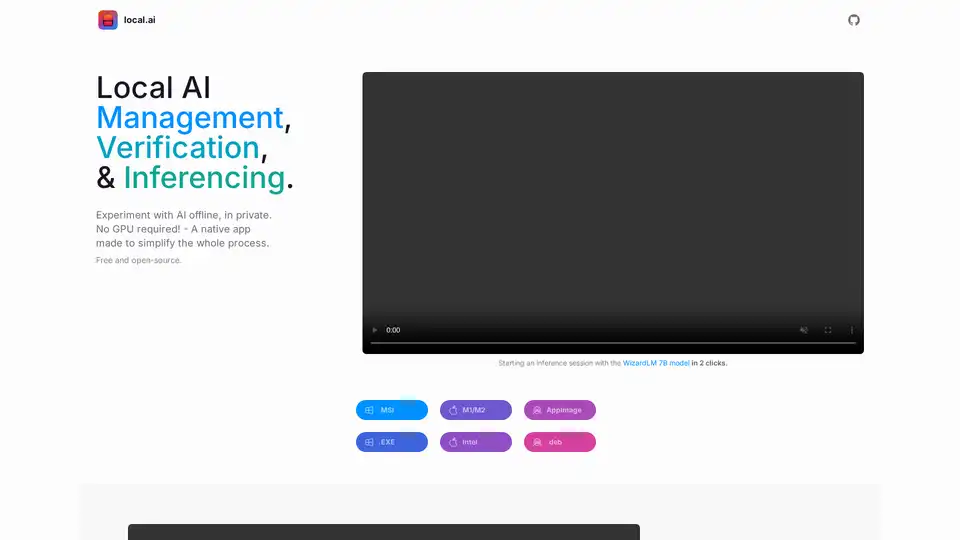

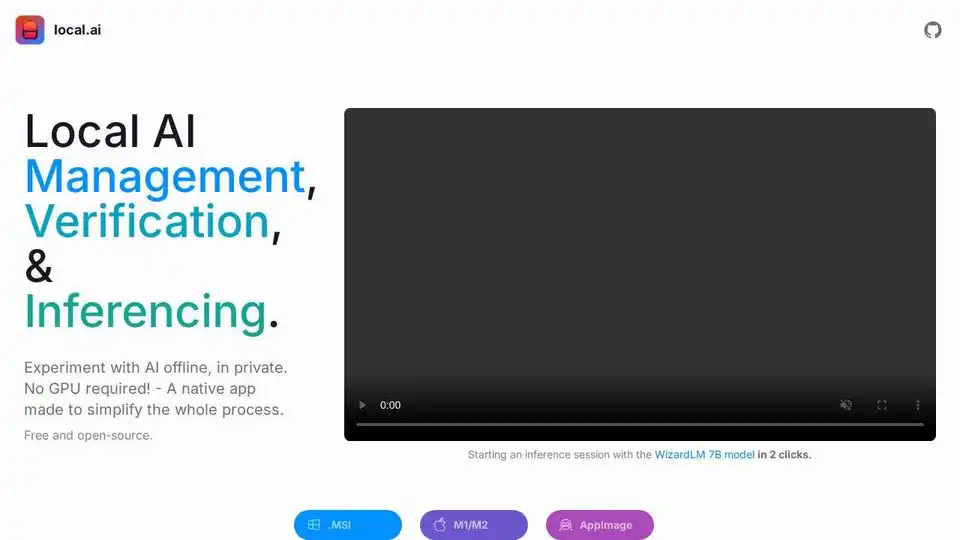

Local AI: Your Private AI Playground

What is Local AI?

Local AI is a free and open-source native application that simplifies experimenting with AI models locally. It runs inference offline and in private, and it does not require a GPU.

Key Features of Local AI:

- CPU Inferencing: Adapts to the number of available threads and supports GGML quantization (q4, q5_1, q8, f16).

- Model Management: Centralized location to keep track of AI models, with resumable downloads and usage-based sorting.

- Digest Verification: Checks the integrity of downloaded models by computing BLAKE3 and SHA256 digests.

- Inferencing Server: Start a local streaming server for AI inferencing in just a few clicks.
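To give a sense of why quantization matters for CPU-only inference, the sketch below estimates weight storage at the precisions listed above (4-bit, 5-bit, 8-bit, and 16-bit float). The 7-billion-parameter model size is a hypothetical example, and the figures use nominal bit widths only. Real GGML files are somewhat larger because quantized formats also store per-block scale factors, so treat the results as rough lower bounds rather than measurements from Local AI.

```python
# Rough weight-storage estimate per precision.
# Assumption: nominal bits per weight only; per-block scale overhead is ignored.
NOMINAL_BITS = {"q4": 4, "q5_1": 5, "q8": 8, "f16": 16}


def estimate_gib(num_params: int, bits_per_weight: int) -> float:
    """Return the approximate weight storage in GiB."""
    return num_params * bits_per_weight / 8 / 2**30


if __name__ == "__main__":
    params = 7_000_000_000  # hypothetical 7B-parameter model
    for name, bits in NOMINAL_BITS.items():
        print(f"{name}: ~{estimate_gib(params, bits):.1f} GiB")
```

The 4-bit estimate is a quarter of the f16 estimate, which is the main reason quantized models fit in ordinary system memory.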

How does Local AI work?

Local AI leverages a Rust backend for memory efficiency and a compact footprint (under 10MB on Mac M2, Windows, and Linux .deb). The application provides a user-friendly interface for:

- Downloading and managing AI models from various sources.

- Verifying the integrity of downloaded models using cryptographic digests.

- Running inference sessions on the CPU without requiring a GPU.

- Starting a local streaming server for AI inferencing.
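Resumable downloads are commonly built on HTTP range requests. The following is a minimal sketch of that general technique using Python's standard library, not Local AI's actual Rust implementation. The function names `resume_headers` and `download` are hypothetical, and the sketch assumes the server supports the `Range` header.

```python
import os
import urllib.request


def resume_headers(partial_path: str) -> dict:
    """Build a Range header that asks only for the bytes not yet on disk."""
    have = os.path.getsize(partial_path) if os.path.exists(partial_path) else 0
    return {"Range": f"bytes={have}-"} if have else {}


def download(url: str, dest: str, chunk_size: int = 1 << 20) -> None:
    """Download url to dest, continuing from a partial file when possible."""
    req = urllib.request.Request(url, headers=resume_headers(dest))
    with urllib.request.urlopen(req) as resp:
        # 206 means the server honored the range; 200 means it sent the whole file.
        mode = "ab" if resp.status == 206 else "wb"
        with open(dest, mode) as out:
            while chunk := resp.read(chunk_size):
                out.write(chunk)
```

If the file on disk is already complete, a server will typically answer with status 416, which `urlopen` raises as an `HTTPError`. A fuller version would catch that case and treat it as success.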

How to use Local AI?

Using Local AI is designed to be straightforward:

- Download: Download and install the application for your operating system: Windows (.MSI, .EXE), macOS (M1/M2, Intel), or Linux (AppImage, .deb).

- Model Management: Select a directory to store your AI models. Local AI will track and manage the models in that location.

- Inference: Load a model and start an inference session with just a few clicks. You can use the built-in quick inference UI or start a streaming server for more advanced applications.

- Digest Verification: Verify the integrity of your downloaded models by computing their BLAKE3 or SHA256 digests and comparing them with the published values.
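The same integrity check can be reproduced outside the app. The sketch below computes a SHA256 digest with Python's standard `hashlib` module and compares it with a published value. The helper names are hypothetical. BLAKE3 is not part of the Python standard library, so it is left out here; a third-party package would be needed for it.

```python
import hashlib


def sha256_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so a large model never has to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches(path: str, expected_hex: str) -> bool:
    """Compare the computed digest with the published one, ignoring case."""
    return sha256_file(path) == expected_hex.strip().lower()
```

A mismatch usually points to a corrupted or incomplete download, in which case the model file should be fetched again before use.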

Who is Local AI for?

Local AI is ideal for:

- AI Enthusiasts: Individuals interested in experimenting with AI models without the need for expensive hardware or complex setups.

- Privacy-Conscious Users: Those who want to run AI inferences offline and in private, without sending data to external servers.

- Developers: Those who need a simple and efficient way to test and deploy AI models locally.

Why choose Local AI?

- Ease of Use: Simplifies the process of experimenting with AI models.

- Privacy: Runs AI inferences offline and in private.

- Efficiency: Uses a Rust backend for memory efficiency and a compact footprint.

- Versatility: Supports a variety of AI models and applications.

Upcoming Features

The Local AI team is actively working on new features, including:

- GPU Inferencing

- Parallel sessions

- Nested directory for model management

- Custom sorting and searching

- Model explorer and search

- Audio/image inferencing server endpoints

In conclusion, Local AI is a useful tool for anyone who wants to experiment with AI models locally. It combines a user-friendly interface with model management, digest verification, and a local inferencing server, with a focus on privacy and efficiency.

Best Alternative Tools to "Local AI"

Experiment with AI models locally with zero technical setup using local.ai, a free and open-source native app designed for offline AI inferencing. No GPU required!

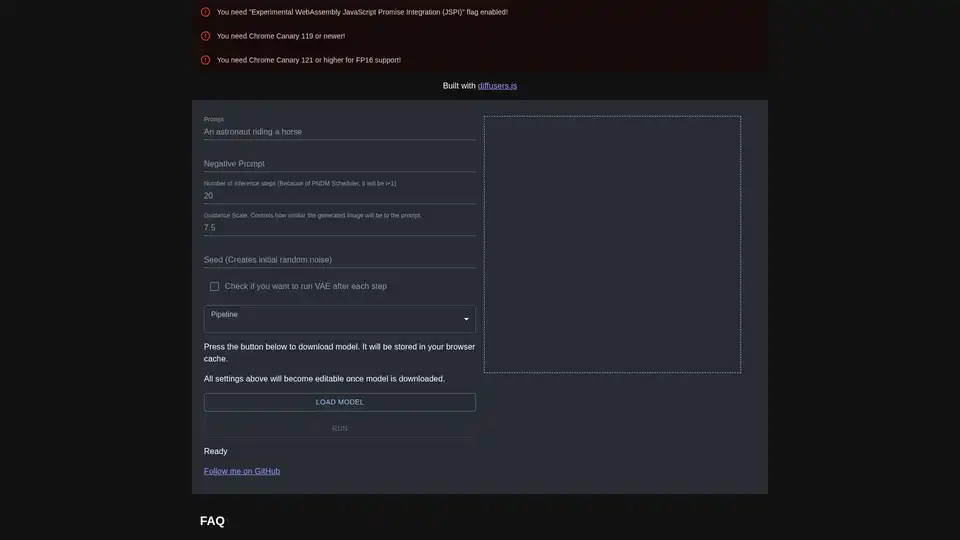

diffusers.js is a JavaScript library enabling Stable Diffusion AI image generation in the browser via WebGPU. Download models, input prompts, and create stunning visuals directly in Chrome Canary with customizable settings like guidance scale and inference steps.

Sagify is an open-source Python tool that streamlines machine learning pipelines on AWS SageMaker, offering a unified LLM Gateway for seamless integration of proprietary and open-source large language models to boost productivity.

Explore Stable Diffusion, an open-source AI image generator for creating realistic images from text prompts. Access via Stablediffusionai.ai or local install for art, design, and creative projects with high customization.

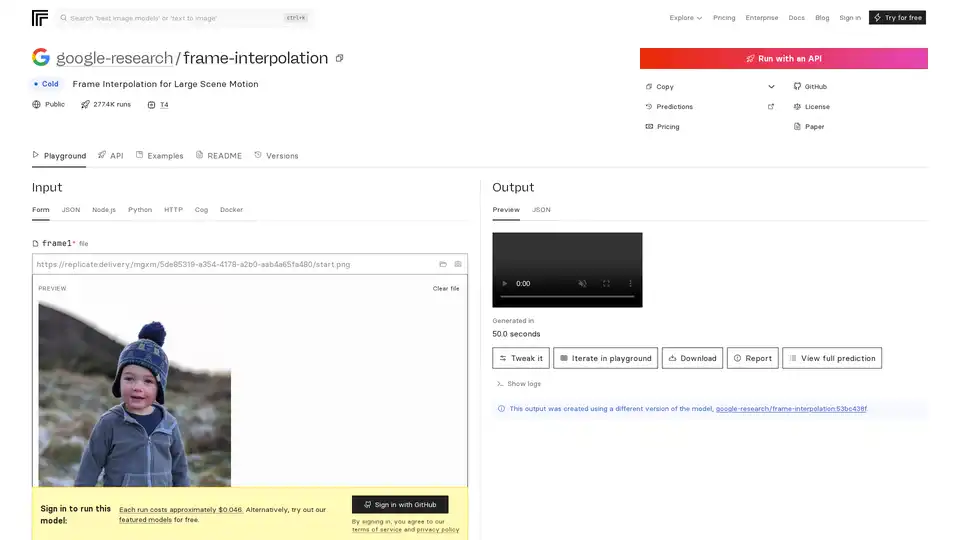

FILM is Google's advanced AI model for frame interpolation, enabling smooth video generation from two input frames even with large scene motion. Achieve state-of-the-art results without extra networks like optical flow.

Online AI manga translator with OCR for vertical/horizontal text. Batch processing and layout-preserving typesetting for manga and doujin.

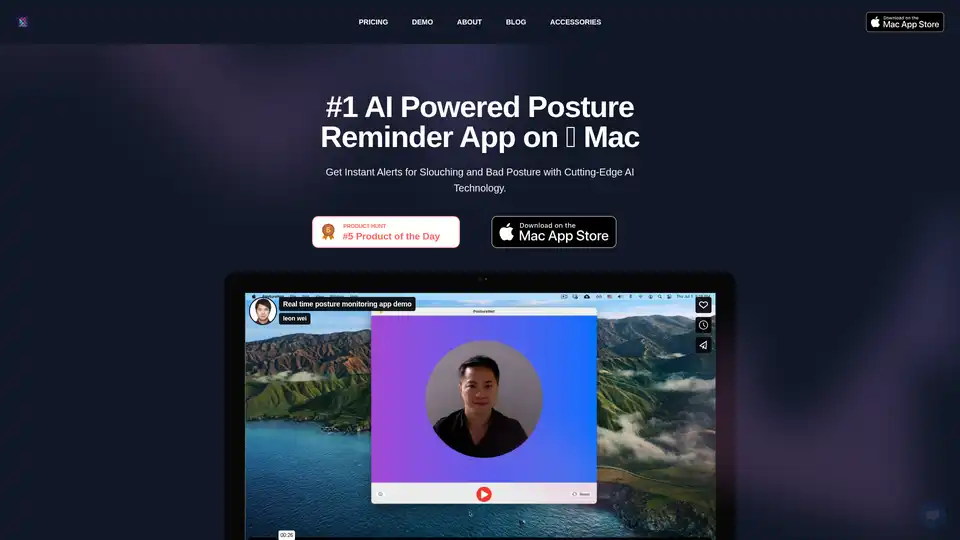

Enhance your workday with the AI Posture Reminder App on Mac. It tracks posture using advanced AI, sends real-time slouch alerts, and promotes better health—all while prioritizing privacy with on-device processing.

NativeMind is an open-source Chrome extension that runs local LLMs through runtimes like Ollama for a fully offline, private ChatGPT alternative. Features include context-aware chat, agent mode, PDF analysis, writing tools, and translation, all 100% on-device with no cloud dependency.

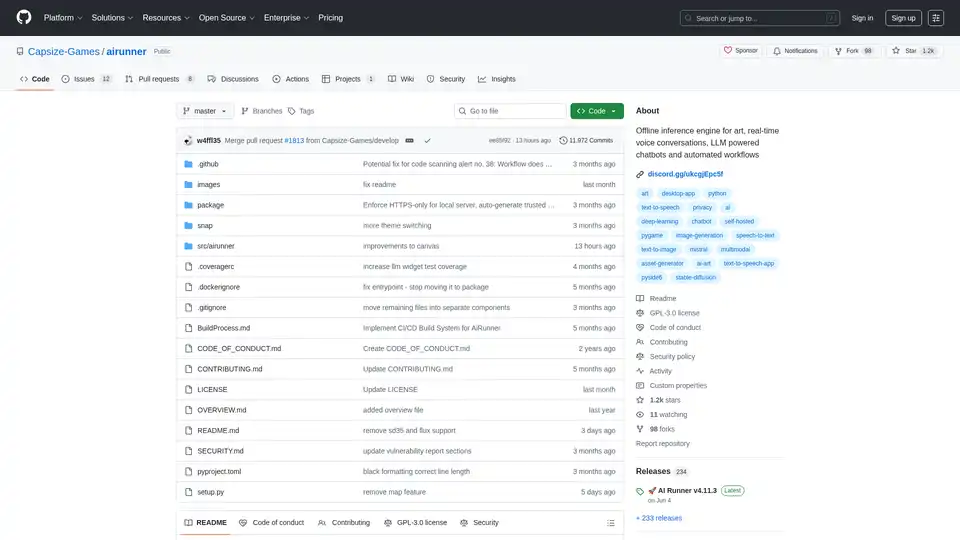

AI Runner is an offline AI inference engine for art, real-time voice conversations, LLM-powered chatbots, and automated workflows. Run image generation, voice chat, and more locally!

Mancer AI provides unrestricted language processing with unfiltered LLMs. Run any prompt without filters or guidelines. Access free and paid models for your AI needs.

Explore the UP AI Development Kit, designed for edge computing, industrial automation, and AI solutions. Powered by Hailo-8 for advanced performance.

Denvr Dataworks provides high-performance AI compute services, including on-demand GPU cloud, AI inference, and a private AI platform. Accelerate your AI development with NVIDIA H100, A100 & Intel Gaudi HPUs.

PremAI is an applied AI research lab providing secure, personalized AI models, encrypted inference with TrustML™, and open-source tools like LocalAI for running LLMs locally.

PremAI is an AI research lab providing secure, personalized AI models for enterprises and developers. Features include TrustML encrypted inference and open-source models.