Inferless

Overview of Inferless

What is Inferless?

Inferless is a platform for deploying machine learning models quickly using serverless GPU inference. It removes the need to manage infrastructure, so developers and data scientists can focus on building and refining their models instead of handling operational complexity.

How Does Inferless Work?

Inferless simplifies deployment by supporting multiple model sources, including Hugging Face, Git, Docker, and a CLI. Users can enable automatic redeployment so that updates roll out without manual intervention. The platform's in-house load balancer scales from zero to hundreds of GPUs on demand, handling spiky and unpredictable workloads with minimal overhead.
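The scale-from-zero behavior can be illustrated with a minimal sketch, assuming a simple rule: run just enough replicas to cover the requests currently in flight, capped at a maximum, and drop to zero replicas when there is no traffic. The function name and its parameters (`in_flight`, `per_replica_concurrency`, `max_replicas`) are hypothetical; this is not Inferless's actual load-balancer logic.

```python
import math


def desired_replicas(in_flight: int, per_replica_concurrency: int, max_replicas: int) -> int:
    """Hypothetical scale-to-zero rule (illustrative only, not Inferless's algorithm).

    Returns how many GPU replicas should be running for the current load.
    """
    if per_replica_concurrency <= 0:
        raise ValueError("per_replica_concurrency must be positive")
    if in_flight <= 0:
        # No traffic: scale to zero so no GPU sits idle.
        return 0
    # Enough replicas so each handles at most per_replica_concurrency requests.
    needed = math.ceil(in_flight / per_replica_concurrency)
    # Never exceed the configured ceiling.
    return min(needed, max_replicas)


# A traffic spike, then silence.
for load in (0, 1, 9, 1000, 0):
    print(load, desired_replicas(load, per_replica_concurrency=4, max_replicas=100))
```

A production autoscaler would also add cooldown periods and smoothing so that brief dips in traffic do not tear down warm replicas; the sketch omits these to keep the core rule visible.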

Key Features

- Custom Runtime: Tailor containers with necessary software and dependencies for model execution.

- Volumes: Use NFS-like writable volumes that support simultaneous connections from multiple replicas.

- Automated CI/CD: Enable auto-rebuild for models, eliminating manual re-imports and streamlining continuous integration.

- Monitoring: Access detailed call and build logs to monitor and refine models during development.

- Dynamic Batching: Increase throughput by combining incoming requests into batches on the server side, making better use of GPU resources.

- Private Endpoints: Customize endpoints with settings for scale, timeout, concurrency, testing, and webhooks.
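Dynamic batching generally works by holding incoming requests briefly and sending them to the model together. The sketch below shows the common "max batch size or max wait" policy on a list of timestamped requests; it is a generic illustration, not Inferless's implementation, and the `Request` type, `form_batches` function, and its parameters are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Request:
    arrival: float  # arrival time in seconds
    payload: str


def form_batches(requests, max_batch_size, max_wait):
    """Group requests into batches (generic dynamic-batching policy, hypothetical names).

    A batch is closed when it reaches max_batch_size, or when the next request
    arrives more than max_wait seconds after the batch's first request (i.e. the
    batch's wait timer would already have expired).
    """
    batches = []
    current = []
    for req in sorted(requests, key=lambda r: r.arrival):
        if current and (
            len(current) >= max_batch_size
            or req.arrival - current[0].arrival > max_wait
        ):
            batches.append(current)  # flush the full or timed-out batch
            current = []
        current.append(req)
    if current:
        batches.append(current)  # flush whatever remains at the end
    return batches


reqs = [Request(0.00, "a"), Request(0.01, "b"), Request(0.02, "c"), Request(0.50, "d")]
for batch in form_batches(reqs, max_batch_size=2, max_wait=0.05):
    print([r.payload for r in batch])
```

Here "a" and "b" share one forward pass, "c" is flushed when "d" arrives too late to join it, and "d" runs alone. Larger `max_wait` values raise throughput at the cost of per-request latency.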

Core Functionality

Inferless provides scalable, serverless GPU inference for models of varying size and complexity. It supports a range of machine learning frameworks and models, which makes it suitable for diverse use cases.

Practical Applications

- Production Workloads: Ideal for enterprises needing reliable, high-performance model deployment.

- Spiky Workloads: Handles sudden traffic surges without pre-provisioning, reducing costs and improving responsiveness.

- Development and Testing: Facilitates rapid iteration with automated tools and detailed monitoring.

Target Audience

Inferless is tailored for:

- Data Scientists seeking effortless model deployment.

- Software Engineers managing ML infrastructure.

- Enterprises requiring scalable, secure solutions for AI applications.

- Startups looking to reduce GPU costs and accelerate time-to-market.

Why Choose Inferless?

- Zero Infrastructure Management: No setup or maintenance of GPU clusters.

- Cost Efficiency: Pay only for usage, with no idle costs, saving up to 90% on GPU bills.

- Fast Cold Starts: Sub-second responses even for large models, avoiding warm-up delays.

- Enterprise Security: SOC 2 Type II certification, penetration testing, and regular vulnerability scans.
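The "no idle costs" point comes down to simple arithmetic: an always-on GPU is billed for every hour in the period, while pay-per-use billing charges only for busy time. The sketch below compares the two under loudly stated assumptions: the same hypothetical $2.00/hour rate for both models, a 720-hour month, and 10% utilization. These numbers are illustrative, not Inferless pricing or measured data, and real serverless rates are often higher per second than reserved rates.

```python
def always_on_cost(hourly_rate: float, hours_in_period: float) -> float:
    """Cost of keeping one GPU running for the whole period, busy or idle."""
    return hourly_rate * hours_in_period


def pay_per_use_cost(hourly_rate: float, busy_seconds: float) -> float:
    """Cost when billed per second only while requests are being served."""
    return hourly_rate * busy_seconds / 3600


def savings_fraction(hourly_rate: float, hours_in_period: float, busy_seconds: float) -> float:
    """Fraction saved by pay-per-use versus always-on (assumes equal hourly rates)."""
    return 1 - pay_per_use_cost(hourly_rate, busy_seconds) / always_on_cost(hourly_rate, hours_in_period)


# Hypothetical inputs: $2.00/hour, 30-day month, GPU busy 72 hours (10% utilization).
rate, month_hours, busy = 2.0, 720, 72 * 3600
print(always_on_cost(rate, month_hours))         # 1440.0
print(pay_per_use_cost(rate, busy))              # 144.0
print(savings_fraction(rate, month_hours, busy))
```

The savings fraction is simply one minus the utilization when rates are equal, so the lower the utilization of a workload, the more pay-per-use billing helps.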

User Testimonials

- Ryan Singman (Cleanlab): "Saved almost 90% on GPU cloud bills and went live in less than a day."

- Kartikeya Bhardwaj (Spoofsense): "Simplified deployment and enhanced performance with dynamic batching."

- Prasann Pandya (Myreader.ai): "Works seamlessly with 100s of books processed daily at minimal cost."

Inferless stands out as a robust solution for deploying machine learning models, combining speed, scalability, and security to meet modern AI demands.

Best Alternative Tools to "Inferless"

Float16.Cloud provides serverless GPUs for fast AI development. Run, train, and scale AI models instantly with no setup. Features H100 GPUs, per-second billing, and Python execution.

AIMLAPI provides a single API to access 300+ AI models for chat, reasoning, image, video, audio, voice, search, and 3D. It offers fast inference, top-tier serverless infrastructure, and robust data security, saving up to 80% compared to OpenAI.

Baseten is a platform for deploying and scaling AI models in production. It offers performant model runtimes, cross-cloud high availability, and seamless developer workflows, powered by the Baseten Inference Stack.

Cloudflare Workers AI allows you to run serverless AI inference tasks on pre-trained machine learning models across Cloudflare's global network, offering a variety of models and seamless integration with other Cloudflare services.

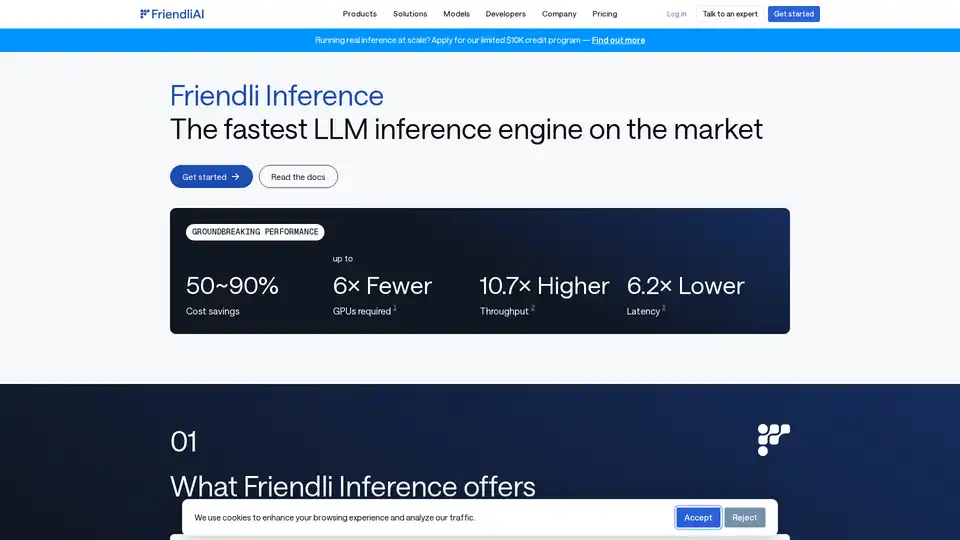

Friendli Inference is an LLM inference engine optimized for speed and cost-effectiveness, claiming to cut GPU costs by 50-90% while delivering high throughput and low latency.

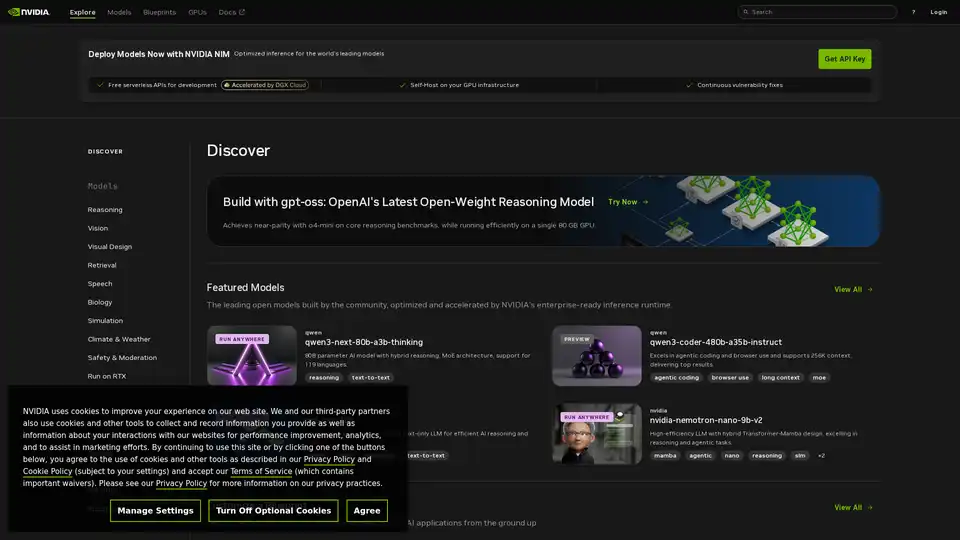

NVIDIA NIM offers APIs for optimized inference and deployment of leading AI models. Build enterprise generative AI applications with serverless APIs, or self-host on your own GPU infrastructure.

Runpod is an AI cloud platform that simplifies AI model building and deployment. It offers on-demand GPU resources, serverless scaling, and enterprise-grade uptime for AI developers.

GPUX is a serverless GPU inference platform that enables 1-second cold starts for AI models like StableDiffusionXL, ESRGAN, and AlpacaLLM with optimized performance and P2P capabilities.

SiliconFlow is a fast AI platform for developers. Deploy, fine-tune, and run 200+ optimized LLMs and multimodal models with simple APIs.

Synexa simplifies AI deployment, letting you run powerful AI models with a single line of code. It is a fast, stable, developer-friendly serverless AI API platform.

fal.ai presents itself as the easiest and most cost-effective way to use generative AI. It lets you integrate generative media models through a free API and offers 600+ production-ready models.

Modal: Serverless platform for AI and data teams. Run CPU, GPU, and data-intensive compute at scale with your own code.

Run any Llama model from Hugging Face instantly, without setting up servers. More than 11,900 models are available, starting at $10/month for unlimited access.